- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Column that tells if value occurs for first time

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

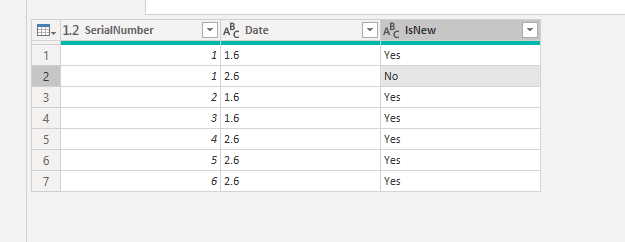

Column that tells if value occurs for first time

Hi!

I would like to add a column in my current table, that indicates if serial number has already mentioned in table before.

Simple demo what I'm looking for:

SerialNumber | Date | IsNewEntry

1 | 1.6. | Yes

2 | 1.6. | Yes

3 | 1.6. | Yes

4 | 2.6. | Yes

1 | 2.6. | No

5 | 2.6. | Yes

6 | 2.6. | Yes

How can I make this IsNewEntry-column?

Thank for your help in advance! 🙂

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here another attempt to solve (in some ways more directly and in a simpler manner - non table.group and related difficulties) the problem, which makes use of the auxiliary index column

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTI01Tcw0zcyMDJQitWJVjIGCZmhCBmBhMxRhEwwVZmChCwwzTLHNMsMUxWqRgtMIUs0p8YCAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [SerNum = _t, Date = _t]),

ct = Table.TransformColumnTypes(Source,{{"SerNum", Int64.Type}, {"Date", type date}}),

#"Sorted Rows" = Table.Sort(ct,{{"Date", Order.Ascending}}),

ai = Table.AddIndexColumn(#"Sorted Rows", "Index", 1, 1),

#"Added Custom" = Table.AddColumn(ai, "IsNew", each if List.Contains(List.FirstN(ai[SerNum],[Index]-1),[SerNum]) then "no" else "yes")

in

#"Added Custom"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Smauro , @Anonymous and @camargos88

I Think I found a solution!

I duplicated my TestResults table and took only [SerialNumber]-, [LocalDate]- and [Team]-columns into a new table.

Then I made sure that [LocalDate] was sorted Ascending and then removed the duplicates from [SerialNumber]-column.

It left those first entries of the serial numbers in the column (Before there were two entries for that serial number ending 1000, on 23.6. and 24.6. but after removing duplicates only 23.6. entry stayed) so I assume this works.

Thank you for your help! I think that list-sorting style was bit heavy to implement to my database when there is over 5M rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm happy for you, but if you had asked that you were interested only in the first occurrence of the serial number you would have had a much faster response.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This was pretty close, but still removed the other columns.

I can now open up bit more the project.

First, my focus is on TestResults-table, which comes from SQL-server as an import. I have separate calendar-table to manage time intelligence in Power BI while slicing visuals. TestResults is independent table and it won't fetch data from another tables, just from the SQL-source.

TestResult-table column names and data types with short descriptions what they contain:

[Id], Data type: Whole number, just increasing number that's not in order when sorting date as ascending/descending.

[SerialNumber], Data type: Text, shows products serial number, can contain also letters.

[Description], Data type: Text, Gives an verbal explanation for test result ("OK", "OK(re-tested)", "FaultReasonX", "FaultResaonY", etc.).

[Product], Data type: Text, contains products model name.

[Result], Data type: Whole number, contains numeric value of test result (1=OK, 2=FaultReasonX, etc.).

[CellName], Data type: Text, contains name of the testing-cell.

[TimestampUtc], Data type: Date/Time, contains date and time when test took place in UTC-timezone.

[IsAutomaticallyManufactured], Data type: True/False, contains information if product was made in automation line or by hand.

[IsTestMod], Data type: True/False, indicates if tested product was actual product or known fulty one to check if tester works properly.

[CellCode], Data type: Text, contains test-station code to identify which one it is (For example TeamX-TestStation1).

[FinalComponentSoftwareVersion], Data type: Text, has information for component sofware version

[SoftwareVersion], Data type: Text, has information of products software version

[Measurements], Data type: Table, contains details of test results

[Team], Data type: Any, shows team name based on the [CellCode]

[LocalTime], Data type: Date/Time, conversion from [TimestampUtc] to local time.

[LocalDate], Data type: Date, Copied [LocalTime], just data type changed.

I need [IsNew]-column added on this TestResults-table. Goal is to see if [SerialNumber] is in the table already. It can be with binary 1=new entry, 0=AlreadyExisting or basic Yes/No-format. Comparisation should be focusing [LocalDate].column, not [TimestampUtc].

I managed also to generalize the advanced editor contents, so here it is:

let

Source = Sql.Database("xx.xx.xx.xx", "ABC"),

dbo_TestResults = Source{[Schema="dbo",Item="TestResults"]}[Data],

#"Added Team column" = Table.AddColumn(dbo_TestResults, "Team", each if Text.StartsWith([CellCode], "aaaa") then "aaaa" else if Text.StartsWith([CellCode], "bbbb") then "bbbb" else if Text.StartsWith([CellCode], "cccc") then "cccc" else if Text.StartsWith([CellCode], "dddd") then "dddd" else if Text.StartsWith([CellCode], "eeee") then "eeee" else if Text.StartsWith([CellCode], "ffff") then "ffff" else if Text.StartsWith([CellCode], "gggg") then "gggg" else if Text.StartsWith([CellCode], "hhhh") then "hhhh" else null),

#"Added LocalTime" = Table.AddColumn(#"Added Team column", "LocalTime", each if [TimestampUtc] < #datetime(2016, 3, 27, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2016, 3, 27, 2, 0, 0) and [TimestampUtc] < #datetime(2016, 10, 30, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2016, 10, 30, 1, 0, 0) and [TimestampUtc] < #datetime(2017, 3, 26, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2017, 3, 26, 2, 0, 0) and [TimestampUtc] < #datetime(2017, 10, 29, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2017, 10, 29, 1, 0, 0) and [TimestampUtc]< #datetime(2018, 3, 25, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2018, 3, 25, 2, 0, 0) and [TimestampUtc] < #datetime(2018, 10, 28, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2018, 10, 28, 1, 0, 0) and [TimestampUtc]< #datetime(2019, 3, 31, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2019, 3, 31, 2, 0, 0) and [TimestampUtc] < #datetime(2019, 10, 27, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2019, 10, 27, 1, 0, 0) and [TimestampUtc]< #datetime(2020, 3, 29, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2020, 3, 29, 2, 0, 0) and [TimestampUtc] < #datetime(2020, 10, 25, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2020, 10, 25, 1, 0, 0) and [TimestampUtc]< #datetime(2021, 3, 28, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2021, 3, 28, 2, 0, 0) and [TimestampUtc] < #datetime(2021, 10, 31, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2021, 10, 31, 1, 0, 0) and [TimestampUtc]< #datetime(2022, 3, 27, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2022, 3, 27, 2, 0, 0) and [TimestampUtc] < #datetime(2022, 10, 30, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else [TimestampUtc]),

#"Duplicated LocalTime for LocalDate" = Table.DuplicateColumn(#"Added LocalTime", "LocalTime", "LocalTime - Copy"),

#"Renamed column to LocalDate" = Table.RenameColumns(#"Duplicated LocalTime for LocalDate",{{"LocalTime - Copy", "LocalDate"}}),

#"Changed LocalDate Type to date" = Table.TransformColumnTypes(#"Renamed column to LocalDate",{{"LocalDate", type date}}),

#"Changed LocalTime Type to date/time" = Table.TransformColumnTypes(#"Changed LocalDate Type to date",{{"LocalTime", type datetime}}),

#"Filtered Rows to start from 2019" = Table.SelectRows(#"Changed LocalTime Type to date/time", each [LocalDate] >= #date(2019, 1, 1))

in

#"Filtered Rows to start from 2019"

----------

[IsNew] should be placed before "Filtered Rows to start from 2019", so I could change the date range if needed.

Hope this helps and clarifies the problem.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

may be you lost this old post which contains suggestion for solution which don't cut away any column from your table.

Here I tryed to make a sort of transplat, following your indication. You should pay attention to the name of column used.,

For instance I called SerNum the serial number column LocalDate the date column. change them if necessary.

try it and good luck

let

Source = Sql.Database("xx.xx.xx.xx", "ABC"),

dbo_TestResults = Source{[Schema="dbo",Item="TestResults"]}[Data],

#"Added Team column" = Table.AddColumn(dbo_TestResults, "Team", each if Text.StartsWith([CellCode], "aaaa") then "aaaa" else if Text.StartsWith([CellCode], "bbbb") then "bbbb" else if Text.StartsWith([CellCode], "cccc") then "cccc" else if Text.StartsWith([CellCode], "dddd") then "dddd" else if Text.StartsWith([CellCode], "eeee") then "eeee" else if Text.StartsWith([CellCode], "ffff") then "ffff" else if Text.StartsWith([CellCode], "gggg") then "gggg" else if Text.StartsWith([CellCode], "hhhh") then "hhhh" else null),

#"Added LocalTime" = Table.AddColumn(#"Added Team column", "LocalTime", each if [TimestampUtc] < #datetime(2016, 3, 27, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2016, 3, 27, 2, 0, 0) and [TimestampUtc] < #datetime(2016, 10, 30, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2016, 10, 30, 1, 0, 0) and [TimestampUtc] < #datetime(2017, 3, 26, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2017, 3, 26, 2, 0, 0) and [TimestampUtc] < #datetime(2017, 10, 29, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2017, 10, 29, 1, 0, 0) and [TimestampUtc]< #datetime(2018, 3, 25, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2018, 3, 25, 2, 0, 0) and [TimestampUtc] < #datetime(2018, 10, 28, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2018, 10, 28, 1, 0, 0) and [TimestampUtc]< #datetime(2019, 3, 31, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2019, 3, 31, 2, 0, 0) and [TimestampUtc] < #datetime(2019, 10, 27, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2019, 10, 27, 1, 0, 0) and [TimestampUtc]< #datetime(2020, 3, 29, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2020, 3, 29, 2, 0, 0) and [TimestampUtc] < #datetime(2020, 10, 25, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2020, 10, 25, 1, 0, 0) and [TimestampUtc]< #datetime(2021, 3, 28, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2021, 3, 28, 2, 0, 0) and [TimestampUtc] < #datetime(2021, 10, 31, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else if [TimestampUtc] >= #datetime(2021, 10, 31, 1, 0, 0) and [TimestampUtc]< #datetime(2022, 3, 27, 2, 0, 0)

then [TimestampUtc]+#duration(0,2,0,0)

else if [TimestampUtc] >= #datetime(2022, 3, 27, 2, 0, 0) and [TimestampUtc] < #datetime(2022, 10, 30, 1, 0, 0)

then [TimestampUtc]+#duration(0,3,0,0)

else [TimestampUtc]),

#"Duplicated LocalTime for LocalDate" = Table.DuplicateColumn(#"Added LocalTime", "LocalTime", "LocalTime - Copy"),

#"Renamed column to LocalDate" = Table.RenameColumns(#"Duplicated LocalTime for LocalDate",{{"LocalTime - Copy", "LocalDate"}}),

#"Changed LocalDate Type to date" = Table.TransformColumnTypes(#"Renamed column to LocalDate",{{"LocalDate", type date}}),

#"Changed LocalTime Type to date/time" = Table.TransformColumnTypes(#"Changed LocalDate Type to date",{{"LocalTime", type datetime}}),

#"Sorted Rows" = Table.Sort(#"Changed LocalTime Type to date/time",{{"LocalDate", Order.Ascending}}),

ai = Table.AddIndexColumn(#"Sorted Rows", "Index", 0, 1),

#"Added Custom" = Table.AddColumn(ai, "IsNew", each if List.Contains(List.FirstN(ai[SerNum],[Index]),[SerNum]) then "no" else "yes")

#"Filtered Rows to start from 2019" = Table.SelectRows(#"Added Custom", each [LocalDate] >= #date(2019, 1, 1))

in

#"Filtered Rows to start from 2019"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It has been 18hrs from the time I implemented this but PC still has not been able to give the resulting table. Tried to restart Power BI yesterday and even left the PC running for night but as I came to office 5mins ago, it is still processing something 😞

It made the index column but the logic behind Yes/No-sorting didn't apparently work. Or maybe would have worked, but it stuck with the processing phase.

I think this would work for smaller datasets but not with this. We were so close with this one 😞

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You could try the logic using a little subset of you dataset.

how many columns and rows your table has?

I could suggest some ideas to try, to analise the situation.

Could you, with you table loaded, add the following step (I give some picture to explain) and tell us the result.

Select groub by and then should only click "OK"

to get the following table:

if this task is completed quickly. you can proceed from here working only on the lines that have count> 1.

PS

one last observation.

why is filtering on posterio lines nwith localtime before 2019?

this way you work on a smaller table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did this, MAde another table with SN and their count. It's linked to the original TestResults-table, so is there now a way to determine the first entry and other entries?

Rows are filtered to start from 2019 because it's unnecessary to have data from before that in this project.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

"Rows are filtered to start from 2019 because it's unnecessary to have data from before that in this project."

I understand that reason. My observation was about the fact that is probably more efficient filter the rows before the "yes" or "no" labeling.

I asked you to give usa un idea of the size of you data set. Without information I'm not able to help you.

I think the better way to manage your situation is that used here

https://community.powerbi.com/t5/Power-Query/CountIfs-by-past-data/m-p/1184949#M38513

I simulated you situation with a table containing 445000 rows and get results in few minutes

PPS

here the code to transform a randomly generated table of 500K rows, with 730 different dates (about 2 years) and 2000 different serila numbers.

You can change these values as you need to simulate your table and see how long it takes.

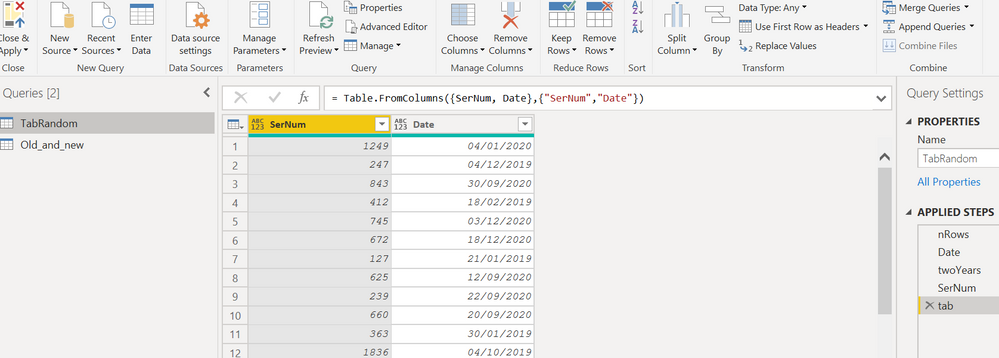

query tabRandom:

let

nRows=500000,

Date = List.Transform(List.Random(nRows), each twoYears{Number.RoundDown(_*730)}),

twoYears=List.Transform({1..730},each Date.AddDays(#date(2018, 12, 31), _)),

SerNum = List.Transform(List.Random(nRows), each Number.RoundDown(_*2000)),

tab=Table.FromColumns({SerNum, Date},{"SerNum","Date"})

in

tab

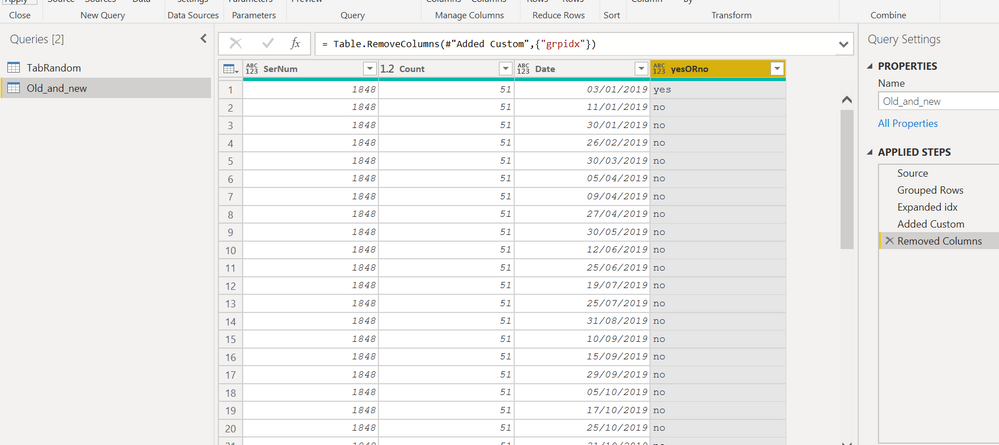

query old_and_new

let

Source = TabRandom,

#"Grouped Rows" = Table.Group(Source, {"SerNum"}, {{"Count", each Table.RowCount(_), type number},{"idx",each Table.AddIndexColumn(Table.Sort(_,"Date"),"grpidx",0,1)}}),

#"Expanded idx" = Table.ExpandTableColumn(#"Grouped Rows", "idx", {"Date", "grpidx"}, {"Date", "grpidx"}),

#"Added Custom" = Table.AddColumn(#"Expanded idx", "yesORno", each if [grpidx]=0 then "yes" else "no"),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"grpidx"})

in

#"Removed Columns"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My apologies, I missed the question about rows and columns completely.

Currently there is almost 5,2M rows and 16 columns, adding 2250 rows a day in average.

Of course with filtering the row count is lower but still remarkable.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

Haven't tested this code out, but you could try this:

let

Source = Sql.Database("xx.xx.xx.xx", "ABC"),

dbo_TestResults = Source{[Schema="dbo",Item="TestResults"]}[Data],

#"Added Team column" = Table.AddColumn(dbo_TestResults, "Team", each if Text.StartsWith([CellCode], "aaaa") then "aaaa" else if Text.StartsWith([CellCode], "bbbb") then "bbbb" else if Text.StartsWith([CellCode], "cccc") then "cccc" else if Text.StartsWith([CellCode], "dddd") then "dddd" else if Text.StartsWith([CellCode], "eeee") then "eeee" else if Text.StartsWith([CellCode], "ffff") then "ffff" else if Text.StartsWith([CellCode], "gggg") then "gggg" else if Text.StartsWith([CellCode], "hhhh") then "hhhh" else null),

// This Function could go elsewhere

DateTimeToLocal = (d as anynonnull) as duration =>

let

d = if (d is datetime ) then d else DateTime.From(d),

LastSundayOfOctober = DateTime.From(Date.StartOfWeek(#date(Date.Year(d), 10, 31), Day.Sunday)) + #duration(0, 1, 0, 0 ),

LastSundayOfMarch = DateTime.From(Date.StartOfWeek(#date(Date.Year(d), 3, 31), Day.Sunday)) + #duration(0, 2, 0, 0 ),

Return = if (d >= LastSundayOfMarch and d < LastSundayOfOctober) then #duration(0,3,0,0) else #duration(0,2,0,0)

in

Return,

#"Added LocalTime" = Table.AddColumn(#"Added Team column", "LocalTime", each DateTime.From([TimestampUtc]) + DateTimeToLocal([TimestampUtc]), type datetime),

#"Added LocalDate" = Table.AddColumn(#"Added LocalTime", "LocalDate", each Date.From(LocalTime), type date),

// Serial number check

#"Select SN & Date Columns" = Table.SelectColumns( #"Added LocalDate", {"SerialNumber", "LocalDate"}),

#"Group to find min date" = Table.Group( #"Select SN & Date Columns", {"SerialNumber"}, {{"MinDate", each List.Min([LocalDate]), type date}}),

#"Add Key to Join" = Table.ReplaceKeys ( #"Group to find min date", {[Columns = {"SerialNumber", "MinDate"}, Primary= true]}),

#"Merge Back" = Table.NestedJoin( #"Added LocalDate", {"SerialNumber", "LocalDate"}, #"Add Key to Join", {"SerialNumber", "MinDate"}, "IsNew", JoinKind.LeftOuter),

#"Fix IsNew Column" = Table.TransformColumns(#"Merge Back", {{"IsNew", each if Table.IsEmpty(_) then 0 else 1, Int64.Type}}),

#"Filtered Rows to start from 2019" = Table.SelectRows(#"Fix IsNew Column", each [LocalDate] >= #date(2019, 1, 1))

in

#"Filtered Rows to start from 2019"

As you see i've changed a bit the DST fix.

This step should also be changed, it must be too heavy with your db:

#"Added Team column" = Table.AddColumn(dbo_TestResults, "Team", each if Text.StartsWith([CellCode], "aaaa") then "aaaa" else if Text.StartsWith([CellCode], "bbbb") then "bbbb" else if Text.StartsWith([CellCode], "cccc") then "cccc" else if Text.StartsWith([CellCode], "dddd") then "dddd" else if Text.StartsWith([CellCode], "eeee") then "eeee" else if Text.StartsWith([CellCode], "ffff") then "ffff" else if Text.StartsWith([CellCode], "gggg") then "gggg" else if Text.StartsWith([CellCode], "hhhh") then "hhhh" else null),

You could maybe try something like this:

#"Added Team column" = Table.AddColumn(dbo_TestResults, "Team", each let t = Text.Start([CellCode], 4) in if t = "aaaa" then "aaaa" else if t = "bbbb" then "bbbb" else if t "cccc" then "cccc" else if t = "dddd" then "dddd" else if t = "eeee" then "eeee" else if t = "ffff" then "ffff" else if t = "gggg" then "gggg" else if t = "hhhh" then "hhhh" else null),

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

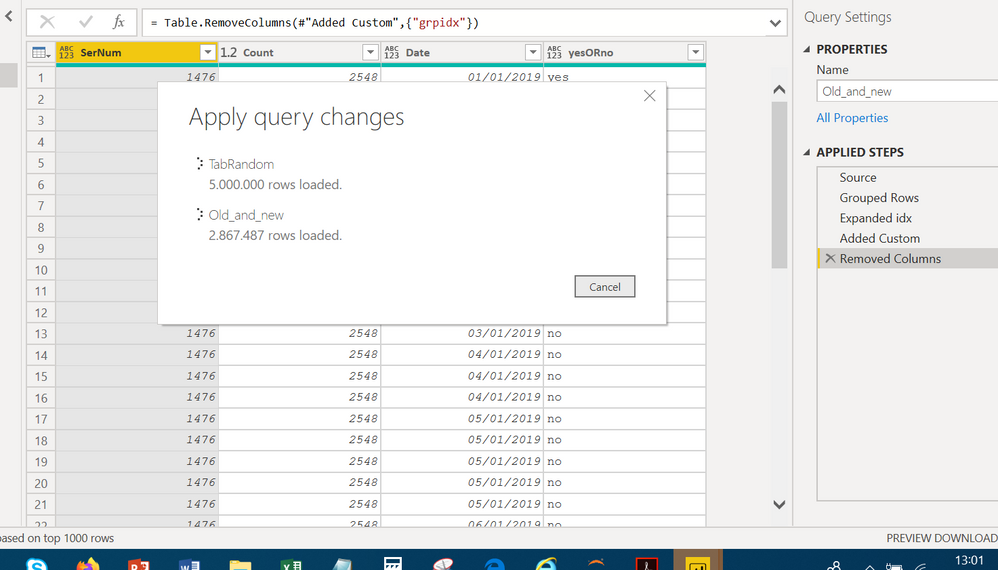

I tried to make a homemade bench, with the following results.

| sec | 25 | 57 | 163 | 1253 |

| rowsX1000 | 500 | 1000 | 2000 | 4000 |

Using the exponential interpolation function of excel the estimate for a table of 5.2M is 4890sec.

Of course the time for your table (19 cols versus 2 cols9 and the time to have the table avalaible is greater.

Codo to generate a table of 4M rows X 2 columns

let

nRows=4000000,

Date = List.Transform(List.Random(nRows), each twoYears{Number.RoundDown(_*720)}),

twoYears=List.Transform({1..730},each Date.AddDays(#date(2018, 12, 31), _)),

SerNum = List.Transform(List.Random(nRows), each Number.RoundDown(_*2000)),

tab=Table.FromColumns({SerNum, Date},{"SerNum","Date"})

in

tab

code for the time calculation from Chris Webb's blog

let

idxgrp = (tab)=>

let

grp = Table.Group(tab, {"SerNum"}, {{"Count", each Table.RowCount(_), type number},{"idx",each Table.AddIndexColumn(Table.Sort(_,"Date"),"grpidx",0,1)}}),

yyy = Table.ExpandTableColumn(grp, "idx", {"Date", "grpidx"}, {"Date", "grpidx"})

in yyy,

NumberOfRows = Number.ToText(Table.RowCount(idxgrp(TabFourM))),

EndTime = DateTime.LocalNow(),

Output = "Query analized " & NumberOfRows

& " rows and took "

& Duration.ToText(EndTime - DateTime.FixedLocalNow())

in

Output

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

really remarkable, indeed.😁.

I tryed whith nRows=5M:

few minutes to generate the random ytable (But you already have this)

##############################################################time 12:57

after 4 minutes:

Then within 30 minutes it should end.

but if we remove the add of the column that translates to "yes" and "no" the progressive numbers associated with the serial numbers the time decreases a lot, in my opinion.

I say more, turning "0" to "yes" and "1, 2,3, .." to "no" you lose information as well as time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you! I'm trying to implement this and now it's crunching those numbers, I'll let you know if this worked! 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Try this mcode:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTLUM1OK1YlWMkJiGyOxTYBsIyjbEIltisQ2g7FjAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [SerialNumber = _t, Date = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SerialNumber", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"SerialNumber"}, {{"Rows", each Table.AddColumn(Table.AddIndexColumn(Table.Sort(_, {"Date", Order.Ascending}), "Index", 1,1), "IsNew", each if [Index] = 1 then "Yes" else "No" ), type table [SerialNumber=number, Date=text, IsNew=text]}}),

#"Removed Other Columns" = Table.SelectColumns(#"Grouped Rows",{"Rows"}),

#"Expanded Rows" = Table.ExpandTableColumn(#"Removed Other Columns", "Rows", {"SerialNumber", "Date", "IsNew"}, {"SerialNumber", "Date", "IsNew"})

in

#"Expanded Rows"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here another attempt to solve (in some ways more directly and in a simpler manner - non table.group and related difficulties) the problem, which makes use of the auxiliary index column

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTI01Tcw0zcyMDJQitWJVjIGCZmhCBmBhMxRhEwwVZmChCwwzTLHNMsMUxWqRgtMIUs0p8YCAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [SerNum = _t, Date = _t]),

ct = Table.TransformColumnTypes(Source,{{"SerNum", Int64.Type}, {"Date", type date}}),

#"Sorted Rows" = Table.Sort(ct,{{"Date", Order.Ascending}}),

ai = Table.AddIndexColumn(#"Sorted Rows", "Index", 1, 1),

#"Added Custom" = Table.AddColumn(ai, "IsNew", each if List.Contains(List.FirstN(ai[SerNum],[Index]-1),[SerNum]) then "no" else "yes")

in

#"Added Custom"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

here

https://community.powerbi.com/t5/Power-Query/CountIfs-by-past-data/m-p/1184949#M38513

is treated a similar problem (in some respects a generalization of your problem).

Considere to use this solution for you problem.

You would have 0 for a new serial number and an increasing sequence of numbers> 0 for the others.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

try this (the first parameter inside [] is the column name of your table before,the second SerNum after '=' sign is the column name of your currant table:

= Table.AddColumn(#"Changed Type", "IsNew", each Table.Contains(TableBefore, [SerNum=[SerNum]]))

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you have the custom column expression for this? I tried to apply but it shows error 😞

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the code for the add_custom_column tool is:

Table.Contains(TableBefore, [SerNum=[SerNum]])

which set to true or false the rows value.

If you should have problem , let me see (copy and paste here) the contents of your advanced editor (and/or give the exact name of tabbles and columns) and I will try to add the requested lines of code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Table name: TestResults

Current columns I want to use to compare: [SerialNumber], [LocalDate]

Wanted column: [IsNew]

So I just want to check if serial number has appeared before in the table and output to [IsNew]-column is "Yes" or "No" based on that.

Hope this helps.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.