
Caching API access token

Hi everyone,

I have multiple queries that request data from an API, and each request needs an access token. The API limits token requests: only five access tokens can be requested per second. Each access token is valid for one hour, which is enough time for all queries to request their data with a single token.

How do I cache that access token across multiple queries so that scheduled refresh works in the PBI-Service?

I tried to wrap the access token request in a function, but that didn't solve it. I always get this error when refreshing the dataset online:

- Processing error: [Unable to combine data] Section1/data_orig/filtered_table references other queries or steps, so it may not directly access a data source. Please rebuild this data combination.

- Cluster URI: WABI-NORTH-EUROPE-E-PRIMARY-redirect.analysis.windows.net

- Activity ID: 04cd4d1d-b5cc-47cb-8c33-63b429f0ff88

- Request ID: c1466cd1-5fb2-fdb9-1f46-9b48c2505e89

- Time: 2022-11-02 11:54:06Z

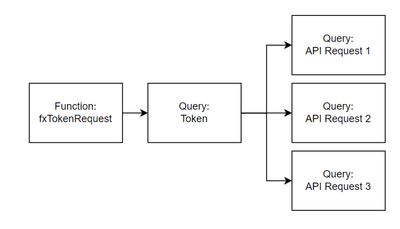

The PBI-Desktop version works. The query staging looks like this:

- I get the token via a function Token_Response = Web.Contents(...)

- The token is just a string and is passed into a simple query via Token = Token_Response[token]{0}. This step could probably be skipped, but I thought it would make the function run only once and cache its result for all queries in step three.

- The individual queries use the (single) token for authentication in their headers, like

token = Token, ..., #"Authorization" = "Bearer " & token
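A minimal M sketch of the staging described above, for illustration only. The base URL, the paths, the request body and the `access_token` field name are hypothetical placeholders (the original elides the actual `Web.Contents(...)` call, and real credentials are omitted). Keeping the base URL static and passing the variable parts through `RelativePath` and `Headers` is the pattern commonly recommended so that the service can validate the web source for scheduled refresh. The block contains two separate queries.

```powerquery-m
// Query "GetToken": a function that returns the access token as text
() as text =>
let
    Response = Web.Contents(
        "https://api.example.com",                  // hypothetical base URL
        [
            RelativePath = "oauth/token",           // hypothetical token endpoint
            Headers = [#"Content-Type" = "application/x-www-form-urlencoded"],
            // hypothetical body, credentials omitted; supplying Content makes this a POST
            Content = Text.ToBinary("grant_type=client_credentials")
        ]
    ),
    Token = Json.Document(Response)[access_token]   // assumes a JSON body with an "access_token" field
in
    Token

// Query "Orders" (hypothetical name): one of the individual API request queries
let
    Token = GetToken(),
    Response = Web.Contents(
        "https://api.example.com",
        [
            RelativePath = "v1/orders",             // hypothetical resource path
            Headers = [Authorization = "Bearer " & Token]
        ]
    ),
    Data = Json.Document(Response)
in
    Data
```

Because every data query invokes `GetToken()`, each one can send its own token request during a refresh; Power Query does not promise to share that result across queries.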

I think one problem is that this still calls the function every time an API request query is refreshed, not just once for all of them. The other problem is that I cannot use a query inside another query (Formula.Firewall).

For completeness:

- I already tried the option "Always ignore Privacy Level settings". It didn't help for the online refresh.

- I included the token request in each API request query so that each one uses its own token. This works for the scheduled refresh online, but it hits the token request limit, so it is not an option.

- I tried to write a function that adds a new row to a table containing the last refresh datetime, the token expiry datetime (one hour after the last refresh) and the cached token itself. That table would then decide whether a new token has to be requested or whether the cached one is still valid and can be used for another API request query. Unfortunately, I couldn't get it to work: at some point I ran into recursive queries, or simply didn't know how to store the datetime values so that Power BI doesn't forget them the next time the function is called.

Now = DateTime.LocalNow(), // datetime

TokenValidity = TokenTable[Valid]{0}, // datetime of token expiry

Token = if TokenValidity > Now

then TokenTable[Token]{0} // use the token that is still valid

else Web.Contents(...) // request a new token and cache it in TokenTable (Table.InsertRows(...))
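One likely reason this idea stalls: M values are immutable. `Table.InsertRows` returns a new table and never changes `TokenTable` itself, and nothing a query computes is written back anywhere for the next refresh. The snippet below uses a hypothetical, inline `TokenTable` and a placeholder token string to show the effect.

```powerquery-m
let
    // Hypothetical, empty cache table with the columns described above
    TokenTable = #table(type table [Token = text, Valid = datetime], {}),
    // Table.InsertRows returns a NEW table; TokenTable is left unchanged
    Updated = Table.InsertRows(
        TokenTable,
        0,
        {[Token = "placeholder-token", Valid = DateTime.LocalNow() + #duration(0, 1, 0, 0)]}
    ),
    Result = [
        RowsInTokenTable = Table.RowCount(TokenTable), // 0
        RowsInUpdated = Table.RowCount(Updated)        // 1
    ]
in
    Result
```

A dataflow entity, by contrast, is stored after each refresh, which is what the accepted answer relies on.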

Thanks for any help or hints in this regards. ✌️😁


I solved it by creating a workspace dedicated to the access token and the API data requests via dataflows.

1. Create a workspace.

2. Create a dataflow for the access token.

   - Simply copy and paste the M code from the existing token query into the dataflow editor.

3. Create a dataflow for the data requests.

   - This is also mostly copy and paste. Add the token from the token dataflow (step 2) as a separate data source.

4. Set up a scheduled refresh for both dataflows.
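A sketch of one query in the data-request dataflow, assuming the token dataflow exposes a one-row entity that has been added to this dataflow as a query named `Token` with a column named `access_token` (both names hypothetical; the navigation steps that bring the entity in are generated by the Dataflows connector and are not shown). The URL and path are placeholders as well.

```powerquery-m
let
    // Read the single cached token from the entity of the token dataflow
    AccessToken = Token{0}[access_token],
    Response = Web.Contents(
        "https://api.example.com",                  // hypothetical base URL
        [
            RelativePath = "v1/orders",             // hypothetical resource path
            Headers = [Authorization = "Bearer " & AccessToken]
        ]
    ),
    Data = Json.Document(Response)
in
    Data
```

Since this query combines the `Token` entity with a web source, it is the kind of step where the privacy-level setting mentioned below may be needed.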

Things to remember:

- Only enable load for tables that you need to share as data sources for other objects, such as the final dataset of a report.

- You will probably need to configure the dataflows to ignore all privacy levels. In my case, the system prompted me to do this.

- Make sure you manually refresh the token dataflow so that a valid token exists before you edit the data requests. Otherwise, the editor throws the error "The credentials provided for the Web source are invalid. (...)". If you then click "Configure connection" > "Connect" > "Save & Close", you end up with a new web source in the lineage view even though it is the same as before. This is ugly and should be avoided; so far, I haven't found a way to remove such a redundant web source. Since a token is valid for one hour in my case, I schedule the refresh hourly, so I don't have to worry about this.

The advantages of this approach: all credentials are stored in a separate workspace. Users with view rights, such as report builders in other workspaces, can only see the tables that have "Enable load" turned on. Each dataflow can also have its own scheduled refresh. This gives a clean governance structure for a growing team with different roles. Everything stays within the PBI-Service. And it solves the token caching problem: only one token is requested and used.


Hi @PhilippDF ,

Please see if the document can help:

API Management Policy for Access Token Acquisition, Caching and Renewal - Microsoft Community Hub

Best Regards,

Community Support Team _ kalyj

