
Azure Auto ML and Power BI

Hi,

I have used Azure Auto ML to build a model and have deployed it successfully. I can also use the Test function that sits under the Endpoint option for my model.

I now wish to call this model from PBI Desktop. My issue is what format the data should be in when I load it into PBI. I know that once it is loaded I can click the Azure Machine Learning icon in the Query Editor and see my list of Azure ML models; however, it only prompts for two things:

data and method.

For data, I was hoping I could simply pass a table in which each row has the columns I used to train my model, with method set to 'predict'.

I have also tried loading the data as a single column, where each row holds a JSON payload with all of that row's data fields.

Any help appreciated.

Thanks
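For reference, here is a minimal Python sketch of the row-per-record JSON body described in the question. It assumes (this is not confirmed anywhere in the thread) that the AutoML-generated scoring script accepts a body shaped like `{"data": [{column: value, ...}, ...], "method": "predict"}`. The scoring URI, key, and column names are hypothetical placeholders, and the request is only built, not sent.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your own endpoint's scoring URI and key.
SCORING_URI = "https://example.invalid/score"
API_KEY = "<your-endpoint-key>"


def build_payload(rows, method="predict"):
    """Wrap a list of row dicts (one dict per record) in the assumed
    {"data": [...], "method": ...} envelope."""
    return {"data": [dict(row) for row in rows], "method": method}


def build_request(rows, method="predict"):
    """Build (but do not send) a POST request carrying the JSON payload."""
    body = json.dumps(build_payload(rows, method)).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # Key-based auth on Azure ML endpoints uses a Bearer token header.
        "Authorization": f"Bearer {API_KEY}",
    }
    return urllib.request.Request(SCORING_URI, data=body, headers=headers, method="POST")


# Hypothetical feature columns, standing in for the ones used in training.
rows = [
    {"age": 42, "income": 51000.0, "region": "North"},
    {"age": 29, "income": 38000.0, "region": "South"},
]
req = build_request(rows)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Sending it with `urllib.request.urlopen(req)` is deliberately left out. Whether your endpoint expects exactly this envelope depends on the scoring script AutoML generated for your model, so compare it with what the endpoint's Test tab or Swagger document shows before relying on it.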


Hi,

This is the latest reply I have from MS on this. To be honest, I'm not sure what the Swagger thing is for, but it looks like an underlying bug that MS is now investigating.

*************

I’ve downloaded the same data from Kaggle and built a classification model with that. It looks like the input schema is not being generated for scoring purposes. Here’s the documentation for the scoring script and how it needs to be formatted for schema generation: https://docs.microsoft.com/en-us/azure/machine-learning/how-to-deploy-advanced-entry-script#automati...

About a month or so ago, the product team added a new parameter to the script to return the predicted probabilities for classification tasks. This ask came from customer feedback wanting to deploy a model and get the underlying probabilities, not just the label. As you see in the following picture, a parameter called “method” is in the JSON test script. I think versioning the classification model’s scoring script has affected the model consumption method with the form editor. I’ve raised the issue to the product team; they’re investigating now.

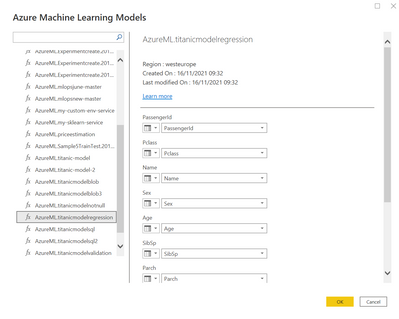

Meanwhile, I’ve built a regression model with the same data. On the AML test page, I can see the form editor now. When I connect this model with PBI, I can match the PBI dataset with the model attributes. The outputs are numeric, but you can set a threshold to convert the numeric results into categorical values. I believe that’s the quickest fix for now. I’ll keep you informed as soon as I hear an accurate answer/timeline to fix the bug for the classification models.

****************
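The regression workaround in the reply above comes down to thresholding a numeric score. A minimal Python sketch, assuming a single cut-off of 0.5 and the labels "Yes"/"No" (both are hypothetical choices, not taken from the thread); in Power BI the same rule could be expressed as a conditional column on the model's output.

```python
from typing import Iterable, List


def to_label(score: float, threshold: float = 0.5,
             positive: str = "Yes", negative: str = "No") -> str:
    """Map a numeric regression output to a class label.
    Scores at or above the threshold count as the positive class."""
    return positive if score >= threshold else negative


def to_labels(scores: Iterable[float], threshold: float = 0.5) -> List[str]:
    """Apply to_label to every prediction returned by the endpoint."""
    return [to_label(s, threshold) for s in scores]


print(to_labels([0.12, 0.5, 0.87]))  # ['No', 'Yes', 'Yes']
```

Where to put the threshold is a modelling decision: 0.5 is only a sensible default if the regression target was encoded as 0/1.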


Did this ever get resolved? It looks like I'm up against the same issue. I've registered & deployed a model using AzureML Python SDK following this guide: https://github.com/Azure/MachineLearningNotebooks/blob/master/how-to-use-azureml/deployment/deploy-t...

I'm able to query the endpoint, however PBI desktop shows the same screenshot you have - just a "data" and "method" field.
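To illustrate why Power BI surfaces exactly a "data" field and a "method" field, here is a minimal, hypothetical sketch of a scoring entry point that takes both: `data` carries the rows and `method` selects labels or probabilities, in line with the Microsoft reply above. `ToyClassifier` and the `run(data, method)` signature are illustrative assumptions, not the code AutoML actually generates.

```python
import json
from typing import Dict, List


class ToyClassifier:
    """Stand-in for a trained model: flags rows whose 'score' feature exceeds 0.5."""

    def predict_proba(self, rows: List[Dict]) -> List[List[float]]:
        # Clamp the feature to [0, 1] and return [P(class 0), P(class 1)] per row.
        probs = []
        for row in rows:
            p = min(max(float(row["score"]), 0.0), 1.0)
            probs.append([1.0 - p, p])
        return probs

    def predict(self, rows: List[Dict]) -> List[int]:
        # The label is the class with the higher probability.
        return [int(p1 > p0) for p0, p1 in self.predict_proba(rows)]


model = ToyClassifier()


def run(data: List[Dict], method: str = "predict") -> str:
    """Hypothetical entry point: 'method' chooses labels or probabilities."""
    if method == "predict":
        result = model.predict(data)
    elif method == "predict_proba":
        result = model.predict_proba(data)
    else:
        raise ValueError(f"unsupported method: {method!r}")
    return json.dumps(result)


rows = [{"score": 0.2}, {"score": 0.9}]
print(run(rows))  # [0, 1]
print(run(rows, "predict_proba"))
```

In a real deployment the entry script also declares an input schema (the `inference-schema` decorators covered in the documentation linked above). If that schema is not generated, a client such as Power BI has no column list to map your table onto, which is consistent with seeing only the generic "data" prompt.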


The latest message from my MS contact... a waiting game, I guess!

'I built several models to identify the main issue and raised a ticket with the product team. They've been working on this integration, but I haven't heard any update from them yet. I've just sent a reminder to the product team and will keep you informed as soon as possible.'


Hi

Did you get any direct response from Microsoft yet?

tim_pasytr


I did, and they completely misunderstood my ask: they ignored the fact that I mentioned Azure Auto ML and talked only about AutoML in PBI. I have a call set up on 16 Nov. The MS guy is ill but hopefully back by then. Give me a nudge on the 17th if I forget to update this post.


I have the same problem; I posted it yesterday. The test worked fine on the Azure AutoML side, but only one column shows up on the PBI side.


I've raised the issue with my MS account manager; she is putting me in touch with someone internally. It's a waiting game now.


Hi, have you heard back from MS yet? I am running into the same issue and looking for help.


Hi, not yet, will chase again now.


Hi @arifsyeduk1 ,

What you get from AutoML (and see in your picture) are functions that apply the trained ML logic to the current data.

So you have to import the data that you want the Azure ML logic applied to into your model as well.

Then apply the functions to them.

Imke Feldmann (The BIccountant)


Hi,

Sorry, I don't quite understand what you are saying or asking me to try. The model is deployed; I'm hoping to import a new data set into PBI and apply the model to it, so that the outcome appears as an additional column in PBI.

Thanks


Hi @arifsyeduk1 ,

I'm trying to understand your request/expectation:

Where do you expect the new data set to come from (" ...I'm hoping that I can import a new data set in to PBI ...")?

That is what I meant with "... So you have to import the data that you want the Azure ML logic applied to into your model as well. ... "

Imke Feldmann (The BIccountant)


Hi,

I have a new data set (CSV) that contains all the attributes/features of the training data set I used in Azure ML. The model has been trained and deployed. I have now imported the new data set into PBI; it does not have the 'outcome' column, which is fine, as you can see in my second screenshot. When I come to associate this data in PBI with the model, one of the prompts (also in the second screenshot) is 'data', and it only lets me select one column/feature. My question is: what data format does PBI expect so that the data can be passed into the model row by row?

I would be happy to set up an MS Teams call to show the scenario.

Thanks
