- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Append query issues with new data and deleting old...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Append query issues with new data and deleting older data (possible M coding needed)

hello PowerQuery community,

I am trying to create a dashboard basis some files that are emailed to us by another department. there are two issues that I am unable to solve which possibly require M coding. Am keen to explore what is possible to achieve with PowerQuery. I am using Excel 365 & PowerBI Desktop free version.

Let's suppose there are three input files.

file #1- filename = "Report_2020049.xlsx"

representation table

| start_date | variable1 | variable2 | variable3 |

| 26-Apr-20 | |||

| 27-Apr-20 | |||

| 28-Apr-20 | |||

| 29-Apr-20 |

file #2- filename = "Report_20200430.xlsx"

representation table

| start_date | variable1 | variable2 | variable3 |

| 27-Apr-20 | |||

| 28-Apr-20 | |||

| 29-Apr-20 | |||

| 30-Apr-20 |

file #3- filename = "Report_20200501.xlsx"

representation table

| start_date | variable1 | variable4 | variable3 | variable2 |

| 28-Apr-20 | ||||

| 29-Apr-20 | ||||

| 30-Apr-20 | ||||

| 01-May-20 |

expected output after appending 3 files

| start_date | variable1 | variable4 | variable3 | variable2 |

| 26-Apr-20 | ||||

| 27-Apr-20 | ||||

| 28-Apr-20 | ||||

| 29-Apr-20 | ||||

| 30-Apr-20 | ||||

| 01-May-20 |

As you may have noticed, the input files have rolling data for the last 4 days since the size of the data is huge. Each date here actually has close to 100,000+ rows of data.

As of now, I am manually maintaining a seperate sheet that consists of unique dates as has been created in the output table. Whenever the most recent file is received, I delete the previous 3 days data and replace it with the current 4-days data. One of the main reasons to consider the last 4 days is that there may be chances of data being overwritten so the most current version must be considered. Another big problem is that new column was added from 1st May onwards (Variable4) and must be included in the appended table.

to summarise my questions:

1) how do we append new data to existing query and delete older dates data? (one solultion i'm thinking is that I may have to generate a unique index key for each file and in case of duplication between dates, i have to delete the smallest values??) This is being achieved manually right now but I would rather have this achieved by PowerQuery.

2) while appending two tables of different structures, how does one ensure additional columns are also added?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

I think something like this should work - you may need to adjust it for your needs:

The idea in brief:

1. Start from the LATEST file moving up the stream (this ensures new data overwrite old where they change)

2. Do anti join/merge on each next file/input to get only lines missing in the combined table

3. Combien the join output with the combined table

4. Repeat with the next file.

On large files, it may take quite a while to run, so I would suggest considering using some form of "incremental" refresh to speed up (so only the "new" file was processed)

The combiner function:

(existing as table, new as table)=>

let

Source = existing,

#"Merged Queries" = Table.NestedJoin(Source, {"Date"}, new, {"Date"}, "join", JoinKind.RightAnti),

#"Removed Other Columns" = Table.SelectColumns(#"Merged Queries",{"join"}),

#"Expanded join" = Table.ExpandTableColumn(#"Removed Other Columns", "join", Table.ColumnNames(new), Table.ColumnNames(new)),

#"Appended Query" = Table.Combine({#"Expanded join", Source})

in

#"Appended Query"

This is how it is called in the main query:

let

Source = {t3, t2, t1},

Output = List.Accumulate(Source, #table(type table[Date=date, Value = number, FileName = text], {}), (a, n)=> fCombineEx(a, n))

in

Output

These are the t1-3 tables:

DateValueFileName

|

DateValueFileName

|

DateValueFileName

|

New columns are added automatically.

Kind regards,

John

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

I think the main problem with your report is that you have historical data from the past every day, and you want to delete them and keep the incremental data. Can you upload several report files to remove sensitive data? I'll see if I can solve and provide an optimization

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You've asked several questions so let me see if I can tackle them one at a time:

Question 1) how do we append new data to existing query and delete older dates data?

Without having seen your data, I would take this approach. You are importing files, so just select the most recent 3/4 days of files. Do this:

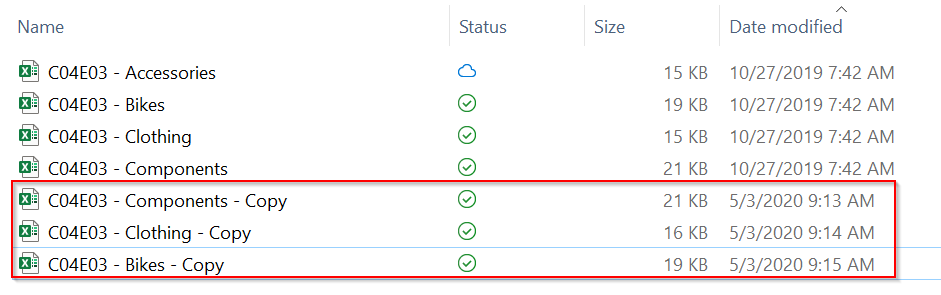

- Assume files that are like the following in a folder, and I want the most recent 3 files. This could be a hard drive folder, onedrive, or SharePoint folder. The SharePoint method is slighty different, but same concept.

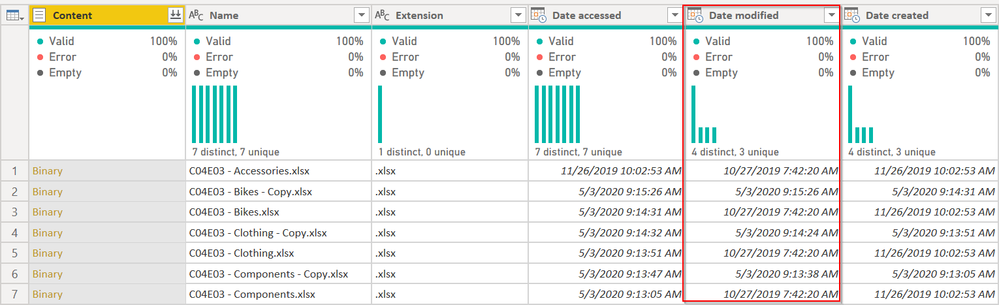

- In Power Query, get data from the folder, and hit Transform

- We want to get the 3 most recently added files. It will be in the Date Modified column of Power Query

- Filter by the latest in there. This is wrong, but will generate the code we want to tweak.

- The formula bar now has:

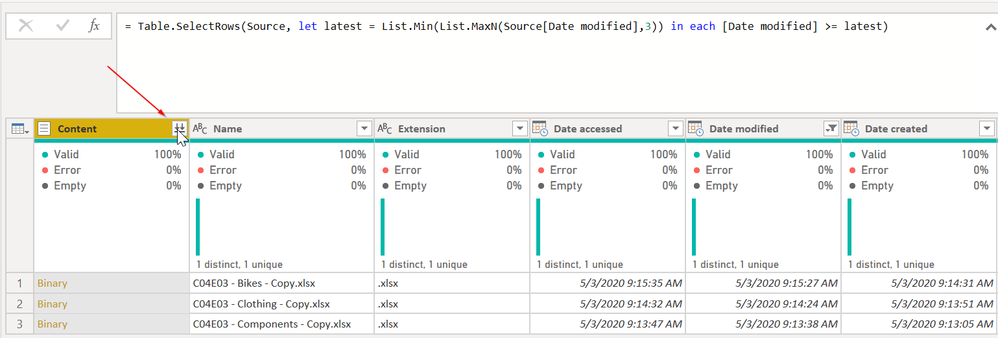

- Table.SelectRows(Source, let latest = List.Max(Source[Date modified]) in each [Date modified] = latest)

- Change this to

- Table.SelectRows(Source, let latest = List.Min(List.MaxN(Source[Date modified],3)) in each [Date modified] >= latest)

- I changed the List.Max to ListMaxN, and told it to get the top 3. So it has all of the May 3 dates, my top 3 dates. Then I used List.Min() around that to get the smallest value, then i change the = latest to >= latest. Now it will always pull the latest 3 files, unless there is some sort of tie where more than one file as identical date/time stamps.

- Press the Combine button, and it will walk you through a wizard to combine the files.

- You'll notice in your queries on the left Power Query created a folder of queries and other folders. This may be the folder query @Greg_Deckler was talking about. It is some magic farie dust Power Query uses when combining files. 😉

Question 2) while appending two tables of different structures, how does one ensure additional columns are also added?

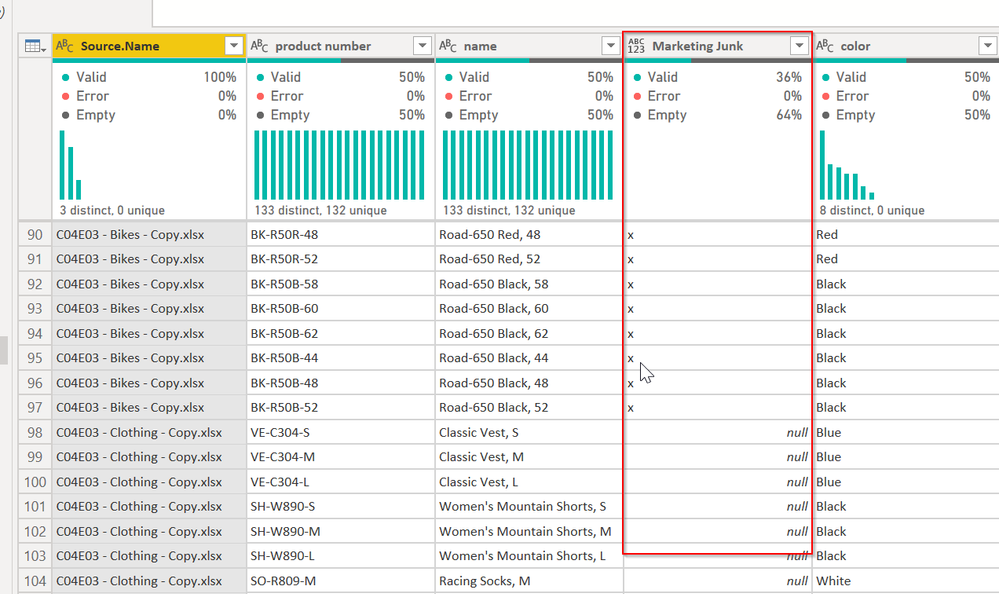

This should happen automatically when you import using the Combine operation above. To validate this, I went into one of the files and added a [Marketing Junk] column, saved it, then went back into Power Query and simply told it to refresh. This was the result:

For the Bike file, the column with the 'x' char I put in there for testing is there. For the Clothing and other files, the column is there, but null.

At this point, if you want to further transform by removing columns, I strongly recommend you select the columns you want and then use the Remove Other Columns functionality. The reason is, if you have a column today called [ID] and you are removing it, if next week the [ID] column is gone from your last 3 files, Power Query will break because there is no [ID] column to remove. but if you select all but the [ID] column and Remove Other Columns, Power Query uses a Table.SelectColumns() function and is really selecting what you want. It doesn't care that [ID] is there and gone.

The flip side of this is if you get a new [Valid Data] column in a new file, that will actually not show up either. It will be in Power Query, but Power Query will discard it.

In that case, it gets tricky. If your new column names follow a predictable pattern (variable1, variable2, variable3) then we can wild-card the Table.SelectColumns().

But that is getting more complex. See how this works for you right now, and let me know if I understood your questions properly.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for the detailed response. The 2nd question is answered but i would like to explain my 1st question again.

Problem statement is not to consider the files which are the most recent. In my current situation, each file contains data for the last 4 days. So everyday I get a rolling 4 day report. for instance, today's report (5th May) will consists of files from 1st May to 4th May. For me to maintain a continuous updated dataset (file name = Manual_dataset), i have to manually copy the rows between 1st May to 4th May in a seperate sheet. This seperate file consists of the latest data till today. So tomorrow (6th May) when I receive the next report, I now have updated data from 2nd to the 5th May. Now I have to open the Manual_dataset and then perform these steps

i) delete existing data from 2nd to 4th May

ii) copy data from 2nd to 5th May from the latest report

I followed your instructions and encountered the following error.

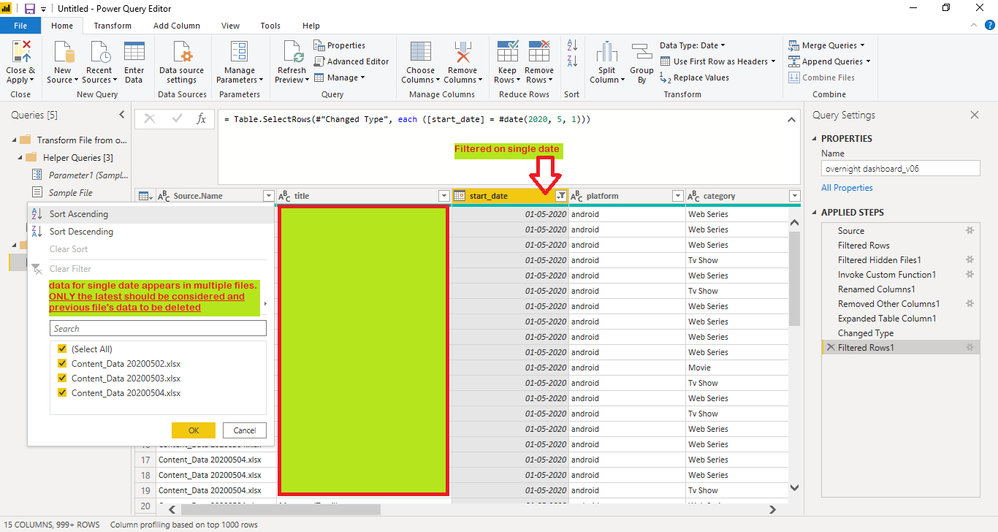

As you will see, for a single date (1st May), there are 3 files that are being reference. It is desired to remove data from the older files and only maintain the one from 4th May.

I hope I have explained my question better. Do let me know if my query is within the realm of being achieved within the free version of PowerBi desktop.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

I'm still not sure I fully understand your problem, but here is the approach I would take.

- Store all files in a single folder.

- Have Power Query grab the latest file, and all data.

- Have Power Query grab the 2nd latest file, and then with an Anti-Merge, keep all data for dates that are not in the file in step 2 above.

- Have Power Query grab the 3rd latest file, and then with an Anti-Merge, keep all data for dates that are not in the files in steps 2 and 3 above.

- repeat step 4 until you have all the data you need.

If I'm still not understanding, let me know.

If you need help with implementing the above logic, I'll need sample files to mess with. See links below to facilitate that.

How to get good help fast. Help us help you.

How to Get Your Question Answered Quickly

How to provide sample data in the Power BI Forum

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thank you for the response. however, i do not think this will work since this process has to be done on a daily basis. I am trying to look for ways to make it as less manual as possible.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The method I proposed is 100% automatic once you know how much data you need.

If you could be really clear with some sample data, as has been requested, we can more easily help. Again, links below for supplying sample data.

How to get good help fast. Help us help you.

How to Get Your Question Answered Quickly

How to provide sample data in the Power BI Forum

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, the general answer to this is to use a Folder query. Depending on what transformations you do you may be able to get away with having additional columns in some files but it is not idea. Power Query is amazing but it is not magic, it can't simply *poof* away all manner of awful data. @ImkeF and @edhans might have some ideas.

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the response Greg. Would you be able to shed some light on Folder Query?

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.