
Dataflows deployed from a Deployment Pipeline - invalid data source

I have created a dataflow that has a single data source (a SQL database). Here it is in lineage view in my Dev workspace.

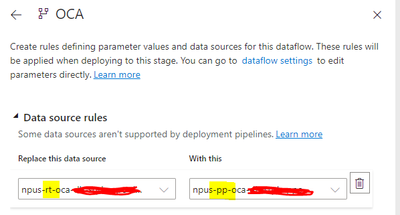

I am using a Power BI Deployment Pipeline to deploy that dataflow from Workspace Dev to Workspace Test. A deployment rule changes the data source from the Dev server and database specified in Workspace Dev to a different Test server and database in Workspace Test. (In various labels on these screenshots, you'll see "rt" in the naming convention, which represents Dev, and "pp", which represents Test.)

This is the rule I've defined:

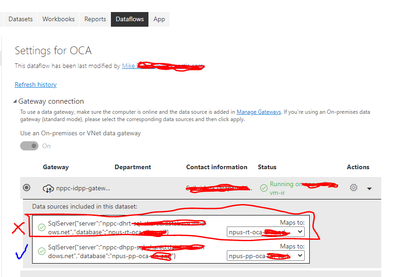

When I look at the deployed dataflow in Workspace Test, it now shows lineage for two data sources, both Dev and Test.

OCA Dataflow lineage in Test workspace

The top SQL Server database is the Dev connection (unused and invalid), the bottom one is the Test connection.

When I export the JSON of the dataflow in Workspace Test, it shows only a single data source, and it correctly points to the Test database.
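One way to double-check what the export contains is to scan it for SQL connections with a small script. The following is a minimal Python sketch, not an official tool. It assumes the exported JSON keeps the Power Query M script under `"pbi:mashup"` → `"document"`, and that the source is written as a literal `Sql.Database("server", "database")` call. The function name, server names, and sample export are hypothetical.

```python
import json
import re

# Matches Sql.Database("server", "database" ...) calls with literal string arguments.
SQL_DATABASE_CALL = re.compile(r'Sql\.Database\(\s*"([^"]+)"\s*,\s*"([^"]+)"')


def sql_sources_in_export(export_text: str) -> set[tuple[str, str]]:
    """Return the distinct (server, database) pairs found in the exported M script."""
    model = json.loads(export_text)
    # Assumption: the M script lives under "pbi:mashup" -> "document".
    document = model.get("pbi:mashup", {}).get("document", "")
    return set(SQL_DATABASE_CALL.findall(document))


# Hypothetical export, reduced to the fields this sketch reads.
sample_export = json.dumps({
    "name": "Example dataflow",
    "pbi:mashup": {
        "document": (
            "section Section1;\r\n"
            "shared Orders = let\r\n"
            '  Source = Sql.Database("pp-server.example.net", "pp-db")\r\n'
            "in\r\n"
            "  Source;"
        )
    },
})

print(sql_sources_in_export(sample_export))
```

If the server or database is supplied through a Power Query parameter rather than a literal string, this pattern will not match, and the parameter values would need to be inspected instead.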

This looks like a bug in the deployment pipeline.

The problem for me is the gateway configuration for the SQL database. Due to company security policies, the gateway configured for the Test environment should only have access to the Test Azure SQL Server instances in a virtual network, not the Dev Azure SQL Server instances. However, the data source configuration for the Test dataflow requires me to map *both* data sources to the same gateway. Since the Dev database isn't actually used, I can configure a dummy data source in the gateway for the Dev instance, and because it never gets queried, it won't fail. The result, though, is that the Test gateway appears to have both Dev and Test data sources.

OCA dataflow gateway config TEST

If the dataflow lineage were correct, the first data source wouldn't be there at all, but I have to configure this (invalid and unused) data source in the gateway in order to map both data sources to the same gateway.

What I'm *expecting* is that the dataflow deployed into the Test workspace has only one data source in its lineage, the one that is updated by the deployment rule. The original data source should not be present in the lineage of the dataflow.
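That expectation can be written down as a simple check. The sketch below assumes a payload shaped like the response of the Power BI REST API "Get Dataflow Data Sources" call, that is, a `value` list whose items carry `connectionDetails` with `server` and `database`. Whether that endpoint reports the same list as the lineage view is not confirmed here. The helper name and all server and database names are hypothetical.

```python
def unexpected_sources(payload: dict, expected: set[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return (server, database) pairs in the payload that are not in the expected set."""
    # Compare case-insensitively, since SQL Server host names are not case-sensitive.
    expected_lower = {(server.lower(), database.lower()) for server, database in expected}
    stale = []
    for source in payload.get("value", []):
        details = source.get("connectionDetails", {})
        pair = (details.get("server", "").lower(), details.get("database", "").lower())
        if pair not in expected_lower:
            stale.append(pair)
    return stale


# Hypothetical payload mirroring the situation described: Dev ("rt") and Test ("pp") both listed.
sample_payload = {
    "value": [
        {
            "datasourceType": "Sql",
            "connectionDetails": {"server": "rt-server.example.net", "database": "rt-db"},
        },
        {
            "datasourceType": "Sql",
            "connectionDetails": {"server": "pp-server.example.net", "database": "pp-db"},
        },
    ]
}

stale = unexpected_sources(sample_payload, {("pp-server.example.net", "pp-db")})
print(stale)
```

An empty result would mean the Test dataflow lists only the data source set by the deployment rule. Any entry returned is a leftover, like the Dev connection described above.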

Mike
