- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- latest transaction rows

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

latest transaction rows

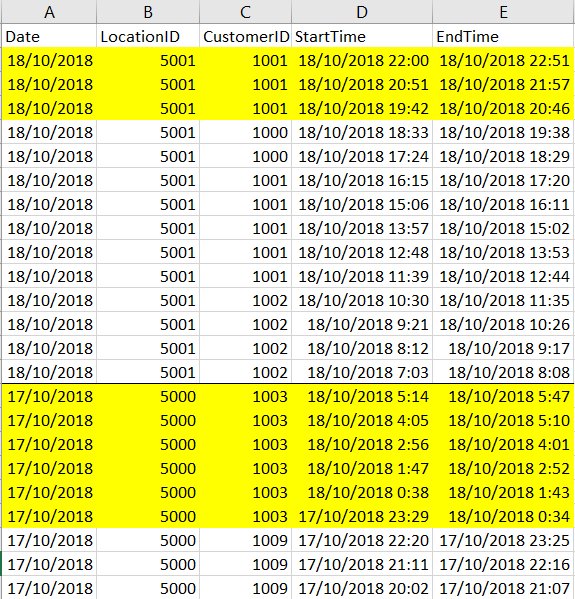

So I have the following dataset which has daily transactions for each location per customer. Each day will have multiple locations and they wont be grouped but for ease of understanding I have only shown one location and grouped it in descending order of StartTime column. So basically I want to be able to choose the section highlighted in yellow for each day which is equal to the latest transaction records per location whenever there is a change in CustomerID. I cannot group it by CustomerID because on the 18/10/2018 there are two transactions from Customer 1000 which are between the transactions by customer 1001. Hence after two transactions by Customer 1000 there are three transactions by Customer 1001 which is what I want to isolate. Hope it is clear.

The measure Im looking for is the average duration for the latest transactions which is average of (EndTime - StartTime). The measure is the easy bit but isolating the records is proving difficult. Hope someone has a solution !

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are going to need to modify your table a little bit and add an additional 'flag' column in order to make the DAX really easy.

The modification is to be done in Power Query as per below. You just need to modify the Source step to link it to your real source:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("ldRbisMwDAXQrQz5LlRXDz+0ldL9b6MxM32MBKb6MijixI6vcrsdoCvGlQnjuBxGhHPBc3k++mF2olQyHPfL1wSt/v8luPUCgenKSdW2I8KuMVwkqTIqRHfWpPKsHKQ5LKtUIcypZbVyI5D1+ZPKFYJdR1alQsBlZlV3RAgByCVe0qlagZjOcV/kvE1WEIYjmdimO7R3J4kkvYPZP4Dfw8Z2c2isaC8A6mSJpAJw/hJaIlEAsDYcSS4AtGY5kvIN0P/eJ2uUo6k7YQaB1yQn1CoE1iRHFa1CkL8C9lbpjMP9AQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Date = _t, LocationID = _t, CustomerID = _t, StartTime = _t, EndTime = _t]),

ChangedType = Table.TransformColumnTypes(Source,{{"Date", type date},{"LocationID", Int64.Type}, {"CustomerID", Int64.Type}, {"StartTime", type datetime}, {"EndTime", type datetime}}),

SortTable = Table.Sort(

ChangedType,

{

{"LocationID", Order.Ascending},

{"StartTime", Order.Ascending}

} ),

GroupTable = Table.Group(

SortTable,

{"LocationID", "CustomerID"},

{

//{ "MaxStartTime", each List.Max( [StartTime] ), type datetime },

{"FullTable", each _, type table }

},

GroupKind.Local

),

ReGroupTable = Table.Group( GroupTable, {"LocationID"}, {"MaxDate", each List.Max( Table.Combine( [FullTable] )[StartTime] ), type table }, GroupKind.Global ),

MergeTables = Table.NestedJoin( GroupTable, {"LocationID"}, ReGroupTable, {"LocationID"}, "MaxDateTable" ),

AddFlag = Table.AddColumn( MergeTables, "Flag", each if List.Max( [FullTable][StartTime] ) = List.Max( [MaxDateTable][MaxDate] ) then true else false, type logical ),

SelectCols = Table.SelectColumns(AddFlag,{"FullTable", "Flag"}),

ExpandedFullTable = Table.ExpandTableColumn(SelectCols, "FullTable", {"Date", "LocationID", "CustomerID", "StartTime", "EndTime"}, {"Date", "LocationID", "CustomerID", "StartTime", "EndTime"}),

Final = Table.TransformColumnTypes(ExpandedFullTable, {{"Date", type date}, {"LocationID", Int64.Type}, {"CustomerID", Int64.Type}, {"StartTime", type datetime}, {"EndTime", type datetime}})

in

Final

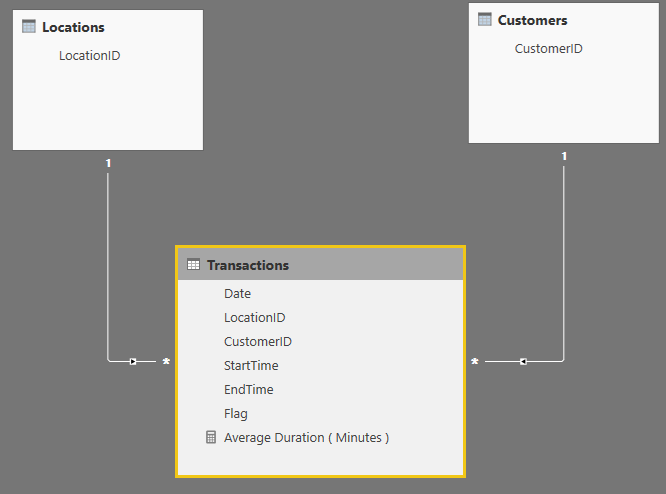

Afterwards, I have normalized the LocationID and CustomerID and built the below model:

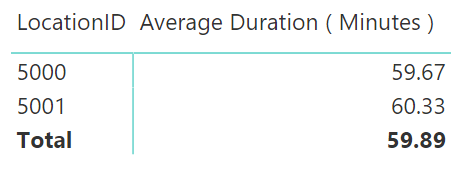

Then the dax becomes really easy:

Average Duration ( Minutes ) =

AVERAGEX (

CALCULATETABLE ( Transactions, Transactions[Flag] = TRUE () ),

( Transactions[EndTime] - Transactions[StartTime] )

* 1440

)

Let me know if you need me to share the .pbix

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Capstone

could you share a version I could easily copy / paste? thanks!@

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are going to need to modify your table a little bit and add an additional 'flag' column in order to make the DAX really easy.

The modification is to be done in Power Query as per below. You just need to modify the Source step to link it to your real source:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("ldRbisMwDAXQrQz5LlRXDz+0ldL9b6MxM32MBKb6MijixI6vcrsdoCvGlQnjuBxGhHPBc3k++mF2olQyHPfL1wSt/v8luPUCgenKSdW2I8KuMVwkqTIqRHfWpPKsHKQ5LKtUIcypZbVyI5D1+ZPKFYJdR1alQsBlZlV3RAgByCVe0qlagZjOcV/kvE1WEIYjmdimO7R3J4kkvYPZP4Dfw8Z2c2isaC8A6mSJpAJw/hJaIlEAsDYcSS4AtGY5kvIN0P/eJ2uUo6k7YQaB1yQn1CoE1iRHFa1CkL8C9lbpjMP9AQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Date = _t, LocationID = _t, CustomerID = _t, StartTime = _t, EndTime = _t]),

ChangedType = Table.TransformColumnTypes(Source,{{"Date", type date},{"LocationID", Int64.Type}, {"CustomerID", Int64.Type}, {"StartTime", type datetime}, {"EndTime", type datetime}}),

SortTable = Table.Sort(

ChangedType,

{

{"LocationID", Order.Ascending},

{"StartTime", Order.Ascending}

} ),

GroupTable = Table.Group(

SortTable,

{"LocationID", "CustomerID"},

{

//{ "MaxStartTime", each List.Max( [StartTime] ), type datetime },

{"FullTable", each _, type table }

},

GroupKind.Local

),

ReGroupTable = Table.Group( GroupTable, {"LocationID"}, {"MaxDate", each List.Max( Table.Combine( [FullTable] )[StartTime] ), type table }, GroupKind.Global ),

MergeTables = Table.NestedJoin( GroupTable, {"LocationID"}, ReGroupTable, {"LocationID"}, "MaxDateTable" ),

AddFlag = Table.AddColumn( MergeTables, "Flag", each if List.Max( [FullTable][StartTime] ) = List.Max( [MaxDateTable][MaxDate] ) then true else false, type logical ),

SelectCols = Table.SelectColumns(AddFlag,{"FullTable", "Flag"}),

ExpandedFullTable = Table.ExpandTableColumn(SelectCols, "FullTable", {"Date", "LocationID", "CustomerID", "StartTime", "EndTime"}, {"Date", "LocationID", "CustomerID", "StartTime", "EndTime"}),

Final = Table.TransformColumnTypes(ExpandedFullTable, {{"Date", type date}, {"LocationID", Int64.Type}, {"CustomerID", Int64.Type}, {"StartTime", type datetime}, {"EndTime", type datetime}})

in

Final

Afterwards, I have normalized the LocationID and CustomerID and built the below model:

Then the dax becomes really easy:

Average Duration ( Minutes ) =

AVERAGEX (

CALCULATETABLE ( Transactions, Transactions[Flag] = TRUE () ),

( Transactions[EndTime] - Transactions[StartTime] )

* 1440

)

Let me know if you need me to share the .pbix

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you, that was brilliant ! I applied the solution to my real data and it worked like a charm ![]() . The only addition I had to make was in the Merge Tables step where I had to add the "Date" along with the "LocationID"

. The only addition I had to make was in the Merge Tables step where I had to add the "Date" along with the "LocationID"

MergeTables = Table.NestedJoin( GroupTable, {"LocationID","Date"}, ReGroupTable, {"LocationID","Date"}, "MaxDateTable" )I definitely learnt a few tricks along the way

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 110 | |

| 100 | |

| 80 | |

| 64 | |

| 58 |

| User | Count |

|---|---|

| 148 | |

| 111 | |

| 94 | |

| 84 | |

| 67 |