- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- We found extra characters at the end of JSON input...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We found extra characters at the end of JSON input.

Getting this error when doing a "from the web"

Details: "We found extra characters at the end of JSON input."

When I send the JSON to a validator it says that it is fine. How can I get more specific details on why it is complaining? There is no further detail in the trace file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I encountered the same error and found out that I made the mistake with my powershell script that collected the json data. I addded multiple results to the same file, which works quite ok with CSV files but not with Json

Out-File $filepath -Append

So I solved this by creating a file for every API call and then just import a whole folder

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This may help someone. For reference, my JSON files were extracted from Azure Activity Log exports.

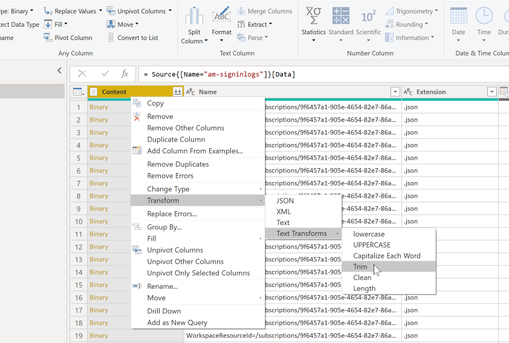

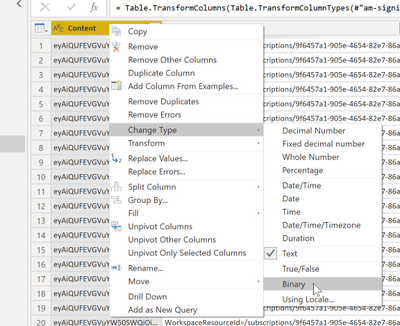

My solution was to select the Binary column, Transform -> Trim Text then set the type back to Binary. Following this I could click on the Binary and see the JSON without experiencing the 'We found extra characters at the end of JSON input.' error.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @davidniw333

I´m experiencing the same with Azure Activity log export to Blob Storage Account and JSON files in Line format.

The expoert job is setup as described here:

https://learn.microsoft.com/en-us/azure/azure-monitor/logs/logs-data-export?tabs=portal#storage-acco...

Now I want to read the multiple JSON files into PowerBI for further analysis.

Is it this step you are using for the TRIM?

When vieweing the contect of the Text now, we see that the Column Name and content has been put togheter:

And number of columns has gone from 76 to 146.

And is it this step you are using to convert back to Binary?

When I then on the Binary column press the "Combine Files" button in the header of the Binary Column I get asked to decode the Text:

What kind of setting did you use on this?

If I use the default suggested setting and the "Colon" as delimiter, the output seems to be very strange, and looks much the same as in the screen shot above.

This is how it should have looked like (with correct column names and 76 columns):

This is when I look at one individual JSON file and the content. It all looks the way it should.

But now, it would seem like the Column name is part of the content of each field (either indivdual or as part of a value:

it will be possible to do a extended amount of transformation on the data, but that seems very uncecessary and there should be a way to actually get the data into PowerBI withouth any work.

Have you managed to make it work and that the data looks as expected?

Regards

Oddmar

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I had same problem. Then I deleted the optional encoding parameter (I had it set at 1200) within the Json.Document function. That solved the error.

Json.Document(jsonText as any, optional encoding as nullable number)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I faced the same error message in Excel - Power Query editor.

A reason for that issue was wrongly selected VPN. After switching to a correct one that message is gone and a query is executed successfylly.

Hope it may help to someone )

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In my query, I don't select any VPN. Can you explain further? thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I didn't use any syntax in my query related to VPN. It is just a network setting.

In my case, a json data was coming from the web. Here is a simplified version of my query:

let

Url = "https://somesite.com/content.json",

RawData = Web.Contents(Url),

Json = Json.Document(RawData),

Value = List.First(Record.ToList(Json))

ConvertedToTable = Table.FromList(Value, Splitter.SplitByNothing(), null, null, ExtraValues.Error)

in

ConvertedToTable

This query returns a table only for one VPN setting while for another one it returns the error: DataFormat.Error: We found extra characters at the end of JSON input.

It was difficult to identify the root of the issue as simple typing in browser url returns json even with connected to "wrong" VPN.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Very weird ......the same i am facing issue but this is all of a sudden . I am trying to call api from table in power bi and it was working fine like 2 weeks back and now all of sudden getting this issue of "extra characters found at the end of JSON" . Is it like any rules of JSON parser changed or anything ... Unfortunately couldnt find a solution for this ... 😞 ... Can anyone please help me with this if you have solved this issue ..... My query goes like

(Name1 as text,Name2 as text) =>

let

Source = Json.Document(Web.Contents("URL",[Headers=[Accept="Basic base64- PAT ]])),

#"Converted to Table" = Record.ToTable(Source),

Value = #"Converted to Table" {2}[Value],

#"Pivoted Column" = Table.Pivot(#"Converted to Table", List.Distinct(#"Converted to Table"[Name]), "Name", "Value")

in

#"Pivoted Column"

Please help me with this .....really struck....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This might not help everyone but thought I'd share:

I wrapped my JSON code in brackets ([...]) and it worked.

I was getting this error and couldn't find a solution. My JSON was formatted like the following:

{"name":"value"...},

{"name":"value"...}

and PBI kept throwing this error. I just added brackets and it worked like the following:

[

{"name":"value"...},

{"name":"value"...}

]

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, When I want to refresh my PowerBI query I getting following error message. Could you please help me?

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Continued problem w/ IoT data streamed to Azure Blob store (then get "we found extra...") when attempting to combine JSON files in PowerBI.

Here's how I solved it. Works fine for me now.

1) Don't use the Combine (double arrow that gives you the error) feature. Instead, make a query that trains a pattern on one of your json files. To do this, just make a new query against your source:

- Pick the first Json file

- Click the binary

- You should see a file icon, right click and "convert to TXT"

- Now you should see a single column, with JSON data <- but smooshed all in one col.

- Convert that column to JSON (right click col, convert, JSON)

- Now you have one good file export pattern that you can use as a function for all your others.

- Now, edit this query in the Advanced editor and include the 'let' statement that turns this query into a function (mine is below)

- Replace the example file name with the variable you created.

- Click OK and try it out - put a full path to your JSON file in and see if you can invoke it correctly.

- If it works -> now create another query and source all your JSON files. Use the "add column" -> "invoke custom function" and fill in the name of your function and click the file path as the function's source.

- It will run your function over and over - for every file and append them.

Here's my FunctionCode:

let

FxFixJson = (jsonfilepathname as text) =>

let

Source = AzureStorage.Blobs("https://MYBLOBSTOREACCT.blob.core.windows.net/"),

#"Expanded Data" = Table.ExpandTableColumn(Source, "Data", {"Content", "Name"}, {"Data.Content", "Data.Name"}),

#"Filtered Rows" = Table.SelectRows(#"Expanded Data", each ([Data.Name] = jsonfilepathname)),

#"Data Content" = #"Filtered Rows"{0}[Data.Content],

#"Imported Text" = Table.FromColumns({Lines.FromBinary(#"Data Content",null,null,1252)}),

#"Parsed JSON" = Table.TransformColumns(#"Imported Text",{},Json.Document),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Parsed JSON", "Column1", {"deviceId", "messageId", "temperature", "humidity", "EventProcessedUtcTime", "PartitionId", "EventEnqueuedUtcTime", "IoTHub"}, {"deviceId", "messageId", "temperature", "humidity", "EventProcessedUtcTime", "PartitionId", "EventEnqueuedUtcTime", "IoTHub"})

in

#"Expanded Column1"

in

FxFixJson

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your response.

Below example helped me to fix the error but it only worked for one record.

When i tried to apply FxFixJson function i am confused with what the (jsonfilepathname as text) should be?

let

Source = AzureStorage.Blobs("XXXX"),

wrfm1 = Source{[Name="XXXX"]}[Data],

#"Expanded Data" = Table.ExpandTableColumn(Source, "Data", {"Content", "Folder Path", "Name"}, {"Data.Content", "Data.Folder Path", "Data.Name"}),

#"Data Content" = #"Expanded Data"{0}[Data.Content],

#"Imported Text" = Table.FromColumns({Lines.FromBinary(#"Data Content",null,null,1252)}),

#"Parsed JSON" = Table.TransformColumns(#"Imported Text",{},Json.Document),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Parsed JSON", "Column1", {"basicException", "internal", "context"}, {"Column1.basicException", "Column1.internal", "Column1.context"}),

#"Expanded Column1.internal" = Table.ExpandRecordColumn(#"Expanded Column1", "Column1.internal", {"data"}, {"internal.data"}),

#"Expanded Column1.context" = Table.ExpandRecordColumn(#"Expanded Column1.internal", "Column1.context", {"application", "data", "device", "user", "session", "operation", "location", "custom"}, {"context.application", "context.data", "context.device", "context.user", "context.session", "context.operation", "context.location", "context.custom"})

in

#"Expanded Column1.context"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

Just wan tto emphasis for the developers to solv this

I get this issue independent of three sources of JSON files

Must be something that can be fixed for everyone in the provided parsing of JSON in PBI

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm having same issue when loading JSON from folder. Doing the first time works ok, but when updating, it appears "We found extra characters at the end of JSON INPUT.

Please correct this old error from 2016

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I was able to enter an advanced query to resolve my problem.

I had to replace carriage returns with commas and surround the entire thing with a bracket to form a valid json doc.

let

Source = DataLake.Contents("https://SOMETHINGHERE.azuredatalakestore.net"),

TwitterData = Source{[Name="TwitterData"]}[Content],

#"TwitterStream-Output_0_9999 json" = Text.Replace(Text.Combine({"[", Text.FromBinary(TwitterData{[Name="TwitterStream-Output_0_9999.json"]}[Content]), "]" }), "#(cr)", ","),

#"Imported JSON" = Json.Document(#"TwitterStream-Output_0_9999 json",1252),

#"Imported JSON1" = #"Imported JSON"{0}

in

#"Imported JSON1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

does this have a solution? i have same error

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does anyone have solution to this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm stuck with the same problem. I'm trying to build a Power BI custom connector, and I'm not even sure that the request by Web.Contents is executed, since I've tried inserting the json as a string in the Power Query and that works, but if I place the same string as a json-file on App Service or as Blob it fails. I'm stranded with with an option on how to trace/debug what really happens.

In my case it's working beautifully in Power BI Desktop, but for some reason I can't get it to run in the Visual Studio for the custom connector project.

Any ideas and feedback is most welcome as I expect this to be a simpel error or misconfiguration on my side, more than an error in the SDK.

Brgds Brian

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am getting the same error while importing Azure BLOB files (Created by Application Insights and stored in Azure Blob Storage) into powerbi and transforming JSON. Please help!

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 114 | |

| 100 | |

| 78 | |

| 75 | |

| 52 |

| User | Count |

|---|---|

| 144 | |

| 109 | |

| 108 | |

| 88 | |

| 61 |