Storing and using information from a dynamic data source using PBI desktop.

Hello,

This is something I worked on for a couple of months and have been using successfully for 3 months, and I would now like to share it with the community. If you want to know where this comes from, please visit this post.

I'm going to explain the whole process in as much detail as possible.

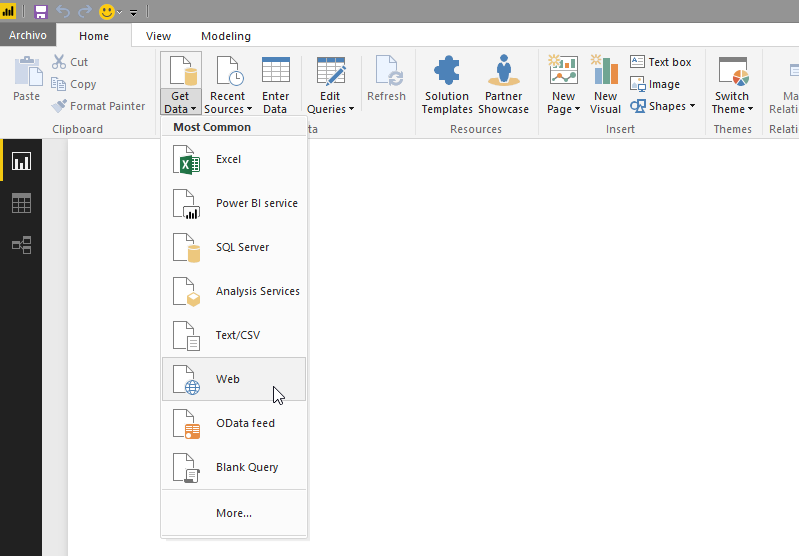

1) Connect Power BI to a data source.

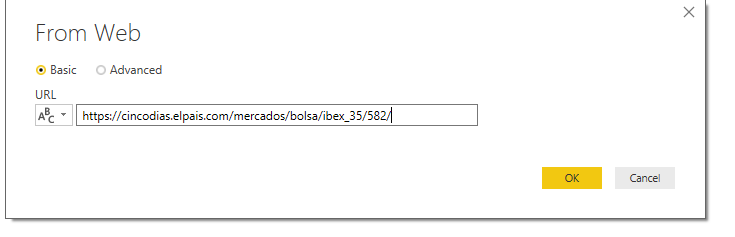

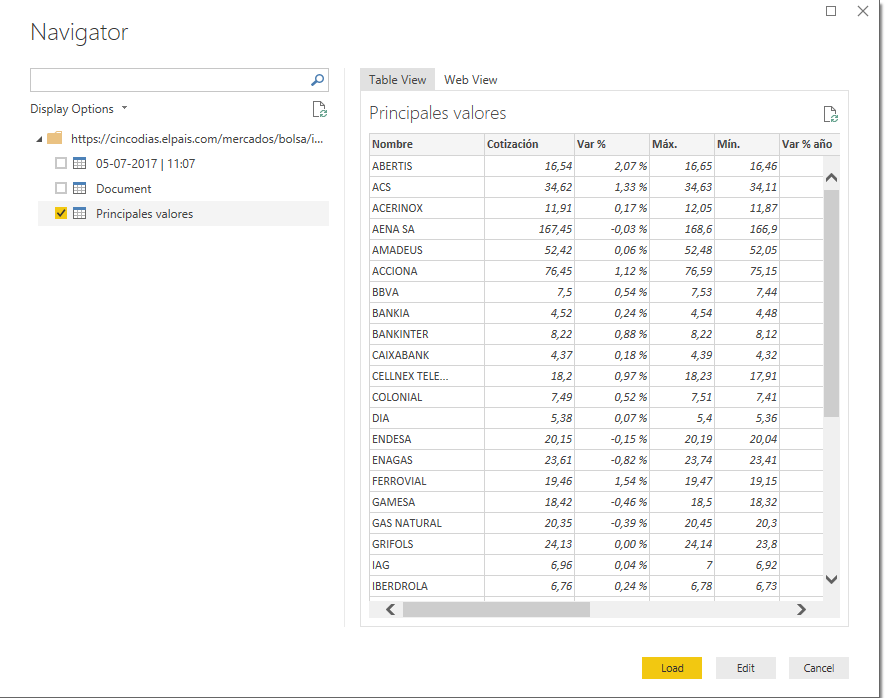

For this example, I’m going to connect to a web page that publishes information about the IBEX 35.

After that, we will create a column: Refreshment date = NOW()

*If your regional settings are not English, I recommend disabling time intelligence under Options, Data Load.

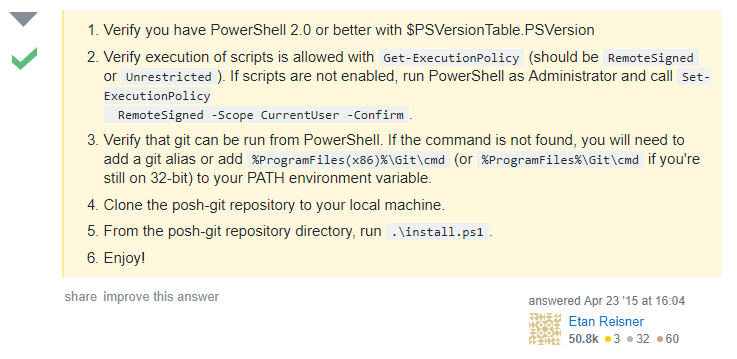

2) Prepare the system to use the script.

I use a virtual machine for this, but it can be done from any PC that meets the requirements.

First, you will need to install PowerShell’s Package Manager. https://www.microsoft.com/en-us/download/details.aspx?id=51451

*Edited: It seems this is not necessary on Windows 10.

Second, run PowerShell as an admin, and follow these steps. (from https://stackoverflow.com/questions/29828756/the-term-install-module-is-not-recognized-as-the-name-o...)

Third, download and install SQL_AS_AMO.msi and SQL_AS_ADOMD.msi from https://www.microsoft.com/download/details.aspx?id=52676

Now, you are ready to edit the script.
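If you are not sure which PowerShell version you have, or whether `Install-Module` is already there (which would explain why the Package Manager download seems unnecessary on Windows 10), a quick check along these lines may help. This is only a sketch and not part of the original script; the variable name `$installModule` is just for illustration.

```powershell
# Show the PowerShell version of the current session
$PSVersionTable.PSVersion

# Look for the Install-Module command without raising an error if it is missing
$installModule = Get-Command -Name Install-Module -ErrorAction SilentlyContinue

if ($installModule) {
    Write-Host "Install-Module is available, so the Package Manager download can probably be skipped."
} else {
    Write-Host "Install-Module was not found, install the Package Manager first."
}
```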

3) Edit the script.

You can download it from my OneDrive. The instructions are inside the script.

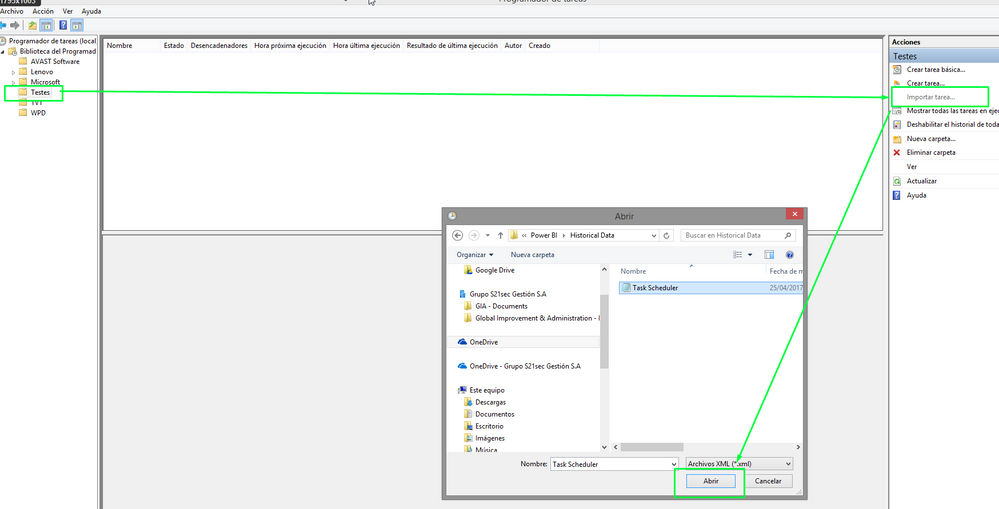

4) Use the task scheduler.

Since we don’t want to run the script manually every day (weekends included), let’s schedule that task!

Download this file first, then open the Task Scheduler.

Then select where you want to store the task, and import it.

I have it set to run at 10 PM every day, but you can change that. Then go to Actions and update the file path so it points to the PowerShell script.

After that, I’d run the task once manually, just to check that everything is working fine.
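If the task file can't be downloaded, the same kind of daily task can probably be created from an elevated PowerShell session with the built-in ScheduledTasks cmdlets. This is a rough sketch, not the task definition from the download: the function name `Register-ExporterTask`, the task name and the script path are all made-up placeholders.

```powershell
# Hypothetical helper that registers a daily task running the exporter script.
function Register-ExporterTask {
    param(
        [string]$ScriptPath = "C:\Scripts\PBI_Exporter.ps1",  # placeholder path
        [string]$TaskName   = "PBI_Exporter_Daily",           # placeholder name
        [string]$At         = "22:00"                         # 10 PM, as in the guide
    )

    # Run powershell.exe with the exporter script as its argument
    $action = New-ScheduledTaskAction -Execute "powershell.exe" `
        -Argument "-NoProfile -ExecutionPolicy Bypass -File `"$ScriptPath`""

    # Fire once a day at the chosen time
    $trigger = New-ScheduledTaskTrigger -Daily -At $At

    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger
}
```

Calling `Register-ExporterTask -ScriptPath "C:\path\to\PBI_Exporter.ps1"` would then create the task, and you can still open it in the Task Scheduler afterwards to adjust it.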

5) Add the stored information to the data model.

Now we can go back to Power BI. At this point, I prefer to make a copy of the pbix file under a different name, so that the one used by the script stays as simple as possible.

First, get data from a folder. It should be the folder named after your table, inside the “Daily” folder that the script created on your desktop.

Then, click on Edit, Combine & Edit.

We check that everything is in order…

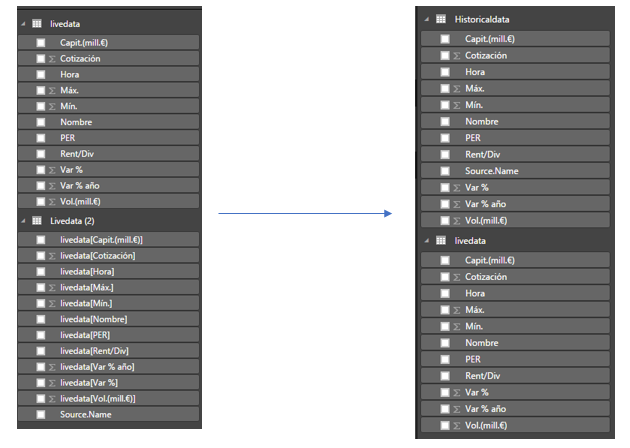

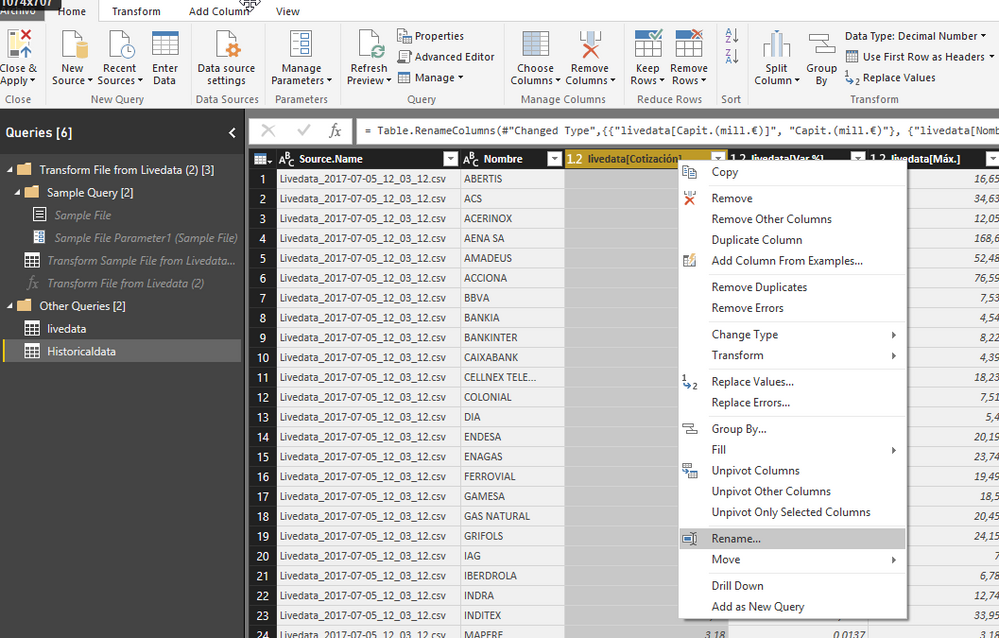

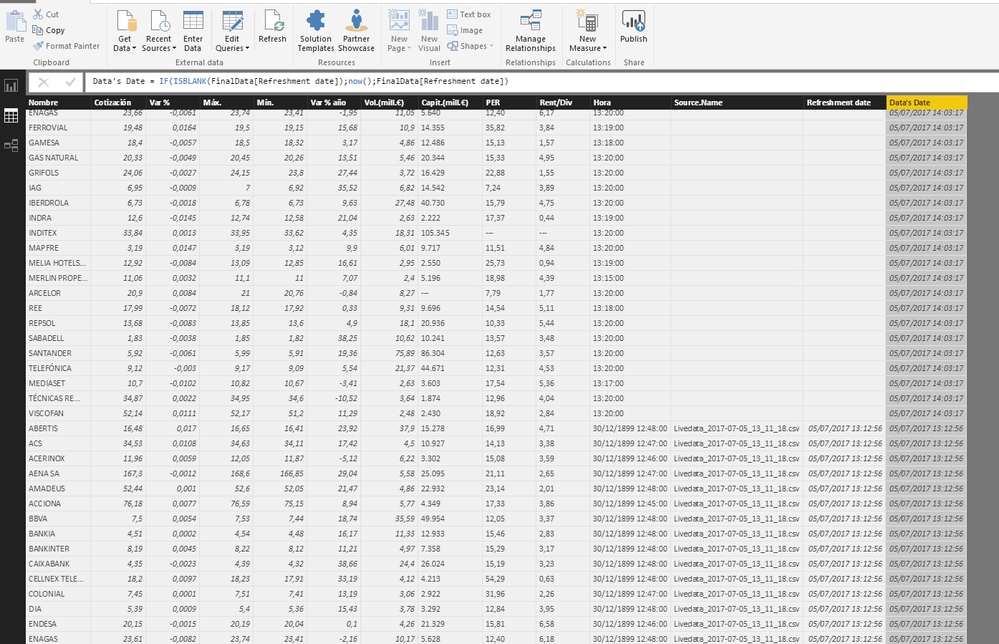

Now we have two tables: one with the information from the dynamic feed, and one with the information that we have stored. Next, we need to rename the (2) table and its columns.

I recommend doing it from the Query Editor.

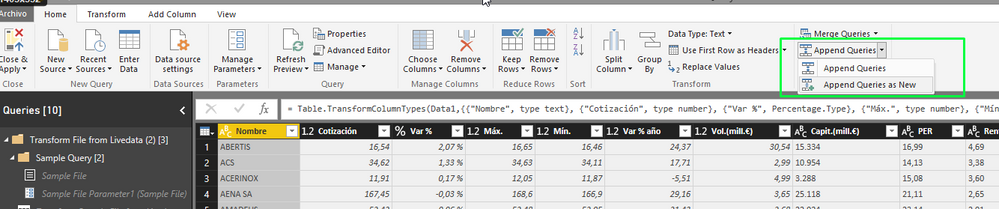

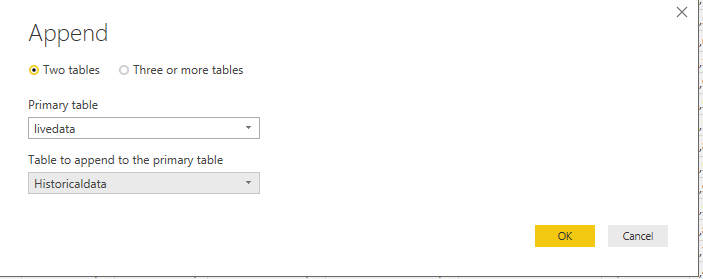

6) Appending Queries

Now it's time to put all the information together. To do that, choose “Append Queries as New” in the Query Editor.

Now that we have everything in one table, I recommend renaming the appended table (FinalData in the formulas below) and hiding the other two tables in the report view.

After that, we will create a column: Data's Date = IF(ISBLANK(FinalData[Refreshment date]); NOW(); FinalData[Refreshment date])

That way, we will be able to know when that data was stored.

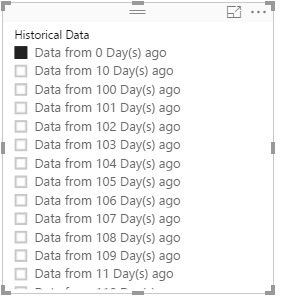

In my case, since I need to use both the “live” data and the historical data, I use another column to tell them apart:

Historical Data = "Data from " & DATEDIFF(FinalData[Data's Date (Text)]; NOW(); DAY) & " Day(s) ago"

When you use this script to store historical data, the original ids are repeated in every stored snapshot, so in some cases they are no longer unique and can't be used to establish relationships between tables. What I do to solve that is to add the date to the ids:

xxxID = Table1[_xxxid_value] & FORMAT(Table1[Data's Date]; "MM/DD/YYYY")

If you are only using Power BI Desktop, you don’t need to do anything else, but if you want to publish the report to the Power BI service, you will need to set up a data gateway.

I hope you’ve enjoyed this long guide. If you have any doubts or questions, or would like to add something, please feel free to send me an email at salva.gm@outlook.es

I would also like to give special thanks to Jorge Diaz for his guidance and support whilst developing this; without him, this would probably have ended up as just a “happy idea”. Thanks also to Imke, from thebiccountant.com, for pointing us in the right direction.

Hey everyone!

I've contacted Salvador via email and he has sent me an updated link for the scripts in steps 3 and 4: here's the link to the folder.

Sorry, I am having trouble following your steps, as they are not quite clear at some points.

Can I do the same for data from Salesforce?

How did you get the Combine & Edit option?

Hello Koluman,

You can do the same with data from any source, since what you are doing is capturing data that is already loaded in Power BI Desktop.

The Combine & Edit option appears if you select a folder as your data source. If you select, for example, an Excel file, you will only have Edit or Load.

Regards,

Salvador.

@Salvador how does the 'HistoricalData' table get created? It was not mentioned anywhere in the PowerShell script or in the steps you wrote.

Hello @Anonymous,

It's explained in step 5. Basically, it comes from connecting to the folder where the CSV files that record the historical data are stored.
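Before pointing Power BI at that folder, it can help to confirm that the exporter has actually written files there. The sketch below assumes the folder layout used by the script (a `Daily` folder under `$path`, with one subfolder per table); the function name `Get-DailyExports` is made up for this example.

```powershell
# Hypothetical helper: lists the CSV files the exporter wrote for one table.
function Get-DailyExports {
    param(
        [string]$Root,   # same value as $path in the script
        [string]$Table   # one of the names in $TABLES
    )

    # The script writes to <Root>\Daily\<Table>\
    $folder = Join-Path (Join-Path $Root "Daily") $Table

    # Oldest file first; an empty result means nothing has been exported yet
    Get-ChildItem -LiteralPath $folder -Filter *.csv -ErrorAction SilentlyContinue |
        Sort-Object LastWriteTime
}
```

For the example in this guide, that would be something like `Get-DailyExports -Root "C:\Users\sgomez\Desktop" -Table "Livedata" | Select-Object Name, LastWriteTime`.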

Regards,

Salvador.

Hi,

Windows was reinstalled on my computer and now I have an issue.

The script opens the Power BI file that needs to be refreshed and exported, but something doesn't work and Power BI stays open.

Below is the PBI exporter log:

Executing PBI_Exporter.ps1 at 2019-07-16_13_58_02

Launching Power BI

Waiting 30 seconds for PBI to launch

Assuming PBI is launched and ready now

Connecting to PBI using AMO

Exception calling "LoadFile" with "1" argument(s): "The system cannot find the file specified. (Exception from HRESULT: 0x80070002)"

The system cannot find the file specified. (Exception from HRESULT: 0x80070002)

Script finished with errors at 07-16-2019_13_58_34

Closing the connection

You cannot call a method on a null-valued expression.

Any idea what I have to do?

thanks,

Cosmin

Hi @Anonymous,

Yup, but you have to make those CSV files available so they can be uploaded to the service. I used a data gateway ^^

@Salvador Both of your files (from steps 3 and 4) show up as unavailable. Could you reupload them, please? Or please let me know if you'd rather send them to my email?

Sorry for the bother! This looks fantastic and would really help me a lot. Thank you very much for sharing!

Hello,

Thank you so much for the post. This is really helpful. I am trying to create an inventory table so I can keep historical data (it will replace everything in my inventory table in the database). I was following your steps and I could not get the script. It says it no longer exists.

I would really appreciate it if you could re-upload the scripts or give us the ones we need. Thank you so much.

Hello,

Just updated the download link, it seems it had expired :S

Regards,

Salva.

Thank you for re-uploading everything!

I cannot download your script. Could you please advise?

+1 for no script available. Please re-upload at your convenience. Thank you!

Hi,

Just tried the link, and downloaded without issues 😞

I'll paste the code here anyways ^^

#################################################################################################################

# PBI_Exporter.ps1 #

# #

# This script exports selected data tables from Power BI and generates csv files that can be reimported in #

# Power BI in order to generate historic daily and monthly data. The script also generates a log file of all #

# exports and notifies execution status via email. #

# #

# Authors: Jorge Díaz #

# Salvador Gomez Salva.gm@outlook.es #

# #

# Credits: #

# Based on https://github.com/djouallah/PowerBI_Desktop_ETL by Mimoune Djouallah https://datamonkeysite.com/ #

# #

# Notes: #

# 1.Edit custom variables to adapt the script to your context #

# 2.Avoid using paths and PBI table names with spaces since this will cause errors in the script #

# #

# #

#################################################################################################################

################## CUSTOM VARIABLES ##################

# Array of PBI tables you want to export

$TABLES = "Livedata"

# The path to your pbix file

$template = "C:\Users\sgomez\Desktop\IBEX.pbix"

# The root path where subdirectories will be created for CSV files to export

$path = "C:\Users\sgomez\Desktop\"

# The Path to PowerBI Desktop executable

$PBIDesktop = "C:\Program Files\Microsoft Power BI Desktop\bin\PBIDesktop.exe"

# The time Needed in Seconds for PowerBI Desktop to launch and open the pbix file, 60 seconds by default, you may increase it for really big files

$waitoPBD = 60

################## HELPER FUNCTIONS ##################

#Log function

Function log_message ($log_message)

{

$log = Join-Path $path "PBI_Exporter_log.txt"

Write-Host $log_message

Add-Content $log $log_message

}

#Calculate script duration

#Thanks to Russ Gillespie https://community.spiceworks.com/topic/436406-time-difference-in-powershell

Function calculate_duration ($date_1, $date_2)

{

# Duration() always gives a positive time span, whatever the order of the two dates

$TimeDiff = (New-TimeSpan -Start $date_1 -End $date_2).Duration()

# Use the total hours so that runs longer than a day are not truncated

$Hrs = [math]::Floor($TimeDiff.TotalHours)

$Mins = $TimeDiff.Minutes

$Secs = $TimeDiff.Seconds

$difference = '{0:00} hours {1:00} minutes and {2:00} seconds' -f $Hrs,$Mins,$Secs

return $difference

}

#This function is used to remove from the exported CSV files extra lines and double quotes inside Power BI text fields which may cause problems when later reimporting the CSV file into Power BI

Function Remove_unwantedChars ($csv_file)

{

$csv_input = [System.IO.File]::ReadAllText($csv_file)

# Replace all double quotes

$csv_output = $csv_input -replace "`"","~DOUBLE~QUOTE~"

# Restore double quotes only for csv separators (",")

$csv_output = $csv_output -replace "~DOUBLE~QUOTE~,~DOUBLE~QUOTE~","`",`""

# Replace all double double quotes with single quote

# Note: export_csv escapes a " with ""

$csv_output = $csv_output -replace "~DOUBLE~QUOTE~~DOUBLE~QUOTE~","`'"

# Restore double quotes at end of line (both "rn and "r and "n)

$csv_output = $csv_output -replace "~DOUBLE~QUOTE~`r`n","`"`r`n"

$csv_output = $csv_output -replace "~DOUBLE~QUOTE~`r","`"`r"

$csv_output = $csv_output -replace "~DOUBLE~QUOTE~`n","`"`n"

# Restore at the start of new line (both rn" and r" and n")

$csv_output = $csv_output -replace "`r`n~DOUBLE~QUOTE~","`r`n`""

$csv_output = $csv_output -replace "`r~DOUBLE~QUOTE~","`r`""

$csv_output = $csv_output -replace "`n~DOUBLE~QUOTE~","`n`""

# Restore the first double quote in the file (the very first character)

$csv_output = $csv_output -replace "^~DOUBLE~QUOTE~", "`""

# Replace any other double quotes by single quotes (these are double quotes originally inside Power BI text fields)

#$csv_output = $csv_output -replace "~DOUBLE~QUOTE~","`'"

#The above is commented because actually if there is any other double quote it should be an error because export_csv escapes " with "" (Check for this situation and raise an exception if needed)

if ($csv_output -like '*~DOUBLE~QUOTE~*') {

throw "Unexpected double quotes found in file $($csv_file), original file is saved unmodified."

}

# Replace all line ends (both for rn and r and n)

$csv_output = $csv_output -replace "`r`n","~LINE~END~"

$csv_output = $csv_output -replace "`r","~LINE~END~"

$csv_output = $csv_output -replace "`n","~LINE~END~"

# Replace line ends finishing with "," with bars (these are extra lines originally inside Power BI text fields)

$csv_output = $csv_output -replace "`",`"~LINE~END~","`",`" | "

# Restore other line ends associated to a double quote separator (these are the real csv line ends)

$csv_output = $csv_output -replace "`"~LINE~END~","`"`r`n"

# Replace all other line ends with bars (these are extra lines originally inside Power BI text fields)

$csv_output = $csv_output -replace "~LINE~END~"," | "

#Overwrite the modifications onto the original file

Set-Content -Path $csv_file -Value $csv_output -Encoding UTF8

}

################## MAIN SCRIPT ##################

Try{

$status ="with undetermined status"

$executionDate = get-date -f yyyy-MM-dd_HH_mm_ss

$executionDate_raw = get-date

$isFirstDayOfMonth = (get-date -f dd) -eq "01"

$isLastDayOfMonth = ((get-date).AddDays(1)).Month -ne (get-date).Month

#Make directory if needed

md -Force $($path) | Out-Null

log_message "***********************************************"

log_message "Executing PBI_Exporter.ps1 at $($executionDate)"

log_message "Launching Power BI"

$app = START-PROCESS $PBIDesktop $template -PassThru

log_message "Waiting $($waitoPBD) seconds for PBI to launch"

Start-Sleep -s $waitoPBD

log_message "Assuming PBI is launched and ready now"

# get the server name and the port of the Power BI Desktop SSAS instance, thanks to Imke http://www.thebiccountant.com/2016/04/09/hackpowerbi/#more-1147

$pathtofile = (Get-ChildItem -Path c:\users -Filter msmdsrv.port.txt -Recurse -ErrorAction SilentlyContinue -Force | sort LastWriteTime | select -last 1).FullName

$port = gc $pathtofile

$port = $port -replace '\D',''

$dataSource = "localhost:$port"

$pathtoDataBase_Name = $pathtofile -replace 'msmdsrv.port.txt',''

$Database_Name = Get-ChildItem -Path $pathtoDataBase_Name -Filter *.db.xml -Recurse -ErrorAction SilentlyContinue -Force

$Database_Name = $Database_Name.ToString().Split(".") | select -First 1

# Connect using AMO thanks for stackexchange :)

log_message "Connecting to PBI using AMO"

[System.Reflection.Assembly]::LoadFile("C:\Program Files (x86)\Microsoft SQL Server\130\SDK\Assemblies\Microsoft.AnalysisServices.tabular.DLL") | Out-Null

$server = New-Object Microsoft.AnalysisServices.tabular.Server

$server.connect($dataSource)

$database = $server.Databases.Item($Database_Name)

#Refreshing Power BI (thanks to Marco Russo http://www.sqlbi.com/articles/using-process-add-in-tabular-models/)

log_message "Trying to refresh Power BI now"

$model = $database.Model

$model.RequestRefresh("Full")

$model.SaveChanges()

$server.Disconnect()

# Connect using ADOMD.NET

log_message "Connecting to PBI using ADOMD.NET"

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices.AdomdClient") | Out-Null

# Create the first connection object

log_message "Querying datasource $($dataSource) for database $($Database_Name)"

$con = new-object Microsoft.AnalysisServices.AdomdClient.AdomdConnection

$con.ConnectionString = "Datasource=$dataSource; Initial Catalog=$Database_Name;timeout=0; connect timeout =0"

$con.Open()

foreach ($element in $TABLES) {

# Create a command and send a DAX query that returns the whole table

$command = $con.CreateCommand()

$command.CommandText = "evaluate $($element)"

#$Reader = $Comand.ExecutedataReader()

$adapter = New-Object -TypeName Microsoft.AnalysisServices.AdomdClient.AdomdDataAdapter $command

$dataset = New-Object -TypeName System.Data.DataSet

$adapter.Fill($dataset) | Out-Null

######## Daily export ########

#make sure the directory exists (with -Force it won't complain if it already exists)

md -Force "$($path)Daily\$($element)\" | Out-Null

$filename = "$($path)Daily\$($element)\$($element)_$($executionDate).csv"

log_message "Exporting to file $($filename)"

$dataset.Tables[0] | export-csv "$($filename)" -notypeinformation -Encoding UTF8

log_message "Removing unwanted chars from $($filename)"

Remove_unwantedChars $filename

######## Beginning of month export ########

If ($isFirstDayOfMonth) {

md -Force "$($path)MonthStart\$($element)\" | Out-Null

$filename = "$($path)MonthStart\$($element)\$($element)_$($executionDate).csv"

log_message "Exporting to file $($filename)"

$dataset.Tables[0] | export-csv "$($filename)" -notypeinformation -Encoding UTF8

log_message "Removing unwanted chars from $($filename)"

Remove_unwantedChars $filename

}

######## End of month export ########

If ($isLastDayOfMonth) {

md -Force "$($path)MonthEnd\$($element)\" | Out-Null

$filename = "$($path)MonthEnd\$($element)\$($element)_$($executionDate).csv"

log_message "Exporting to file $($filename)"

$dataset.Tables[0] | export-csv "$($filename)" -notypeinformation -Encoding UTF8

log_message "Removing unwanted chars from $($filename)"

Remove_unwantedChars $filename

}

}

$status ="successfully"

}

Catch

{

$status ="with errors"

#Error

$e = $_.Exception

$ErrorMessage = $e.Message

while ($e.InnerException) {

$e = $e.InnerException

$ErrorMessage += "`n" + $e.Message

}

log_message ($ErrorMessage)

}

Finally

{

try

{

$finishDate = get-date -f yyyy-MM-dd_HH_mm_ss

$finishDate_raw = get-date

$elapsed_time = calculate_duration $executionDate_raw $finishDate_raw

log_message "Script finished $($status) at $($finishDate)"

#We don't know where the error happened, so we clean up the connection and the process here, skipping whatever was never created

log_message "Closing the connection"

if ($con) { $con.Close() }

log_message "Stopping Power BI"

if ($app) { Stop-Process -Id $app.Id }

#Now report back the error through email

# switch ($status)

# {

# "successfully" {

# send_email "Successful PBI_Exporter execution" @"

#<p><span style="color:green;"><strong>Successful</strong></span> execution of PBI_Exporter.ps1 at <strong>$($executionDate)</strong> finished after a total elapsed time of $($elapsed_time)</p>

#"@

# }

# "with errors" {

# send_email "Error in PBI_Exporter execution" @"

#<p><span style="color:red;"><strong>An error </strong></span>occurred when executing PBI_Exporter.ps1 at <strong>$($executionDate)</strong>:</p>

#<p style="color:red;">$($ErrorMessage)</p>

#"@

# }

# default {

# send_email "Undetermined PBI_Exporter execution" @"

#<p><span style="color:red;"><strong>Undetermined</strong></span> execution status of PBI_Exporter.ps1 at <strong>$($executionDate)</strong></p>

#"@

# }

# }

}

catch

{

#Error

$e = $_.Exception

$ErrorMessage = $e.Message

while ($e.InnerException) {

$e = $e.InnerException

$ErrorMessage += "`n" + $e.Message

}

log_message ($ErrorMessage)

}

}

Hi Salvador

I found your tutorial and I need to use it to store data from a weather website.

I'm stuck at the point where I have to set up PowerShell. Can you please help me with some screenshots or explain how to do each step?

I don't even know how to check which version I have.

thanks a lot!

Cosmin

Hello Cosminc,

Could you confirm if you are stuck with the steps described on https://stackoverflow.com/questions/29828756/the-term-install-module-is-not-recognized-as-the-name-o... ?

Regards,

Salva.

Hi Salva

A friend helped me with PowerShell. Everything works fine now. I really appreciate your effort, it helps me a lot!

Now my challenge is to export the report automatically by email, as a picture or as an attachment (Excel, PDF, PPT). It is the next step in my project after storing the data: developing reports, and also some email alerts that tell me whether everything works OK or not.

Any suggestion will help me.

Thanks,

Cosmin

Hello Cosminc,

Have you tried the subscription to dashboards/reports? https://docs.microsoft.com/en-us/power-bi/service-report-subscribe

You can subscribe to receive an email on a daily/weekly basis upon data refresh.

If I'm not mistaken, there are also some visuals that can send an email alert if they are below or above a certain threshold.

Regards,

Salva

Dear colleague,

Many thanks for the detailed post about storing and using information from a dynamic data source. I'm trying to do the same in a small proof of concept at our firm.

I'm struggling with running the script from PowerShell.

The log file stops with this message:

*****************************************

Trying to refresh Power BI now

Connecting to PBI using ADOMD.NET

Quering datasource localhost:61731 for database 4363995e-1009-4db5-9a93-5663e9b889e8

The type [Microsoft.AnalysisServices.AdomdClient.AdomdConnection] cannot be found. Make sure that the assembly that contains this type is loaded.

******************************************

I checked with the IT department and I have the rights to access the localhost.

I also verified that this file is available on my PC:

[System.Reflection.Assembly]::LoadFile("C:\Program Files (x86)\Microsoft SQL Server\130\SDK\Assemblies\Microsoft.AnalysisServices.tabular.DLL")

Does anyone have a hint where I could look further to solve this problem?

Many thanks,

Nicole

Solved by reinstalling SQL_AS_AMO.msi and SQL_AS_ADOMD.msi.
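For anyone hitting the same `LoadFile` or `AdomdConnection` errors, a quick check like the one below might tell you whether the two libraries are where the script expects them before you run it again. It is only a sketch: the helper name `Test-AsLibraries` is made up, and the default DLL path is simply the one hard-coded in the script.

```powershell
# Hypothetical helper: reports whether the AMO DLL file exists and
# whether the ADOMD.NET client assembly can be loaded by name.
function Test-AsLibraries {
    param(
        [string]$AmoDllPath = "C:\Program Files (x86)\Microsoft SQL Server\130\SDK\Assemblies\Microsoft.AnalysisServices.tabular.DLL"
    )

    # LoadWithPartialName returns $null when the assembly cannot be found
    $adomd = [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices.AdomdClient")

    [pscustomobject]@{
        AmoDllFound = Test-Path -LiteralPath $AmoDllPath
        AdomdLoaded = ($null -ne $adomd)
    }
}
```

Running `Test-AsLibraries` with no arguments checks the default path. If either value comes back as False, reinstalling the corresponding msi (or correcting the path in the script) is the first thing I would try.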
