- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Rolling sum with large number of data

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Rolling sum with large number of data

Hello,

I'm trying to visualize the usage of software licenses in our company.

Table below shows small sample of information, which I can get from the license server.

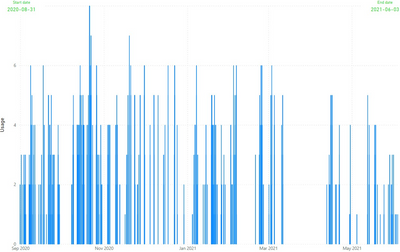

The goal is to get a graph showing how many tokens at any time have been in use.

I created a rolling sum for the tokens as a measure according to solutions, which I found in some other posts (see code below).

Everything works fine for small number of data. However, if I load data for few months, the performance drops very rapidly and at full year (around 40000 rows) I run out of memory.

I found few posts with similar problems but either there was no solution, or the purposed solutions do not work in my case.

Is there a way to make rolling sum more efficient? Or maybe there is a different approach to create visualization that I need?

Thanks in advance for help.

BP

Data:

Measure:

Tokens_Sum = CALCULATE(SUM(licenses_example[Tokens]),FILTER(ALL(licenses_example[time]),licenses_example[time] <=MAX(licenses_example[time])))

Expected result:

Raw data:

| lic | time | uesr | country | InOut | Tokens |

| lic_11 | 2020-08-31 16:01 | uesr_111 | KR | OUT: | 1 |

| lic_11 | 2020-08-31 16:01 | uesr_111 | KR | IN: | -1 |

| lic_11 | 2020-08-31 16:02 | uesr_111 | KR | OUT: | 1 |

| lic_22 | 2020-08-31 16:02 | uesr_111 | KR | OUT: | 3 |

| lic_22 | 2020-09-01 15:02 | uesr_111 | KR | IN: | -3 |

| lic_11 | 2020-09-01 15:02 | uesr_111 | KR | IN: | -1 |

| lic_33 | 2020-09-01 17:06 | uesr_222 | CN | OUT: | 5 |

| lic_44 | 2020-09-01 17:06 | uesr_222 | CN | OUT: | 2 |

| lic_55 | 2020-09-01 17:06 | uesr_222 | CN | OUT: | 1 |

| lic_33 | 2020-09-01 20:33 | uesr_222 | CN | IN: | -5 |

| lic_44 | 2020-09-01 20:33 | uesr_222 | CN | IN: | -2 |

| lic_55 | 2020-09-01 20:33 | uesr_222 | CN | IN: | -1 |

| lic_11 | 2020-09-02 18:29 | uesr_333 | JP | OUT: | 1 |

| lic_22 | 2020-09-02 18:29 | uesr_333 | JP | OUT: | 3 |

| lic_11 | 2020-09-03 00:27 | uesr_333 | JP | IN: | -1 |

| lic_22 | 2020-09-03 00:27 | uesr_333 | JP | IN: | -3 |

| lic_11 | 2020-09-03 08:00 | uesr_333 | JP | OUT: | 1 |

| lic_22 | 2020-09-03 08:00 | uesr_333 | JP | OUT: | 3 |

| lic_11 | 2020-09-03 16:09 | uesr_333 | JP | IN: | -1 |

| lic_22 | 2020-09-03 16:09 | uesr_333 | JP | IN: | -3 |

| lic_33 | 2020-09-04 02:25 | uesr_222 | CN | OUT: | 5 |

| lic_44 | 2020-09-04 02:25 | uesr_222 | CN | OUT: | 2 |

| lic_55 | 2020-09-04 02:25 | uesr_222 | CN | OUT: | 1 |

| lic_33 | 2020-09-04 13:45 | uesr_222 | CN | IN: | -5 |

| lic_44 | 2020-09-04 13:45 | uesr_222 | CN | IN: | -2 |

| lic_55 | 2020-09-04 13:45 | uesr_222 | CN | IN: | -1 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You could try aggregating the data in Power Query so the grain of the table corresponds to the grain of the visual (day, lic, country).

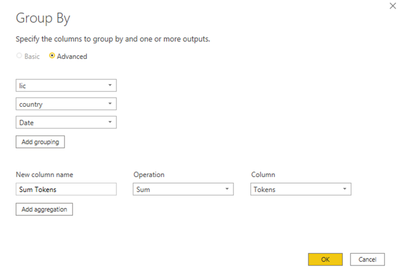

In Power Query, go to Transform --> Group By:

Here's the complete M (the relevant part begins with step AddDateColumn):

let

Source = Table.FromRows(

Json.Document(

Binary.Decompress(

Binary.FromText(

"ldOxDoJADAbgV7kwS9Jr7wC7OqkJGqOTMQ7GwcRJ4/t7EIWIV8otQI589C9pj8fsfrucrc1mGQJCDlVO1tiCoTl6XZ+P8LJ5XO/CZXPYc7jZ7DRLg8u6cfk4xCkVEZMgxeA8hwB9HH6iUizqFNhHJRrAkqH4QmzzLOo+qu+gc0kQO+h9EpSjInB7NISfHuWoCpSjKjA6OQGisRXj/Aup/cZqq0zOFCgNABkAxjIC/6L+VlThSMWKAdJ7VKFcsVmr2M9Re1QgCSPnDCCjT98OFUojp0JpO5yxxC4Gle1QoRxVgSHq6Q0=",

BinaryEncoding.Base64

),

Compression.Deflate

)

),

let

_t = ((type nullable text) meta [Serialized.Text = true])

in

type table [lic = _t, time = _t, uesr = _t, country = _t, InOut = _t, Tokens = _t]

),

ChangeType = Table.TransformColumnTypes(

Source,

{

{"lic", type text},

{"time", type datetime},

{"uesr", type text},

{"country", type text},

{"InOut", type text},

{"Tokens", Int64.Type}

}

),

AddDateColumn = Table.AddColumn(ChangeType, "Date", each DateTime.Date([time])),

ChangeType2 = Table.TransformColumnTypes(AddDateColumn, {{"Date", type date}}),

GroupRows = Table.Group(

ChangeType2,

{"lic", "country", "Date"},

{{"Sum Tokens", each List.Sum([Tokens]), type nullable number}}

)

in

GroupRows

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your response. Unfortunately, this doesn't provide the results that I need.

As you can see in the data, every time a user opens a software tokens are checked OUT and there is a positive number in the "Tokens" column. When the user closes the software, tokens are checked back IN and there is same but negative number in "Tokens" column. Now assuming that users close their programs at the end of the day (as they should), all tokens will be checked back IN and the sum over the whole day will return 0.

So unfortunately, this solution would only provide information on how many tokens are in use at midnight (if some users don't close the software).

Creating a sum only of the positive tokens is unfortunately also not a solution. For example if user would open a software using for example 10 tokens, after 1h accidently close it and immediately open it again and spend another 1h to finish his work, it would mean that during these 2h he checked OUT 10 tokens twice, so the sum would be 20. But in fact, he was only using 10 tokens at any given time during these 2h. I'm interested in the latter.

So, let me explain more precisely what I am after.

The purpose is to see how many of the tokens that we buy, are actually being used, how often there are peeks and lows, etc. Also important is distribution between the countries and different kinds of licenses. Ultimately, we want to use these results to optimize out licensing costs.

So I need every change in the usage to be represented in the chart.

Here is an example:

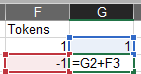

In Excel I could do a addition row by row, to get this result, but of course I don’t have the possibility to filter the results by license, country, etc.

Is there a way to “cheat” PowerBI to make this kind of row by row addition?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You could try creating a calculated column to achieve row-by-row additon. This would have the advantage of pre-calculating the rolling sum, but it wouldn't have the flexibility of filtering by country, etc.

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 107 | |

| 100 | |

| 80 | |

| 63 | |

| 58 |

| User | Count |

|---|---|

| 148 | |

| 111 | |

| 94 | |

| 84 | |

| 67 |