- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Removing Null values before a dataset w/o remo...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Removing Null values before a dataset w/o removing entire rows

I'm working with data where a driver makes a run through a particular section of road each day. The raw data has a date/time, gps coordinates, and a speed but are all aggregated into a single .csv file.

DateTime Lat Long Speed

12/25/18 9:00:01 xxx xxx 25

12/25/18 9:00:02 xxx xxx 26

...

12/26/18 9:00:01 xxx xxx 24

12/26/18 9:00:02 xxx xxx 23

...

I've managed to create a new column for each date with the speed data filling the rows but there are many "null" cells I cannot remove.

Elapsed Time 12/25/18 12/26/18 ... ...

0 25 null

1 26 null

...

72 null 24

73 null 23

...

My goal is to collapse the data by shifting my data set up into a shared "elapsed time" like so:

Elapsed Time 12/25/18 12/26/18 ... ...

0 25 24

1 26 23

...

I expect each run to take a different amount of time +/- 10 seconds and don't mine having null values after the data set but I need each column to run the data I have wrt the same elapsed time counter. Any suggestions?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure, everything is possible with PowerQuery (it's just a question of if it finishes on time 😉 )

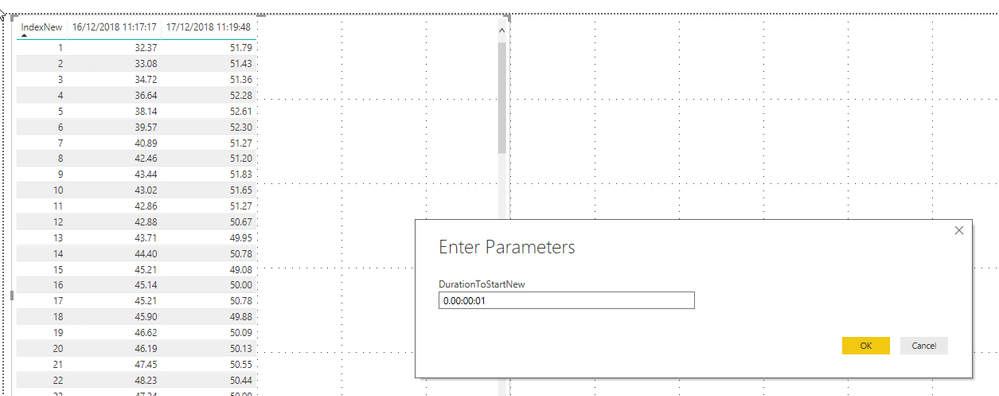

Please check enclosed file. It creates a new column if there is a gap for more than one second. There is a parameter "DurationToStartNew" that let's you edit that time interval for the next column to start:

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I took your original table and added these two columns:

Date = DATE(YEAR([DateTime]),MONTH([DateTime]),DAY([DateTime]))

Elapsed Time =

VAR __min = MINX(FILTER(ALL('Table6'),DATE(YEAR([DateTime]),MONTH([DateTime]),DAY([DateTime])) = DATE(YEAR(EARLIER([DateTime])),MONTH(EARLIER([DateTime])),DAY(EARLIER([DateTime])))),[DateTime])

RETURN DATEDIFF(__min,[DateTime],SECOND)

Then I could create a matrix that looked like what you wanted using Elapsed Time for my rows, Date for my Columns and Speed for my Value.

I attached the PBIX, you want Page 2, Table6

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does this work within power query as well?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

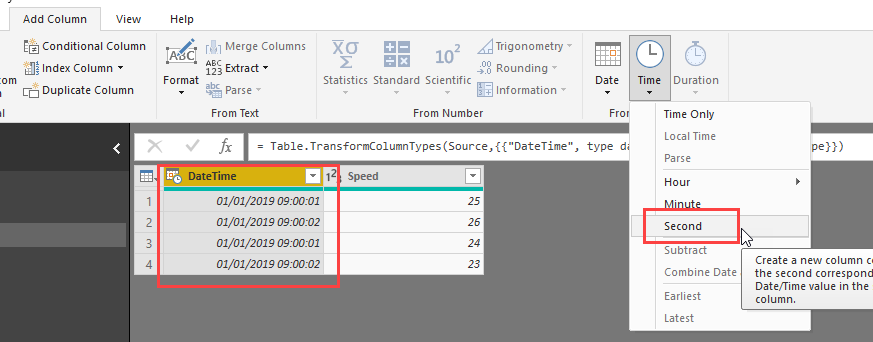

To do this in the query editor, you would first add a new column that extracts that seconds from your datetime-columns:

Then transform the DateTime-column to date and pivot on it (with Speed as value and don't summarize in the advanced options).

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the prompt responses! Your solution works great in instances when my data starts at the same second but in instances where one data log starts at 5 seconds and another at 15 this solution produces unusable data. I'm not familiar with the M code used within power query but taking the (current time) - (the initial time from each date) would produce an "elapsed time" number.

Another solution could be to test that the interval between each datetime entry is one second and having a column count upwards from 0, resetting to 0 (ie, a new column once we create a matrix) when a jump more than say... 5 seconds is detected.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry, but I don't understand your request. Please provide sample data that makes your request more clear.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

DateTime Speed (mph)Second

| 12/16/2018 11:17:17 AM | 32.37 | 17 |

| 12/16/2018 11:17:18 AM | 33.08 | 18 |

| 12/16/2018 11:17:19 AM | 34.72 | 19 |

| 12/16/2018 11:17:20 AM | 36.64 | 20 |

| 12/16/2018 11:17:21 AM | 38.14 | 21 |

| 12/16/2018 11:17:22 AM | 39.57 | 22 |

| 12/16/2018 11:17:23 AM | 40.89 | 23 |

| 12/16/2018 11:17:24 AM | 42.46 | 24 |

| 12/16/2018 11:17:25 AM | 43.44 | 25 |

| 12/16/2018 11:17:26 AM | 43.02 | 26 |

| 12/16/2018 11:17:27 AM | 42.86 | 27 |

| 12/16/2018 11:17:28 AM | 42.88 | 28 |

| 12/16/2018 11:17:29 AM | 43.71 | 29 |

| 12/16/2018 11:17:30 AM | 44.4 | 30 |

| 12/16/2018 11:17:31 AM | 45.21 | 31 |

| 12/16/2018 11:17:32 AM | 45.14 | 32 |

| 12/16/2018 11:17:33 AM | 45.21 | 33 |

| 12/16/2018 11:17:34 AM | 45.9 | 34 |

| 12/16/2018 11:17:35 AM | 46.62 | 35 |

| 12/16/2018 11:17:36 AM | 46.19 | 36 |

| 12/16/2018 11:17:37 AM | 47.45 | 37 |

| 12/16/2018 11:17:38 AM | 48.23 | 38 |

| 12/16/2018 11:17:39 AM | 47.24 | 39 |

| 12/16/2018 11:17:40 AM | 48.36 | 40 |

| 12/16/2018 11:17:41 AM | 47.85 | 41 |

| 12/16/2018 11:17:42 AM | 48.74 | 42 |

| 12/16/2018 11:17:43 AM | 48.47 | 43 |

| 12/16/2018 11:17:44 AM | 49.15 | 44 |

| 12/16/2018 11:17:45 AM | 49.44 | 45 |

| 12/16/2018 11:17:46 AM | 49.59 | 46 |

| 12/16/2018 11:17:47 AM | 49.12 | 47 |

| 12/16/2018 11:17:48 AM | 48.77 | 48 |

| 12/16/2018 11:17:49 AM | 48.94 | 49 |

| 12/16/2018 11:17:50 AM | 48.32 | 50 |

| 12/16/2018 11:17:51 AM | 48.63 | 51 |

| 12/16/2018 11:17:52 AM | 47.76 | 52 |

| 12/16/2018 11:17:53 AM | 47.74 | 53 |

| 12/16/2018 11:17:54 AM | 47.29 | 54 |

| 12/16/2018 11:17:55 AM | 47.18 | 55 |

| 12/16/2018 11:17:56 AM | 47.49 | 56 |

| 12/16/2018 11:17:57 AM | 47.27 | 57 |

| 12/16/2018 11:17:58 AM | 47.07 | 58 |

| 12/16/2018 11:17:59 AM | 47.11 | 59 |

| 12/16/2018 11:18:00 AM | 47.51 | 0 |

| 12/16/2018 11:18:01 AM | 49.01 | 1 |

| 12/16/2018 11:18:02 AM | 48.03 | 2 |

| 12/16/2018 11:18:03 AM | 47.83 | 3 |

| 12/16/2018 11:18:04 AM | 48.63 | 4 |

| 12/16/2018 11:18:05 AM | 47.78 | 5 |

| 12/16/2018 11:18:06 AM | 48.56 | 6 |

| 12/16/2018 11:18:07 AM | 48.65 | 7 |

| 12/16/2018 11:18:08 AM | 48.25 | 8 |

| 12/16/2018 11:18:09 AM | 48.54 | 9 |

| 12/16/2018 11:18:10 AM | 47.69 | 10 |

| 12/16/2018 11:18:11 AM | 48.07 | 11 |

| 12/16/2018 11:18:12 AM | 48.72 | 12 |

| 12/16/2018 11:18:13 AM | 48.5 | 13 |

| 12/16/2018 11:18:14 AM | 48.99 | 14 |

| 12/16/2018 11:18:15 AM | 48.27 | 15 |

| 12/16/2018 11:18:16 AM | 48.05 | 16 |

| 12/16/2018 11:18:17 AM | 48.56 | 17 |

| 12/16/2018 11:18:18 AM | 47.71 | 18 |

| 12/16/2018 11:18:19 AM | 47.49 | 19 |

| 12/16/2018 11:18:20 AM | 47.74 | 20 |

| 12/16/2018 11:18:21 AM | 48.18 | 21 |

| 12/16/2018 11:18:22 AM | 48.85 | 22 |

| 12/16/2018 11:18:23 AM | 49.53 | 23 |

| 12/16/2018 11:18:24 AM | 48.77 | 24 |

| 12/16/2018 11:18:25 AM | 48.92 | 25 |

| 12/16/2018 11:18:26 AM | 49.3 | 26 |

| 12/16/2018 11:18:27 AM | 48.16 | 27 |

| 12/16/2018 11:18:28 AM | 49.19 | 28 |

| 12/16/2018 11:18:29 AM | 48.34 | 29 |

| 12/16/2018 11:18:30 AM | 48.81 | 30 |

| 12/16/2018 11:18:31 AM | 49.12 | 31 |

| 12/16/2018 11:18:32 AM | 47.83 | 32 |

| 12/16/2018 11:18:33 AM | 47.98 | 33 |

| 12/16/2018 11:18:34 AM | 47.69 | 34 |

| 12/16/2018 11:18:35 AM | 47.69 | 35 |

| 12/16/2018 11:18:36 AM | 46.89 | 36 |

| 12/16/2018 11:18:37 AM | 46.57 | 37 |

| 12/16/2018 11:18:38 AM | 45.95 | 38 |

| 12/16/2018 11:18:39 AM | 45.37 | 39 |

| 12/16/2018 11:18:40 AM | 46.64 | 40 |

| 12/16/2018 11:18:41 AM | 46.57 | 41 |

| 12/16/2018 11:18:42 AM | 45.88 | 42 |

| 12/16/2018 11:18:43 AM | 46.82 | 43 |

| 12/16/2018 11:18:44 AM | 47.27 | 44 |

| 12/16/2018 11:18:45 AM | 46.77 | 45 |

| 12/16/2018 11:18:46 AM | 47.33 | 46 |

| 12/16/2018 11:18:47 AM | 48.72 | 47 |

| 12/16/2018 11:18:48 AM | 47.78 | 48 |

| 12/16/2018 11:18:49 AM | 48.3 | 49 |

| 12/16/2018 11:18:50 AM | 47.89 | 50 |

| 12/16/2018 11:18:51 AM | 47.98 | 51 |

| 12/16/2018 11:18:52 AM | 48.23 | 52 |

| 12/16/2018 11:18:53 AM | 48.9 | 53 |

| 12/16/2018 11:18:54 AM | 49.84 | 54 |

| 12/16/2018 11:18:55 AM | 51.16 | 55 |

| 12/16/2018 11:18:56 AM | 50.49 | 56 |

| 12/16/2018 11:18:57 AM | 51.58 | 57 |

| 12/16/2018 11:18:58 AM | 51.05 | 58 |

| 12/16/2018 11:18:59 AM | 52.97 | 59 |

| 12/16/2018 11:19:00 AM | 52.79 | 0 |

| 12/16/2018 11:19:01 AM | 52.55 | 1 |

| 12/16/2018 11:19:02 AM | 52.99 | 2 |

| 12/16/2018 11:19:03 AM | 51.72 | 3 |

| 12/16/2018 11:19:04 AM | 53.04 | 4 |

| 12/16/2018 11:19:05 AM | 51.76 | 5 |

| 12/16/2018 11:19:06 AM | 53.24 | 6 |

| 12/16/2018 11:19:07 AM | 53.08 | 7 |

| 12/16/2018 11:19:08 AM | 53.22 | 8 |

| 12/16/2018 11:19:09 AM | 52.72 | 9 |

| 12/16/2018 11:19:10 AM | 53.02 | 10 |

| 12/16/2018 11:19:11 AM | 52.59 | 11 |

| 12/16/2018 11:19:12 AM | 51.85 | 12 |

| 12/16/2018 11:19:13 AM | 51.76 | 13 |

| 12/16/2018 11:19:14 AM | 52.03 | 14 |

| 12/16/2018 11:19:15 AM | 51.65 | 15 |

| 12/16/2018 11:19:16 AM | 51.76 | 16 |

| 12/16/2018 11:19:17 AM | 51.47 | 17 |

| 12/16/2018 11:19:18 AM | 51.47 | 18 |

| 12/16/2018 11:19:19 AM | 51.79 | 19 |

| 12/16/2018 11:19:20 AM | 51.94 | 20 |

| 12/16/2018 11:19:21 AM | 52.23 | 21 |

| 12/16/2018 11:19:22 AM | 52.05 | 22 |

| 12/16/2018 11:19:23 AM | 52.01 | 23 |

| 12/16/2018 11:19:24 AM | 51.47 | 24 |

| 12/16/2018 11:19:25 AM | 52.12 | 25 |

| 12/16/2018 11:19:26 AM | 51.85 | 26 |

| 12/16/2018 11:19:27 AM | 50.87 | 27 |

| 12/16/2018 11:19:28 AM | 51.36 | 28 |

| 12/16/2018 11:19:29 AM | 51.38 | 29 |

| 12/16/2018 11:19:30 AM | 49.77 | 30 |

| 12/16/2018 11:19:31 AM | 52.46 | 31 |

| 12/16/2018 11:19:32 AM | 49.91 | 32 |

| 12/16/2018 11:19:33 AM | 49.55 | 33 |

| 12/16/2018 11:19:34 AM | 50.67 | 34 |

| 12/16/2018 11:19:35 AM | 50.29 | 35 |

| 12/16/2018 11:19:36 AM | 50.33 | 36 |

| 12/16/2018 11:19:37 AM | 50.02 | 37 |

| 12/16/2018 11:19:38 AM | 49.17 | 38 |

| 12/16/2018 11:19:39 AM | 48.54 | 39 |

| 12/16/2018 11:19:40 AM | 46.98 | 40 |

| 12/16/2018 11:19:41 AM | 49.21 | 41 |

| 12/16/2018 11:19:42 AM | 49.91 | 42 |

| 12/16/2018 11:19:43 AM | 49.12 | 43 |

| 12/16/2018 11:19:44 AM | 49.93 | 44 |

| 12/16/2018 11:19:45 AM | 49.03 | 45 |

| 12/16/2018 11:19:46 AM | 49.1 | 46 |

| 12/16/2018 11:19:47 AM | 50.89 | 47 |

| 12/16/2018 11:19:48 AM | 51.79 | 48 |

| 12/16/2018 11:19:49 AM | 51.43 | 49 |

| 12/16/2018 11:19:50 AM | 51.36 | 50 |

| 12/16/2018 11:19:51 AM | 52.28 | 51 |

| 12/16/2018 11:19:52 AM | 52.61 | 52 |

| 12/16/2018 11:19:53 AM | 52.3 | 53 |

| 12/16/2018 11:19:54 AM | 51.27 | 54 |

| 12/16/2018 11:19:55 AM | 51.2 | 55 |

| 12/16/2018 11:19:56 AM | 51.83 | 56 |

| 12/16/2018 11:19:57 AM | 51.65 | 57 |

| 12/16/2018 11:19:58 AM | 51.27 | 58 |

| 12/16/2018 11:19:59 AM | 50.67 | 59 |

| 12/16/2018 11:20:00 AM | 49.95 | 0 |

| 12/16/2018 11:20:01 AM | 50.78 | 1 |

| 12/16/2018 11:20:02 AM | 49.08 | 2 |

| 12/16/2018 11:20:03 AM | 50 | 3 |

| 12/16/2018 11:20:04 AM | 50.78 | 4 |

| 12/16/2018 11:20:05 AM | 49.88 | 5 |

| 12/16/2018 11:20:06 AM | 50.09 | 6 |

| 12/16/2018 11:20:07 AM | 50.13 | 7 |

| 12/16/2018 11:20:08 AM | 50.55 | 8 |

| 12/16/2018 11:20:09 AM | 50.44 | 9 |

| 12/16/2018 11:20:10 AM | 50.09 | 10 |

| 12/16/2018 11:20:11 AM | 51.05 | 11 |

| 12/16/2018 11:20:12 AM | 50.09 | 12 |

| 12/16/2018 11:20:13 AM | 49.97 | 13 |

| 12/16/2018 11:20:14 AM | 50.42 | 14 |

| 12/16/2018 11:20:15 AM | 51 | 15 |

| 12/16/2018 11:20:16 AM | 50.24 | 16 |

| 12/16/2018 11:20:17 AM | 49.39 | 17 |

| 12/16/2018 11:20:18 AM | 48.92 | 18 |

| 12/16/2018 11:20:19 AM | 47.98 | 19 |

| 12/16/2018 11:20:20 AM | 47.96 | 20 |

| 12/16/2018 11:20:21 AM | 47.42 | 21 |

Second would only work if the dataset is less than 60 seconds long. Is there a way to start counting seconds at 0 and continue until there is a large gap in datetime? (a few hours, or the start of the next calendar day?)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure, everything is possible with PowerQuery (it's just a question of if it finishes on time 😉 )

Please check enclosed file. It creates a new column if there is a gap for more than one second. There is a parameter "DurationToStartNew" that let's you edit that time interval for the next column to start:

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That works beautifully! Thank you so much for helping.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 113 | |

| 100 | |

| 78 | |

| 76 | |

| 52 |

| User | Count |

|---|---|

| 144 | |

| 109 | |

| 108 | |

| 88 | |

| 61 |