- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Remove Duplicates & Relationsship bug?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Remove Duplicates & Relationsship bug?

Hi guys,

I have two tables t1 and t2. They are related through column r. In t2 the values in column r are distinct, in t1 they are not. I applied the function "Remove Duplicates" (aka Table.Distinct()) on t1.r (not on the whole table), but I still can't establish a 1:1 relationship between these tables: "The cardinality you selected isn't valid for this relationship". I counted the rows and the data shrinked. I also tried to group the table by r and count the group rows - there is no group with more than 1 row...Finally I tried to recreate this scenario with dummy data and it works like it should.

Is it possible that Power BI doesn't update the meta data and thus thinks the values in t1 are still not distinct?

My data is coming from a SQL Server (import mode).

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your patience WolfBiber,

With help of your example I finally found the problem...

- Power Query --> case sensitive

- Power Pivot / Power View --> case insensitive

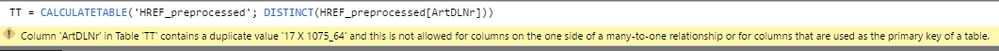

That's why I can't find "duplicates" in Power Query, but on the canvas you see duplicate values... (it even transformed small x to capital letter). just WOW Microsoft 😞

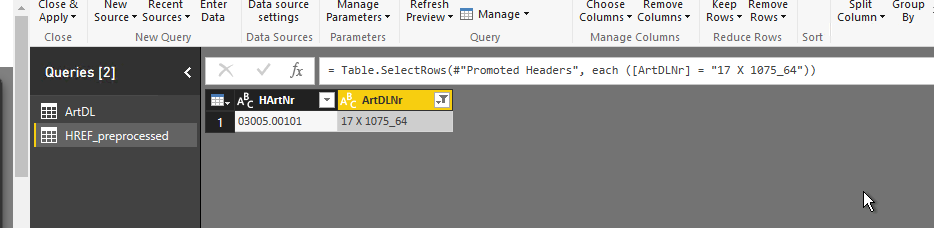

For our example:

- HArtNr "03005.00101" is assigned to ArtDLNr "17 X 1075_64"

- HArtNr "03005.00203" is assigned to ArtDLNr "17 x 1075_64"

Possible solutions/work arounds and some stuff to read:

http://www.thebiccountant.com/2015/08/17/create-a-dimension-table-with-power-query-avoid-the-bug/

http://www.thebiccountant.com/2016/10/27/tame-case-sensitivity-power-query-powerbi/

Still didn't figure out how I can fix my problem. I could remove duplicates in case-insensitive manner with

= Table.Distinct(aTable, { "aColumn", Comparer.OrdinalIgnoreCase } )but I don't want to lose this distinction. ATM I'm wondering how I can get around this. But if necessary, I will open another thread for this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you have NULL and Empty Values in this column?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No sir.

In the meantime I loaded the data into jupyter notebook and preprocessed it there. I loaded transformed data into Power BI and tried to establish a relationship - it's still n:1...So the problem seems not to arise from using the "Remove Duplicate" function. Still have no clue 😕

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

can you share some example Data as PBIX?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure, if you tell me how do I do this?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

just upload the file to some cloud storage like onedrive and share the link

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

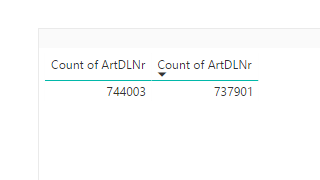

ok, as you can see you have more data rows in one table than in the other

and you have ArtDLNR in one table which isnt present in the other, and vice versa.

and you have many rows with Empty ArtDLNr (not a unique constraint),

thats not a 1:1.

You have to cleanse your data or keap it as 1:*

Ich wünsche Ihnen viel Erfolg

Viele Grüsse aus HH

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

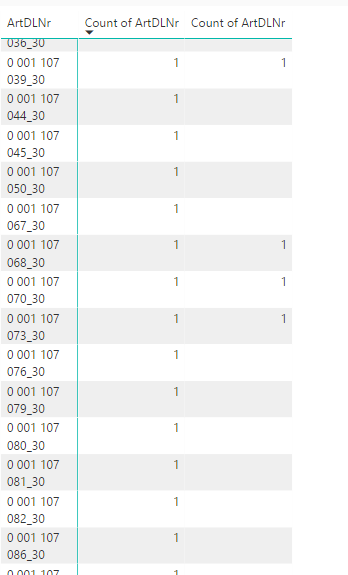

Well, first of all, thank you for taking time to help me.

I'm sorry, but I can't really understand your answer.

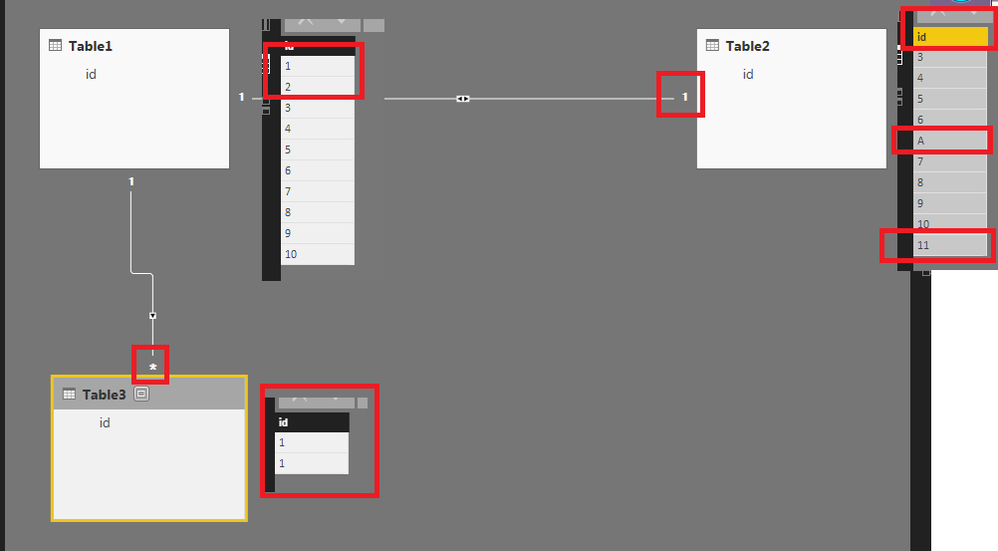

1) One-to-one relation means, there is at most one entity in the tables (defined by a id/join key). It doesn't have to be exactly one. Consider my example:

Table1 und Table2 do not have exactly same join keys. We have values in Table1, which we do not have in Table2 and vice versa. The relationship is still 1:1 because we don't have more than one same value in those tables.

Then cosndier Table3, it has duplicate join key. That's why it's one-to-many, not one-to-one.

So the only "cleanse" activity I have to do is to dump duplicates, which I did in my data.

2) What you describe in your answer - to guaranteed have a value in both tables - is called "referential integrity". This is definitely not required for one-to-one-relationship.

3) Could you tell me where in my data are null values? I'm pretty sure I have none.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good morning,

it seems that you are more expiriented than others. I just tried to explain some problems that can occur with 1:1 relationsships.

1.) and 2.) thats true, and if this is your desired result everything is fine.

3.) the main mistakes made for a 1:1 is that the undestanding that empy values "" and Null values are treated same.

4.) did you cleanse duplicates and then combined 2 Columns? Maybe thats the reason you have duplicates in your Key column in HREF

But I can't use the Query Editor cause I don't have the source files. So I have only Dax Methods to check your data

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

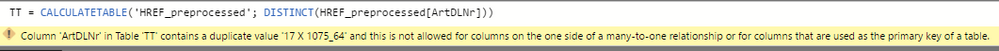

Hey,

you have duplicates e.g.: "17 X 1075_64" in HREF_preprocessed

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@WolfBiber wrote:Hey,

you have duplicates e.g.: "17 X 1075_64" in HREF_preprocessed

^^

@WolfBiber wrote:Good morning,

it seems that you are more expiriented than others. I just tried to explain some problems that can occur with 1:1 relationsships.

1.) and 2.) thats true, and if this is your desired result everything is fine.

3.) the main mistakes made for a 1:1 is that the undestanding that empy values "" and Null values are treated same.

4.) did you cleanse duplicates and then combined 2 Columns? Maybe thats the reason you have duplicates in your Key column in HREF

But I can't use the Query Editor cause I don't have the source files. So I have only Dax Methods to check your data

But neither I have null values in my data, nor duplicates. That's why the topic name contains "bug?"...

4) --> I created new calculated column, concatenating two strings. I removed duplicates after the creation of the column. I also tried both to transformate my data directly in Power BI and outside of it, with Jupyter Notebooks. Same result.

Would it help you if I gave you the data as well?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

BTW I can create your "TT" calculated table without an error.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In the example data you gave me you have duplicates:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your patience WolfBiber,

With help of your example I finally found the problem...

- Power Query --> case sensitive

- Power Pivot / Power View --> case insensitive

That's why I can't find "duplicates" in Power Query, but on the canvas you see duplicate values... (it even transformed small x to capital letter). just WOW Microsoft 😞

For our example:

- HArtNr "03005.00101" is assigned to ArtDLNr "17 X 1075_64"

- HArtNr "03005.00203" is assigned to ArtDLNr "17 x 1075_64"

Possible solutions/work arounds and some stuff to read:

http://www.thebiccountant.com/2015/08/17/create-a-dimension-table-with-power-query-avoid-the-bug/

http://www.thebiccountant.com/2016/10/27/tame-case-sensitivity-power-query-powerbi/

Still didn't figure out how I can fix my problem. I could remove duplicates in case-insensitive manner with

= Table.Distinct(aTable, { "aColumn", Comparer.OrdinalIgnoreCase } )but I don't want to lose this distinction. ATM I'm wondering how I can get around this. But if necessary, I will open another thread for this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Absolutely incredible that a 6 year old bug with thousands of view and an incredible amount of threads created about it still has not been fixed.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 112 | |

| 100 | |

| 76 | |

| 74 | |

| 49 |

| User | Count |

|---|---|

| 146 | |

| 108 | |

| 106 | |

| 90 | |

| 62 |