
RUNNING SUM BY DATE BY GROUP BY LANE

Hi everyone,

I hope you are doing well.

I am struggling with the following problem and would appreciate any help SO MUCH!!

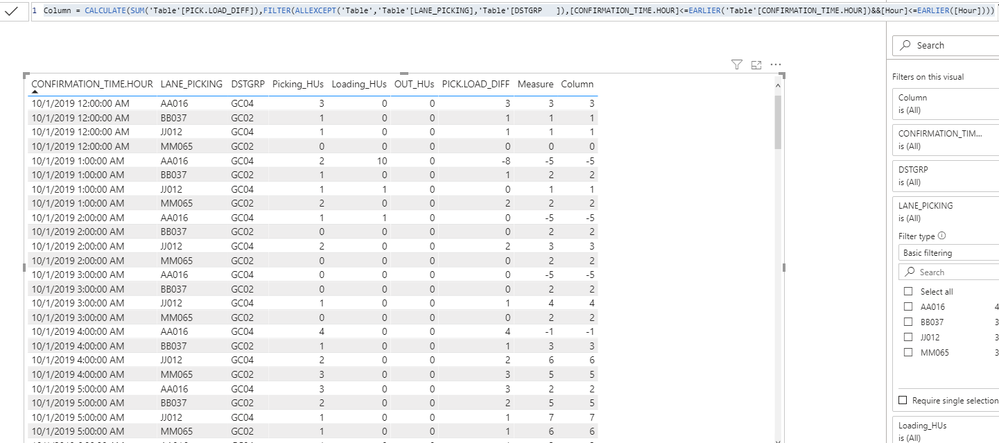

I want to calculate the running sum of PICK.LOAD_DIFF by hour, date, store and lane.

CONFIRMATION_TIME.HOUR is the date-and-hour group (e.g. 26/10/2019 18:00:00).

The calculated column I have:

This is the DAX I tried, but it just returns each row's PICK.LOAD_DIFF instead of the running sum.

I would really appreciate any help.


@AnnaSA it would help if you shared sample data in an Excel file, together with the expected output, so it can be used to put together a solution.

Subscribe to the @PowerBIHowTo YT channel for an upcoming video on List and Record functions in Power Query!!

Learn Power BI and Fabric - subscribe to our YT channel - Click here: @PowerBIHowTo

If my solution proved useful, I'd be delighted to receive Kudos. When you put effort into asking a question, it's equally thoughtful to acknowledge and give Kudos to the individual who helped you solve the problem. It's a small gesture that shows appreciation and encouragement! ❤

Did I answer your question? Mark my post as a solution. Proud to be a Super User! Appreciate your Kudos 🙂

Feel free to email me with any of your BI needs.


Hi @parry2k

Apologies, I am new to Power BI and to this community.

Will this help?

So, I have multiple stores. One store can be assigned to 2 lanes, and a lane can hold more than one store. Units go in and out throughout the day, and I have to calculate how many units were in a specific lane for a specific store in a given hour group on a given day.

I will truly appreciate any help.

Please let me know if you need more information.

| DATE | HOUR | STORE | LANE | IN | OUT | DIFF | CUMTOTAL |
|---|---|---|---|---|---|---|---|
| 02/02/2020 | 08:00:00 | STORE 1 | LANE A | 3 | 2 | 1 | 1 |
| 02/02/2020 | 09:00:00 | STORE 1 | LANE A | 5 | 4 | 1 | 2 |
| 02/02/2020 | 10:00:00 | STORE 1 | LANE A | 6 | 1 | 5 | 7 |
| 02/02/2020 | 11:00:00 | STORE 1 | LANE A | 1 | 7 | -6 | 1 |
| 02/02/2020 | 12:00:00 | STORE 1 | LANE A | 10 | 1 | 9 | 10 |
| 02/02/2020 | 13:00:00 | STORE 1 | LANE A | 6 | 1 | 5 | 15 |
| 02/02/2020 | 14:00:00 | STORE 1 | LANE A | 0 | 0 | 0 | 15 |
| 02/02/2020 | 15:00:00 | STORE 1 | LANE B | 0 | 9 | -9 | 0 |
| 02/02/2020 | 16:00:00 | STORE 1 | LANE B | 7 | 2 | 5 | 5 |
| 03/02/2020 | 17:00:00 | STORE 1 | LANE B | 5 | 3 | 2 | 7 |
| 03/02/2020 | 18:00:00 | STORE 1 | LANE B | 3 | 0 | 3 | 10 |
| 03/02/2020 | 19:00:00 | STORE 2 | LANE C | 7 | 0 | 7 | 0 |
| 03/02/2020 | 20:00:00 | STORE 2 | LANE C | 6 | 2 | 4 | 4 |
| 03/02/2020 | 21:00:00 | STORE 2 | LANE C | 10 | 8 | 2 | 6 |
| 03/02/2020 | 22:00:00 | STORE 2 | LANE C | 2 | 1 | 1 | 7 |
| 03/02/2020 | 23:00:00 | STORE 2 | LANE C | 8 | 1 | 7 | 14 |
| 03/02/2020 | 00:00:00 | STORE 2 | LANE C | 0 | 1 | -1 | 13 |
| 03/02/2020 | 01:00:00 | STORE 2 | LANE C | 8 | 1 | 7 | 20 |
| 03/02/2020 | 02:00:00 | STORE 2 | LANE C | 1 | 1 | 0 | 20 |


| DATE | HOUR | STORE | LANE | IN | OUT | DIFF | CUMTOTAL |
|---|---|---|---|---|---|---|---|
| 02/02/2020 | 08:00:00 | STORE 1 | LANE A | 3 | 2 | 1 | 1 |
| 02/02/2020 | 09:00:00 | STORE 1 | LANE A | 5 | 4 | 1 | 2 |
| 02/02/2020 | 10:00:00 | STORE 1 | LANE A | 6 | 1 | 5 | 7 |
| 02/02/2020 | 11:00:00 | STORE 1 | LANE A | 1 | 7 | -6 | 1 |
| 02/02/2020 | 12:00:00 | STORE 1 | LANE A | 10 | 1 | 9 | 10 |
| 02/02/2020 | 13:00:00 | STORE 1 | LANE A | 6 | 1 | 5 | 15 |
| 02/02/2020 | 14:00:00 | STORE 1 | LANE A | 0 | 0 | 0 | 15 |
| 02/02/2020 | 15:00:00 | STORE 1 | LANE B | 10 | 9 | 1 | 1 |
| 02/02/2020 | 16:00:00 | STORE 1 | LANE B | 7 | 2 | 5 | 6 |
| 03/02/2020 | 17:00:00 | STORE 1 | LANE B | 5 | 3 | 2 | 8 |
| 03/02/2020 | 18:00:00 | STORE 1 | LANE B | 3 | 0 | 3 | 11 |
| 03/02/2020 | 19:00:00 | STORE 2 | LANE C | 7 | 0 | 7 | 7 |
| 03/02/2020 | 20:00:00 | STORE 2 | LANE C | 6 | 2 | 4 | 11 |
| 03/02/2020 | 21:00:00 | STORE 2 | LANE C | 10 | 8 | 2 | 13 |
| 03/02/2020 | 22:00:00 | STORE 2 | LANE C | 2 | 1 | 1 | 14 |
| 03/02/2020 | 23:00:00 | STORE 2 | LANE C | 8 | 1 | 7 | 21 |
| 03/02/2020 | 00:00:00 | STORE 2 | LANE C | 0 | 1 | -1 | 20 |
| 03/02/2020 | 01:00:00 | STORE 2 | LANE C | 8 | 1 | 7 | 27 |
| 03/02/2020 | 02:00:00 | STORE 2 | LANE C | 1 | 1 | 0 | 27 |

I noticed a slight error in the previous table; this one is correct.
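The expected CUMTOTAL column above can be reproduced with a short Python sketch (an illustration outside Power BI, not a DAX solution): keep one running sum per (STORE, LANE) pair and add each row's DIFF in the order the rows are listed. It assumes the rows are already in chronological order. Note that the LANE B total carries over from 02/02/2020 to 03/02/2020, so the expected output does not reset at midnight.

```python
from collections import defaultdict

# (STORE, LANE, DIFF) from the corrected sample table, in the order listed.
rows = [
    ("STORE 1", "LANE A", 1), ("STORE 1", "LANE A", 1), ("STORE 1", "LANE A", 5),
    ("STORE 1", "LANE A", -6), ("STORE 1", "LANE A", 9), ("STORE 1", "LANE A", 5),
    ("STORE 1", "LANE A", 0),
    ("STORE 1", "LANE B", 1), ("STORE 1", "LANE B", 5), ("STORE 1", "LANE B", 2),
    ("STORE 1", "LANE B", 3),
    ("STORE 2", "LANE C", 7), ("STORE 2", "LANE C", 4), ("STORE 2", "LANE C", 2),
    ("STORE 2", "LANE C", 1), ("STORE 2", "LANE C", 7), ("STORE 2", "LANE C", -1),
    ("STORE 2", "LANE C", 7), ("STORE 2", "LANE C", 0),
]


def cumulative_totals(rows):
    """Running sum of DIFF within each (store, lane) group, in row order."""
    totals = defaultdict(int)  # current running sum per (store, lane)
    result = []
    for store, lane, diff in rows:
        totals[(store, lane)] += diff
        result.append(totals[(store, lane)])
    return result


print(cumulative_totals(rows))
# → [1, 2, 7, 1, 10, 15, 15, 1, 6, 8, 11, 7, 11, 13, 14, 21, 20, 27, 27]
```

The printed list matches the CUMTOTAL column row for row, which confirms that the grouping is (store, lane) and that the sum is not restarted each day.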


@AnnaSA see the attached solution: a table called Table (ignore the other tables in the pbix) contains your sample data, and a table visual shows the running total. I did it two ways, so you can pick and choose.


Thank you VERY much for the help, @parry2k. I really appreciate it!!

When I try that measure, the table visual crashes, and the store (DSTGRP) is not included either.

Here is a snapshot of the actual table (filtered to a few days and a few stores).

PICK.LOAD_DIFF = Picking_HUs - Loading_HUs - OUT_HUs

The running total (RT) has to be by hour, by day, by lane and by DSTGRP, which makes it difficult for me to find a solution 😞

Do you know how I can create a calculated column in this table for the running total of PICK.LOAD_DIFF?
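A quick Python check of the PICK.LOAD_DIFF definition (Picking_HUs - Loading_HUs - OUT_HUs) against three rows copied from the sample table posted in this thread. `pick_load_diff` is a hypothetical helper name used only for illustration.

```python
def pick_load_diff(picking_hus: int, loading_hus: int, out_hus: int) -> int:
    """PICK.LOAD_DIFF = Picking_HUs - Loading_HUs - OUT_HUs."""
    return picking_hus - loading_hus - out_hus


# (Picking_HUs, Loading_HUs, OUT_HUs, PICK.LOAD_DIFF) taken from the sample table
samples = [
    (2, 10, 0, -8),   # 2019/10/01 01:00, GC04, AA016
    (4, 0, 1, 3),     # 2019/10/01 06:00, GC04, JJ012
    (0, 17, 0, -17),  # 2019/10/02 19:00, GC04, AA016
]

for picking, loading, out, expected in samples:
    assert pick_load_diff(picking, loading, out) == expected
print("all sample rows match")
```

Because the value can be negative (units leaving the lane), the running total can go down as well as up.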


| CONFIRMATION_TIME.HOUR | DSTGRP | LANE_PICKING | Picking_HUs | Loading_HUs | OUT_HUs | PICK.LOAD_DIFF |
|---|---|---|---|---|---|---|
| 2019/10/01 00:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/01 00:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 00:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/01 00:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 01:00 | GC04 | JJ012 | 1 | 1 | 0 | 0 |
| 2019/10/01 01:00 | GC04 | AA016 | 2 | 10 | 0 | -8 |
| 2019/10/01 01:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/01 01:00 | GC02 | MM065 | 2 | 0 | 0 | 2 |
| 2019/10/01 02:00 | GC04 | AA016 | 1 | 1 | 0 | 0 |
| 2019/10/01 02:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 02:00 | GC04 | JJ012 | 2 | 0 | 0 | 2 |
| 2019/10/01 02:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 03:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/01 03:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 03:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 03:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 04:00 | GC04 | AA016 | 4 | 0 | 0 | 4 |
| 2019/10/01 04:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/01 04:00 | GC04 | JJ012 | 2 | 0 | 0 | 2 |
| 2019/10/01 04:00 | GC02 | MM065 | 3 | 0 | 0 | 3 |
| 2019/10/01 05:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/01 05:00 | GC02 | BB037 | 2 | 0 | 0 | 2 |
| 2019/10/01 05:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 05:00 | GC02 | MM065 | 1 | 0 | 0 | 1 |
| 2019/10/01 06:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/01 06:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/01 06:00 | GC04 | JJ012 | 4 | 0 | 1 | 3 |
| 2019/10/01 06:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 07:00 | GC02 | BB037 | 1 | 0 | 1 | 0 |
| 2019/10/01 07:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/01 07:00 | GC04 | AA016 | 1 | 0 | 1 | 0 |
| 2019/10/01 07:00 | GC02 | MM065 | 1 | 0 | 0 | 1 |
| 2019/10/01 08:00 | GC04 | AA016 | 3 | 0 | 1 | 2 |
| 2019/10/01 08:00 | GC02 | BB037 | 3 | 0 | 0 | 3 |
| 2019/10/01 08:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 08:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 09:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/01 09:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/01 09:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 09:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 10:00 | GC04 | AA016 | 2 | 0 | 0 | 2 |
| 2019/10/01 10:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/01 10:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 10:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 11:00 | GC04 | AA016 | 2 | 0 | 0 | 2 |
| 2019/10/01 11:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 11:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/01 11:00 | GC02 | MM065 | 1 | 0 | 0 | 1 |
| 2019/10/01 12:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/01 12:00 | GC02 | BB037 | 1 | 0 | 1 | 0 |
| 2019/10/01 12:00 | GC04 | JJ012 | 3 | 0 | 0 | 3 |
| 2019/10/01 12:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 13:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 13:00 | GC04 | AA016 | 0 | 1 | 0 | -1 |
| 2019/10/01 13:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/01 13:00 | GC02 | MM065 | 0 | 0 | 1 | -1 |
| 2019/10/01 14:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/01 14:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 14:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 17:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/01 17:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 17:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 17:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 18:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 18:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/01 19:00 | GC04 | AA016 | 2 | 0 | 0 | 2 |
| 2019/10/01 19:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 20:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/01 20:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 20:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 20:00 | GC02 | MM065 | 0 | 3 | 0 | -3 |
| 2019/10/01 21:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/01 21:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/01 21:00 | GC02 | BB037 | 0 | 4 | 0 | -4 |
| 2019/10/01 21:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 22:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/01 22:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 22:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/01 22:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/01 23:00 | GC04 | AA016 | 1 | 2 | 0 | -1 |
| 2019/10/01 23:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/01 23:00 | GC04 | JJ012 | 1 | 1 | 0 | 0 |
| 2019/10/01 23:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 00:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/02 00:00 | GC02 | BB037 | 2 | 0 | 0 | 2 |
| 2019/10/02 00:00 | GC04 | JJ012 | 2 | 0 | 0 | 2 |
| 2019/10/02 00:00 | GC02 | MM065 | 1 | 0 | 0 | 1 |
| 2019/10/02 01:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/02 01:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 01:00 | GC04 | JJ012 | 2 | 0 | 0 | 2 |
| 2019/10/02 01:00 | GC02 | MM065 | 1 | 0 | 0 | 1 |
| 2019/10/02 02:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/02 02:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 02:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 04:00 | GC04 | AA016 | 5 | 0 | 0 | 5 |
| 2019/10/02 04:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 04:00 | GC04 | JJ012 | 4 | 0 | 0 | 4 |
| 2019/10/02 04:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 05:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/02 05:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 05:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 05:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 06:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/02 06:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/02 06:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 06:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 07:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 07:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/02 07:00 | GC04 | AA016 | 2 | 0 | 0 | 2 |
| 2019/10/02 07:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 08:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/02 08:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 08:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 08:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 09:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/02 09:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/02 09:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 09:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 10:00 | GC04 | AA016 | 3 | 0 | 0 | 3 |
| 2019/10/02 10:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 10:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 10:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 11:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/02 11:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 11:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 11:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 12:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/02 12:00 | GC04 | AA016 | 1 | 0 | 0 | 1 |
| 2019/10/02 12:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 12:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 13:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/02 13:00 | GC04 | AA016 | 2 | 0 | 1 | 1 |
| 2019/10/02 13:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 13:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 14:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/02 14:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/02 14:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 14:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 15:00 | GC04 | JJ012 | 0 | 0 | 0 | 0 |
| 2019/10/02 15:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/02 15:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 15:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 16:00 | GC04 | AA016 | 2 | 0 | 0 | 2 |
| 2019/10/02 16:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/02 17:00 | GC02 | MM065 | 1 | 0 | 0 | 1 |
| 2019/10/02 18:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 18:00 | GC04 | AA016 | 0 | 0 | 0 | 0 |
| 2019/10/02 18:00 | GC02 | BB037 | 1 | 0 | 0 | 1 |
| 2019/10/02 18:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
| 2019/10/02 19:00 | GC02 | BB037 | 0 | 0 | 0 | 0 |
| 2019/10/02 19:00 | GC04 | JJ012 | 1 | 3 | 0 | -2 |
| 2019/10/02 19:00 | GC04 | AA016 | 0 | 17 | 0 | -17 |
| 2019/10/02 20:00 | GC04 | JJ012 | 1 | 0 | 0 | 1 |
| 2019/10/02 20:00 | GC02 | MM065 | 0 | 0 | 0 | 0 |
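A minimal Python sketch of the running total requested for this table (an illustration outside Power BI, not the DAX answer): it sums PICK.LOAD_DIFF per (DSTGRP, LANE_PICKING) pair in CONFIRMATION_TIME.HOUR order. It assumes the timestamps keep the zero-padded `yyyy/mm/dd hh:mm` text form shown here, so that sorting the strings also sorts them chronologically. Only the GC04 rows for the first four hours of 2019/10/01 are used, to keep it short.

```python
from collections import defaultdict

# (CONFIRMATION_TIME.HOUR, DSTGRP, LANE_PICKING, PICK.LOAD_DIFF), copied from
# the sample table (GC04 rows, first four hours of 2019/10/01), in table order.
rows = [
    ("2019/10/01 00:00", "GC04", "JJ012", 1),
    ("2019/10/01 00:00", "GC04", "AA016", 3),
    ("2019/10/01 01:00", "GC04", "JJ012", 0),
    ("2019/10/01 01:00", "GC04", "AA016", -8),
    ("2019/10/01 02:00", "GC04", "AA016", 0),
    ("2019/10/01 02:00", "GC04", "JJ012", 2),
    ("2019/10/01 03:00", "GC04", "AA016", 0),
    ("2019/10/01 03:00", "GC04", "JJ012", 1),
]


def running_totals(rows):
    """Map (hour, dstgrp, lane) to the running PICK.LOAD_DIFF of its group."""
    totals = defaultdict(int)  # running sum per (DSTGRP, LANE_PICKING)
    result = {}
    # Zero-padded "yyyy/mm/dd hh:mm" strings sort chronologically,
    # so sorting the tuples puts every group's rows in time order.
    for hour, dstgrp, lane, diff in sorted(rows):
        totals[(dstgrp, lane)] += diff
        result[(hour, dstgrp, lane)] = totals[(dstgrp, lane)]
    return result


for (hour, dstgrp, lane), total in running_totals(rows).items():
    print(hour, dstgrp, lane, total)
```

Sorting once and accumulating per group is a single pass after the sort; the total for AA016 drops to -5 at 01:00 because of the -8 in that hour, while JJ012 keeps its own independent sum.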


Hi @AnnaSA

Kindly check the results below; pbix attached.

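```dax
Measure =
CALCULATE (
    SUM ( 'Table'[PICK.LOAD_DIFF] ),
    // rows up to the latest date-hour (and hour) in the current context
    FILTER (
        ALL ( 'Table' ),
        [CONFIRMATION_TIME.HOUR] <= MAX ( 'Table'[CONFIRMATION_TIME.HOUR] )
            && [Hour] <= MAX ( [Hour] )
    ),
    // limit the sum to the lane(s) and DSTGRP(s) currently in context
    VALUES ( 'Table'[LANE_PICKING] ),
    VALUES ( 'Table'[DSTGRP ] )
)
```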

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


Oh my goodness, @v-diye-msft, THANK YOU so much. It does work.

Unfortunately, for my analysis I need this as a calculated column, since I will have to take its maximum, find the 90th percentile, etc.

Do you know what changes I can make to turn it into a calculated column?
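Once the running total is stored per row, the maximum and 90th percentile are ordinary aggregations over it. The Python sketch below computes both per lane from the expected CUMTOTAL values in the corrected sample table. It uses `statistics.quantiles(..., method="inclusive")`, which interpolates linearly between ranks; that this matches DAX `PERCENTILE.INC` exactly is an assumption worth verifying on real data.

```python
import statistics

# Expected CUMTOTAL values per lane, from the corrected sample table.
cumtotal_by_lane = {
    "LANE A": [1, 2, 7, 1, 10, 15, 15],
    "LANE B": [1, 6, 8, 11],
    "LANE C": [7, 11, 13, 14, 21, 20, 27, 27],
}


def summarize(values):
    """Return (max, 90th percentile) of one lane's running totals."""
    # quantiles(n=10) returns the 9 decile cut points; index 8 is the 90th percentile.
    p90 = statistics.quantiles(values, n=10, method="inclusive")[8]
    return max(values), p90


for lane, values in cumtotal_by_lane.items():
    peak, p90 = summarize(values)
    print(f"{lane}: max={peak}, p90={p90}")
```

For LANE B the 90th percentile falls between the third and fourth sorted values (8 and 11), giving 8 + 0.7 × 3 = 10.1.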


Hi @AnnaSA

Please use this one:

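```dax
Column =
CALCULATE (
    SUM ( 'Table'[PICK.LOAD_DIFF] ),
    FILTER (
        // rows sharing the current row's LANE_PICKING and DSTGRP ...
        ALLEXCEPT ( 'Table', 'Table'[LANE_PICKING], 'Table'[DSTGRP ] ),
        // ... at or before the current row's date-hour (and hour)
        [CONFIRMATION_TIME.HOUR] <= EARLIER ( 'Table'[CONFIRMATION_TIME.HOUR] )
            && [Hour] <= EARLIER ( [Hour] )
    )
)
```

Pbix attached.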
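To see what the calculated column computes, here is a Python sketch that mirrors its logic row by row: for the current row, keep every row with the same LANE_PICKING and DSTGRP (the ALLEXCEPT part) whose CONFIRMATION_TIME.HOUR is at or before the current row's value (the EARLIER comparison), then sum PICK.LOAD_DIFF. It is an illustration, not Power BI code: it compares only the full date-hour value and leaves out the separate `[Hour]` condition, whose definition is not shown in the thread. The rows are the GC02 entries for the first three hours of 2019/10/01 from the sample table.

```python
from typing import NamedTuple


class Row(NamedTuple):
    hour: str    # CONFIRMATION_TIME.HOUR as "yyyy/mm/dd hh:mm"
    dstgrp: str  # DSTGRP
    lane: str    # LANE_PICKING
    diff: int    # PICK.LOAD_DIFF


table = [
    Row("2019/10/01 00:00", "GC02", "BB037", 1),
    Row("2019/10/01 00:00", "GC02", "MM065", 0),
    Row("2019/10/01 01:00", "GC02", "BB037", 1),
    Row("2019/10/01 01:00", "GC02", "MM065", 2),
    Row("2019/10/01 02:00", "GC02", "BB037", 0),
    Row("2019/10/01 02:00", "GC02", "MM065", 0),
]


def running_column(table):
    """One value per row, like a calculated column."""
    column = []
    for current in table:  # plays the role of the row EARLIER refers to
        total = sum(
            other.diff
            for other in table
            if other.lane == current.lane        # same LANE_PICKING
            and other.dstgrp == current.dstgrp   # same DSTGRP
            and other.hour <= current.hour       # at or before the current hour
        )
        column.append(total)
    return column


print(running_column(table))
# → [1, 0, 2, 2, 2, 2]
```

Because every row rescans its whole group, this row-by-row form is quadratic in the group size; the sort-and-accumulate approach produces the same numbers in a single pass.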

