- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Power query tricky question

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Power query tricky question

Dear community.

I have a table whose coloumns are below

| Source.Name | Fiscal year | Account | AccountChannel | AccountSegment | AccountSubSegment | AccountSector | AccountSubSector | 1Area | 1Region | GCSP | 1Country | ServiceLine | SubServiceLine | TER_FYTD_USD | TER_FYTD_Constant | RevenuePlanFY_Constant | NER_FYTD_Constant | MarginFYTD_Constant | MarginPFYTD_Constant | TER_PFYTD_Constant | SaleFYTD_constant | PipelineWeightedOpen_constant | Region |

I need to create additional coloumn with a condition that, if all of my row data matches except for category, then i want that to show it as duplicate

For instance, if there are 3 duplicate rows, then it should show 2 rows as duplicate and 1 row as original.

let me know how to achieve this in power query

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

A solution would be the one attached below, however I am not that sure how doable it is considering you have a lot of columns by which to group by.

Steps would be to :

1. create an index for the rows in your table (ideal for the rows to be sorted so that the 1st row is the original one - in case it matters or there is a rule for this)

2. group by all columns except the category one and return count rows and also the previously created index from the new table created - so you know which rows are duplicated (the ones that have count > 1)

3. group again by the new count column and all the column except the category one and create min index inside each duplicated rows.

4. create a new column by comparing initial index with min index inside a group to check which one is the 1st duplicated row and assign text either Duplicate or Original.

5. Remove not needed fields

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUUoEYkelWJ1oJSMgKwmIncA8YyArGYidwTyYSicUHlAuFgA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Column1 = _t, Column2 = _t, Column3 = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Column1", Int64.Type}, {"Column2", type text}, {"Column3", type text}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 0, 1),

#"Grouped Rows" = Table.Group(#"Added Index", {"Column1", "Column2"}, {{"Count", each Table.RowCount(_), type number}, {"Details", each _, type table}}),

#"Subtracted from Column" = Table.TransformColumns(#"Grouped Rows", {{"Count", each _ - 1, type number}}),

#"Expanded Details" = Table.ExpandTableColumn(#"Subtracted from Column", "Details", {"Column3", "Index"}, {"Details.Column3", "Details.Index"}),

#"Renamed Columns" = Table.RenameColumns(#"Expanded Details",{{"Details.Index", "Duplicates Index"}}),

#"Grouped Rows1" = Table.Group(#"Renamed Columns", {"Column1", "Column2", "Count"}, {{"Min", each List.Min([Duplicates Index]), type number}, {"Details", each _, type table}}),

#"Expanded Details1" = Table.ExpandTableColumn(#"Grouped Rows1", "Details", {"Details.Column3", "Duplicates Index"}, {"Details.Details.Column3", "Details.Duplicates Index"}),

#"Renamed Columns1" = Table.RenameColumns(#"Expanded Details1",{{"Min", "Min Index"}, {"Details.Duplicates Index", "Index"}}),

#"Added Conditional Column" = Table.AddColumn(#"Renamed Columns1", "Is Duplicate", each if [Min Index] = [Index] then "Original" else "Duplicate"),

#"Removed Columns" = Table.RemoveColumns(#"Added Conditional Column",{"Index", "Min Index", "Count"})

in

#"Removed Columns"

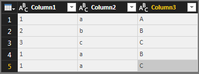

I tested on the following data set:

where Column1 and Column 2 can be duplicated rows, while Column3 can have different values (like your mentioned category column)

Hope it helps,

ElenaN

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ElenaN @Anonymous

superhelpful & both the solution works

i have another tricky question on the same topic

i have a table "cross-Domain extract file" and 3 coloumns stated below

1) “ClientSectorDesc”

2) "Sector as per Account list"

3) "Account Name_Lookup"

In the table "cross-Domain extract file"

Filter Banking &Capital market in coloumn “ClientSectorDesc” &

Select Null in “Sector as per Account list” column - Replace Null with BCM

and also With same filters on Replace null in coloumn“Account Name_Lookup” with Others.

after the changes made, unfilter everything (like we do in excel)

how do i achieve this, i wasn't sucessfull using this find and replace method

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

thanks

Not sure, if this helps., i have 3 coloumns as mentioned in my post

can you let me know the steps or can you replicate this and share the pbix?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As a rule of thumb, each "row" in powerquery cannot reference to "other rows". Especially as there's not the concept of "next" and "previous" rows.

BUT if your objective is to remove duplicate rows, you can simply remove the Category column and then group data.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

so you are telling there is no way we can achieve this?

my objective is to identify those rows which are duplicate and show that in different coloumn as "Duplicate" or "Original" but i don't want to remove it for obvious reasons

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, then for doing something like that I suggest DAX as you can easily create a calculated column with a FILTER on all the rows of a group and then use a RANK to sort them. I don't know exactly how you can decide which one of the three is the original and which one is a duplicate, though.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Can you help with the DAX? i am not familiar with DAX.

also on the duplicate, it doesn't matter at all, for instance you have 3 rows repeating the values, we can mark 2 rows as duplicate & it doesn't matter which row

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe Elena's solutions will work, anyway

in DAX create a calclulated column

Ordinal =

VAR thisColumn1=YourTable[Column1]

VAR thisColumn2=YourTable[Column2]

-- create a var for each column that you have to group by

RETURN

RANKX(

FILTER(YourTable,

YourTable[Column1]=thisColumn1 &&

YourTable[Column2]=thisColumn2

-- && repeat for each variable

), YourTable[Category]

)

This will create a number from 1 to N for each row of the same "group". Then you add a secondary conditional column where if this column is 1 then is the valid one otherwise is duplicate.

There is also another solution that should provide exactly your result

IsDuplicate =

VAR thisColumn1 = YourTable[Column1]

VAR thisColumn2 = YourTable[Column2]

-- create a var for each column that you have to group by

RETURN

IF (

CALCULATE (

MIN ( YourTable[Category] ),

FILTER (

ALL ( YourTable ),

YourTable[Column1] = thisColumn1

&& YourTable[Column2] = thisColumn2

-- repeat for all variables

)

)

= MIN ( YourTable[Category] ),

"Original",

"Duplicate"

)

These solutions however won't probably work if your categories might be the same as rankx and max will find the same values. If so follow Elena's suggestion and create an Index column, and RANKX on that one instead of the category

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 109 | |

| 98 | |

| 77 | |

| 66 | |

| 54 |

| User | Count |

|---|---|

| 144 | |

| 104 | |

| 100 | |

| 86 | |

| 64 |