- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Power Query M - keeping just top rows based on...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Power Query M - keeping just top rows based on few columns

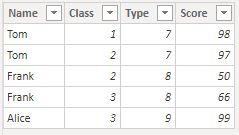

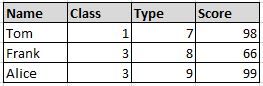

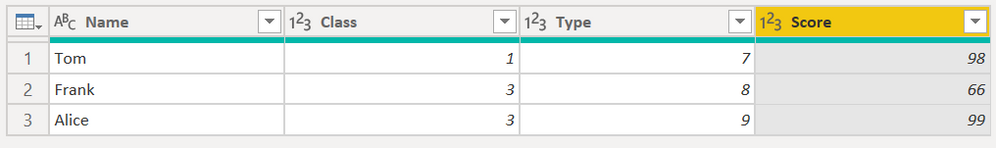

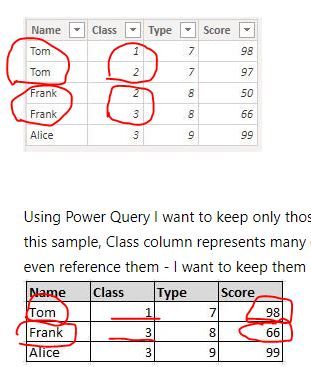

This is my data:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETaGCpuZgYUdczKTU6HCliBsqRQbCwA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}})

in

#"Changed Type"

Using Power Query I want to keep only those rows which have max Score for every Name and Type. In this sample, Class column represents many other columns which I have, but I don't want to affect or even reference them - I want to keep them as is. This is how the resulting table should look:

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you - @MFelix , @FrankAT

I made a solution myself, merging your ideas - I added an index column after performing a sort (MFelix idea). Later I made a join (FrankAT idea) based on the new index column.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETYGqQRiMzOwsGNOZnIqkrClpVJsLAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Name", Order.Ascending}, {"Score", Order.Ascending}}),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "Index", 1, 1, Int64.Type),

#"Grouped Rows" = Table.Group(#"Added Index", {"Name", "Type"}, {{"IndexOfMaxScore", each List.Max([Index]), type number}}),

#"Merged Queries" = Table.NestedJoin(#"Added Index", {"Index"}, #"Grouped Rows", {"IndexOfMaxScore"}, "MergeResults", JoinKind.Inner),

#"Removed Columns" = Table.RemoveColumns(#"Merged Queries",{"Index", "MergeResults"})

in

#"Removed Columns"

@v-lili6-msft - thank you! You are right, I did not know I could do it! I changed

#"Merged Queries" = Table.NestedJoin(#"Whole Table", {"Score"}, #"Grouped Rows", {"Max"}, "Whole Table", JoinKind.Inner),with

#"Merged Queries" = Table.NestedJoin(#"Changed Type", {"Score", "Name", "Type"}, #"Grouped Rows", {"Max", "Name", "Type"}, "Whole Table", JoinKind.Inner),

So, the full working version then would be this:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETYGqQRiMzOwsGNOZnIqkrClpVJsLAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name", "Type"}, {{"Max", each List.Max([Score]), type nullable number}}),

#"Merged Queries" = Table.NestedJoin(#"Changed Type", {"Score", "Name", "Type"}, #"Grouped Rows", {"Max", "Name", "Type"}, "Whole Table", JoinKind.Inner),

#"Removed Columns" = Table.RemoveColumns(#"Merged Queries",{"Whole Table"})

in

#"Removed Columns"

Thank you all!!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you - @MFelix , @FrankAT

I made a solution myself, merging your ideas - I added an index column after performing a sort (MFelix idea). Later I made a join (FrankAT idea) based on the new index column.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETYGqQRiMzOwsGNOZnIqkrClpVJsLAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Name", Order.Ascending}, {"Score", Order.Ascending}}),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "Index", 1, 1, Int64.Type),

#"Grouped Rows" = Table.Group(#"Added Index", {"Name", "Type"}, {{"IndexOfMaxScore", each List.Max([Index]), type number}}),

#"Merged Queries" = Table.NestedJoin(#"Added Index", {"Index"}, #"Grouped Rows", {"IndexOfMaxScore"}, "MergeResults", JoinKind.Inner),

#"Removed Columns" = Table.RemoveColumns(#"Merged Queries",{"Index", "MergeResults"})

in

#"Removed Columns"

@v-lili6-msft - thank you! You are right, I did not know I could do it! I changed

#"Merged Queries" = Table.NestedJoin(#"Whole Table", {"Score"}, #"Grouped Rows", {"Max"}, "Whole Table", JoinKind.Inner),with

#"Merged Queries" = Table.NestedJoin(#"Changed Type", {"Score", "Name", "Type"}, #"Grouped Rows", {"Max", "Name", "Type"}, "Whole Table", JoinKind.Inner),

So, the full working version then would be this:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETYGqQRiMzOwsGNOZnIqkrClpVJsLAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name", "Type"}, {{"Max", each List.Max([Score]), type nullable number}}),

#"Merged Queries" = Table.NestedJoin(#"Changed Type", {"Score", "Name", "Type"}, #"Grouped Rows", {"Max", "Name", "Type"}, "Whole Table", JoinKind.Inner),

#"Removed Columns" = Table.RemoveColumns(#"Merged Queries",{"Whole Table"})

in

#"Removed Columns"

Thank you all!!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Zyg_D

with your sample data I get the following solution:

// Query

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETaGCpuZgYUdczKTU6HCliBsqRQbCwA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name"}, {{"Max", each List.Max([Score]), type nullable number}}),

#"Whole Table" = #"Changed Type",

#"Merged Queries" = Table.NestedJoin(#"Whole Table", {"Score"}, #"Grouped Rows", {"Max"}, "Whole Table", JoinKind.Inner),

#"Removed Columns" = Table.RemoveColumns(#"Merged Queries",{"Whole Table"})

in

#"Removed Columns"

With kind regards from the town where the legend of the 'Pied Piper of Hamelin' is at home

FrankAT (Proud to be a Datanaut)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@FrankAT - Thank you for the answer. I like that it does not reference the Class column. However it has 2 issues. I was able to fix one, but not the second.

1. Since I wanted "to keep only those rows which have max Score for every Name and Type", I changed

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name"}, {{"Max", each List.Max([Score]), type nullable number}}),

with

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name", "Type"}, {{"Max", each List.Max([Score]), type nullable number}}),

2. I think the major problem is that while merging the code takes into account only the max value. If in two separate Name and Type combinations there were identical max values, the code would fail.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @Zyg_D

I think FrankAT's solution should work for you.

1.Since I wanted "to keep only those rows which have max Score for every Name and Type", I changed

In your first post, you just want to get the max Score for every Name not group by Type

2. If in two separate Name and Type combinations there were identical max values, the code would fail.

When you merge, just add more key conditional in the code manually, then it will work.

If you still have the problem, please share more sample data and your expected output.

Regards,

Lin

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Zyg_D ,

You need to do a group by but then change the code of the group to a index column and then filter the values for number 1.

So when you do a group by you get the following code:

= Table.Group(#"Changed Type", {"Name"}, {{"Count", each _, type table [Name=nullable text, Class=nullable number, Type=nullable number, Score=nullable number]}})In this code the part after the each_ needs to be replace with the following code:

Table.AddIndexColumn(Table.Sort(_,{{"Score", Order.Descending}} ), "Index",1,1)This code is sorting the information based on the score and adding and index so the final code will be:

= Table.Group(#"Changed Type", {"Name"}, {{"MAX_Score", each Table.AddIndexColumn(Table.Sort(_,{{"Score", Order.Descending}} ), "Index",1,1)}})

Now just expand the column and filter by index = 1.

Check full code below:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCsnPVdJRMgRicyC2tFCK1YEJGsEEzcGCbkWJedlQYQsgNjVAETaGCpuZgYUdczKTU6HCliBsqRQbCwA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Class = _t, Type = _t, Score = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Class", Int64.Type}, {"Type", Int64.Type}, {"Score", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name"}, {{"MAX_Score", each Table.AddIndexColumn(Table.Sort(_,{{"Score", Order.Descending}} ), "Index",1,1)}}),

#"Expanded MAX_Score" = Table.ExpandTableColumn(#"Grouped Rows", "MAX_Score", {"Class", "Type", "Score", "Index"}, {"Class", "Type", "Score", "Index"}),

#"Filtered Rows" = Table.SelectRows(#"Expanded MAX_Score", each ([Index] = 1)),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows",{"Index"})

in

#"Removed Columns"

Regards

Miguel Félix

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

Check out my blog: Power BI em Português- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@MFelix - your answer has 1 problem which I fixed and another which I cannot fix.

1. Since I wanted "to keep only those rows which have max Score for every Name and Type", I changed

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name"}, {{"MAX_Score", each Table.AddIndexColumn(Table.Sort(_,{{"Score", Order.Descending}} ), "Index",1,1)}}),

#"Expanded MAX_Score" = Table.ExpandTableColumn(#"Grouped Rows", "MAX_Score", {"Class", "Type", "Score", "Index"}, {"Class", "Type", "Score", "Index"}),with

#"Grouped Rows" = Table.Group(#"Changed Type", {"Name", "Type"}, {{"MAX_Score", each Table.AddIndexColumn(Table.Sort(_,{{"Score", Order.Descending}} ), "Index",1,1)}}),

#"Expanded MAX_Score" = Table.ExpandTableColumn(#"Grouped Rows", "MAX_Score", {"Class", "Score", "Index"}, {"Class", "Score", "Index"}),

2. I did not want to reference the Class column, because if I do, I will have to always come back to this code in case my data gets more fields. I see references to this column which is kind of a problem.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Zyg_D ,

Not really understangin what you mean in the point 2. "I did not want to reference the Class column, because if I do, I will have to always come back to this code in case my data gets more fields. I see references to this column which is kind of a problem. "

The reference to class that you have is only the presentation of the column when you expand the table you can take it out from the expande table step.

Or do you have any additional question on this, the dataset that you provide is very small so it's dificcult to get the full expectations in terms of results to present or not.

Regards

Miguel Félix

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

Check out my blog: Power BI em Português- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@MFelix wrote:Not really understangin what you mean in the point 2. "I did not want to reference the Class column, because if I do, I will have to always come back to this code in case my data gets more fields. I see references to this column which is kind of a problem. "

My original data comes from Power BI dataflow. In the Power Query, there are no references to any column name in the Source step. There is no Change Type step. So, I get all the columns which are supplied by the dataflow. If more columns are added to the dataflow, I automatically get them. In the subsequent steps, if I mention anywhere the exact column names (references), I will be limited on actions which affect only those specific columns. Any additional columns which were added to the dataflow after I used your code will not appear in my final table unless I correct the code. I do not want to go back to the code all the time when more columns are added to the dataflow, so I need a solution without explicit column names in the code.

@MFelix wrote:The reference to class that you have is only the presentation of the column when you expand the table you can take it out from the expande table step.

I want to be able to "expand" or do anything what is required to get the final desired table, but without telling the exact column names in the code. If I remove that column name ("Class"), I simply do not get that column, which is not what I want - I need that column.

Maybe there are other solutions which do not use expansion, because I don't think I can use this functionality, as it simply requires column names to be hardcoded...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Zyg_D ,

I believe that @ImkeF can help you out, she's the reference on M language in the community.

@ImkeF Can you take a look at this.

Regards

Miguel Félix

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

Check out my blog: Power BI em PortuguêsHelpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 110 | |

| 94 | |

| 82 | |

| 66 | |

| 58 |

| User | Count |

|---|---|

| 151 | |

| 121 | |

| 104 | |

| 87 | |

| 67 |