- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Measure Returning Dynamic Last Transaction for...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Measure Returning Dynamic Last Transaction for Customer ID based on Current Date in Filter Context

Hi everyone,

I'm currently trying to solve a problem that comes up in various forms at my work. To simplify, I have a table of transactions by customer ID or some other type of ID, each record with a customer/other ID, transaction date, transaction type ID, and a cumulative transaction number containing an integer denoting the "nth" transaction for each customer.

My problems typically require one of two solutions, a table or a scalar value.

- The table solution requires returning a table with a series of records that contains the last transaction for each customer based on a dynamic date range coming from the filter context (NOT the absolute last transaction for each customer) and each of those last transactions meets certain criteria—typically a transaction type flag of some sort.

- The scalar value solution typically involves returning a count of those records in the above mentioned table or a DISTINCTCOUNT of customer IDs . This measure would be then put on a visual like a matrix or a line chart.

It is essentially a 'for each' type of problem. I've struggled with this concept in DAX, especially when working with grouping and nested filter/iterator contexts.

From a simplified model perspective, our table is CustomerTransactions and our columns are Customer_id, TransactionAddedDate, CustomerActivityCode_id, and Transaction_num.

The data would look something like this, sorted by TransactionAddedDate (here I sorted by Customer_id so that you can see how customers have multiple transactions):

So in the filter context date range (think a matrix with year-month or year-month-day), for each Customer_id I want to:

- Derive a table where TransactionAddedDate >= FIRSTDATE(Date[Date]) && TransactionAddedDate <= LASTDATE(Date[Date])

- Reduce that set to records containing MAX(Transaction_num) for each Customer_id IF AND ONLY IF the CustomerActivityCode_id is certain value—let's say 2. So logically and implicitly something like AND(IsLastCustomerTransaction_num = TRUE, CustomerActivityCode_id = 2)

- Count the number of records and/or Customer_ids for that reduced set.

I've tried the following and other attempts in DAX Studio to get a working table:

EVALUATE

VAR QueryStartDate = FIRSTDATE(Date[Date]) VAR QueryEndDate = LASTDATE(Date[Date]) RETURN CALCULATETABLE( SUMMARIZE( FILTER( SUMMARIZECOLUMNS( CustomerTransactions[Customer_id], CustomerTransactions[TransactionAddedDate], CustomerTransactions[CustomerActivityCode_id], "MaxCustomerTransNum", MAX(CustomerTransactions[Transaction_num]), "MaxCustomerActivityCode_id", MAX(CustomerTransactions[CustomerActivityCode_id]) ), CustomerTransactions[TransactionAddedDate] >= QueryStartDate && CustomerTransactions[TransactionAddedDate] <= QueryEndDate ), CustomerTransactions[Customer_id], "MaxCustomerTransNum", MAX(CustomerTransactions[Transaction_num]), "MaxCustomerActivityCode_id", MAX(CustomerTransactions[CustomerActivityCode_id]) ), USERELATIONSHIP(Date[Date], CustomerTransactions[TransactionAddedDate]), CustomerTransactions[CustomerActivityCode_id] = 2 )

I'm guessing the nested SUMMARIZE and SUMMARIZECOLUMNS are redundant to some degree; I was frustrated by this point and couldn't figure out if I was on the right track. I guess you can't filter by the virtual, non-instantiated columns like the <name> argument in SUMMARIZE and SUMMARIZECOLUMNS—please correct me if I am wrong! I get confused about what filters and calculations are happening at what particular layer and in what order.

I would appreciate any help and insight for this problem. Thank you!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In case anyone is interested, I believe I may have solved this problem on my own. I am in the process of verifying results so this solution is tentative at this point.

For the table solution:

MyTableAlgorithm =

VAR QueryStartDate = FIRSTDATE(Date[Date])

VAR QueryEndDate = LASTDATE(Date[Date])

RETURN

CALCULATETABLE (

SUMMARIZE (

FILTER (

SUMMARIZE ( // SUMMARIZECOLUMNS won't work here.

CustomerTransactions,

CustomerTransactions[Customer_id],

CustomerTransactions[TransactionAddedDate],

CustomerTransactions[CustomerActivityCode_id]

),

CustomerTransactions[TransactionAddedDate] >= QueryStartDate

&& CustomerTransactions[TransactionAddedDate] <= QueryEndDate

),

CustomerTransactions[Customer_id],

"MaxFeatureTransNum", MAX ( CustomerTransactions[num] ),

"MaxCustomerActivityCode_id", MAX ( CustomerTransactions[CustomerActivityCode_id] )

),

USERELATIONSHIP ( Date[Date], CustomerTransactions[TransactionAddedDate] ),

CustomerTransactions[CustomerActivityCode_id] = 2

)

I did read over at SQLBI.com that "A counterintuitive limitation in DAX is that you can group by extension columns, but you cannot perform meaningful calculations grouping by extension columns," thus confirming my suspicion that the additional arguments of SUMMARIZE or ADDCOLUMNS cannot be filtered against.

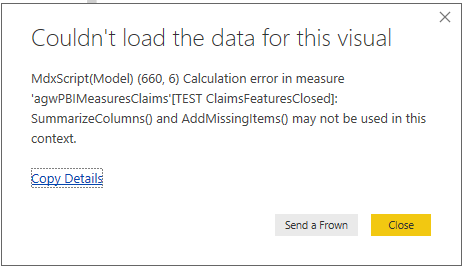

Marco gives a workaround but I haven't completely wrapped my head around it just yet. Further, there's been the addition of SUMMARIZECOLUMNS; my first attempts tried using this function (see first post) but on final implementation in Power BI it craps out with an error like this:

I thought the Microsoft Devs had fixed the issue where SUMMARIZECOLUMNS can't be used in a measure, but it may have something to do with layered filter contexts. Don't know.

Furthermore, after trying to work with that broken algorithm and fooling around in Power BI I ended getting some kind of corrupt memory error I've never seen before and haven't been able to replicate (I have no desire to attempt replicating it, either). I don't know if the two are related, but hey, there you go.

You'll notice in the above algorithm that the extension columns originally in the innermost SUMMARIZECOLUMNS of the first post have been removed. Their inclusion and omission don't seem to effect the outcome; this likely has to do with Marco's observations on lineage and virtual extension columns having no relationship with the physical data model.

Here's how I think the algorithm works: the innermost SUMMARIZE generates the initial set of data to work with. FILTER narrows the set to the dynamic date range set by the filter context. Somehow CALCULATETABLE works magic with its inverted order of evaluation with the outer SUMMARIZE statement. This is the one part I'm questioning whether or not it is calculating the result that I am expecting it to return. If CALCULATETABLE inverts evaluation order then is it imposing a CustomerTransactions[CustomerActivityCode_id] = 2 filter on the data from the start? This would not be the behavior I am looking for as it is functioning the same as a simple SQL WHERE clause. Pending the verification of my results I may need to invert the order somewhere or nest CALCULATETABLE functions. If you have any ideas please share!

The scalar solution:

Simply wrap the above in COUNTROWS:

MyScalarAlgorithm =

VAR QueryStartDate = FIRSTDATE(Date[Date])

VAR QueryEndDate = LASTDATE(Date[Date])

RETURN

COUNTROWS (

CALCULATETABLE (

SUMMARIZE (

FILTER (

SUMMARIZE (

CustomerTransactions,

CustomerTransactions[Customer_id],

CustomerTransactions[TransactionAddedDate],

CustomerTransactions[CustomerActivityCode_id]

),

CustomerTransactions[TransactionAddedDate] >= QueryStartDate

&& CustomerTransactions[TransactionAddedDate] <= QueryEndDate

),

CustomerTransactions[Customer_id],

"MaxFeatureTransNum", MAX ( CustomerTransactions[num] ),

"MaxCustomerActivityCode_id", MAX ( CustomerTransactions[CustomerActivityCode_id] )

),

USERELATIONSHIP ( Date[Date], CustomerTransactions[TransactionAddedDate] ),

CustomerTransactions[CustomerActivityCode_id] = 2

)

)

Please let me know if you see issues with my logic or understanding. I would love to learn from my errors and failures!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Everyone,

I've been in discussion with the folks over at SQLBI and here's a proposed solution using TREATAS to apply a filter between two virtual tables created in the same date context:

MyScalarAlgorithm = CALCULATE (

// Computes table containing last transaction for each Customer_id

// in the current filter context: VAR LastCustomerTransactionDate = ADDCOLUMNS ( VALUES ( CustomerTransactions[Customer_id] ), "MaxFeatureTransNum", CALCULATE ( MAX ( CustomerTransactions[Transaction_num] ) ) )

// Use the above VAR to establish filter context using TREATAS and computes

// the last activity status for the MAX(Transaction_num]) in the same date context: VAR LastCustomerTransactionState = CALCULATETABLE ( ADDCOLUMNS ( VALUES ( CustomerTransactions[Customer_id] ), "MaxCustomerActivityCode_id", CALCULATE ( MAX ( CustomerTransactions[CustomerActivityCode_id] ) ) ), TREATAS ( LastCustomerTransactionDate, CustomerTransactions[Customer_id], CustomerTransactions[Transaction_num] ) )

// Count records from the above table where the last status

// within the current date context is 2: VAR Result = COUNTROWS ( FILTER ( LastCustomerTransactionState, [MaxCustomerActivityCode_id] = 2 ) ) RETURN Result, USERELATIONSHIP ( PBIDate[Date], CustomerTransactions[TransactionAddedDate] ) )

// "Please note that all the variables are within a CALCULATE just to activate the

// right relationship for PBIDate, so the filter over time is applied everywhere.

// The evaluation is in multiple steps:

// 1) Compute the last transaction number for each customer

// 2) Use the result of 1) as filter context using TREATAS and compute the last status at that date

// (assuming that if there are multiple statuses in the same date, MAX is the way to go.

// If you assume there is only one state per date use VALUES instead of MAX,

// so if the assumption is wrong you will get an error – please note that the code currently breaks

// if I use VALUES, so there are different states in the same date for the same customer in at least one case.

// 3) Count of many of the customer states computed at 2) have a status equal to 2"

REMEMBER: our goal is to CALCULATE the number of records in a given filter context where the last/max record for a given customer is a certain status. Talking over the logic it appears that semantically the above query should CALCULATE the correct value. However, I'm running into some quirky behavior and at this point can't seem to figure out why the measure is generating the result that it is.

For example:

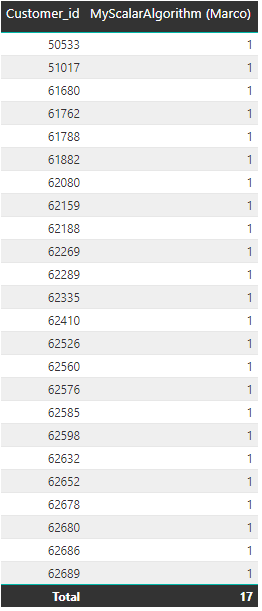

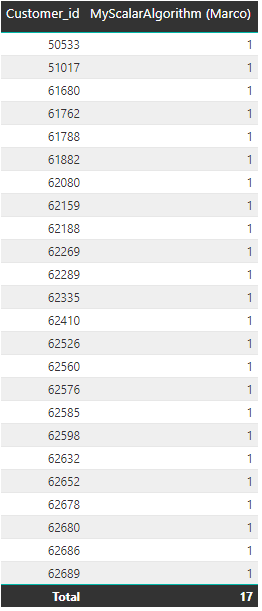

If I put a single date on a slicer or set a date filter in the filters panel to a single day, and then put Customer_id on a visual like a table, I get the correct amount of records from each line in the table according to a comparison with the database (in this case, 24 results); however, the "total" row has less than the aggregate of the rows:

Obviously something is going on whereby the filter context for the "total" is different than the filter context where Customer_id is put on the visual. I haven't quite figured out why yet.

I have a few theories which may or may not be correct assumptions.

The first is that it has something to do with customers who have additional transactions beyond the current date context. I created a calculated column at the ETL level in Power Query that functions as flag signifying whether or not a given record is the very last transaction for a customer in the context of ALL(Date[Date]) (so all transactions for all customers- see the image below where it's called "IsMaxTransactionRecord"). Note in this example that in the current date context the customers with 0 indicate customers that have more transactions beyond this date, and customers with a 1 indicate that on this day this is truly the ultimate MAX transaction for that customer. What's striking is that if you exclude customers who have a 0, you get a total of 17—the same as the scalar algorithm!:

![MarcoTREATASdiscrepancy2.PNG Note 17 results where [IsMaxTransactionRecord] = 1 that are truly the ultimate MAX transaction for a given customer and have occurred on the day in the current filter context](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/107107i21617EC596369780/image-size/large?v=v2&px=999)

My second theory is that it may have something to do with duplicate values. When I export the data to excel and apply some conditional formatting, I'm able to filter on both max transaction for each Customer_id and also filter by duplicate/not duplicate values. If I filter out duplicates and filter only to max transactions, I get 17 results also.

It's kind of quirky and I'm not 100% sure what's going on. Perhaps there's something going on beyond the scenes with TREATAS that I'm not catching. If you have any insight into using TREATAS, please share your feedback with me. Microsoft's documentation is rather poor and bare bones (as usual), and the function spec post-dates the first edition of The Definitive Guide to DAX. Furthermore, the article on TREATAS at SQLBI.com deals with filtering data at different time granularities, not calculating or grouping dynamic states.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In case anyone is interested, I believe I may have solved this problem on my own. I am in the process of verifying results so this solution is tentative at this point.

For the table solution:

MyTableAlgorithm =

VAR QueryStartDate = FIRSTDATE(Date[Date])

VAR QueryEndDate = LASTDATE(Date[Date])

RETURN

CALCULATETABLE (

SUMMARIZE (

FILTER (

SUMMARIZE ( // SUMMARIZECOLUMNS won't work here.

CustomerTransactions,

CustomerTransactions[Customer_id],

CustomerTransactions[TransactionAddedDate],

CustomerTransactions[CustomerActivityCode_id]

),

CustomerTransactions[TransactionAddedDate] >= QueryStartDate

&& CustomerTransactions[TransactionAddedDate] <= QueryEndDate

),

CustomerTransactions[Customer_id],

"MaxFeatureTransNum", MAX ( CustomerTransactions[num] ),

"MaxCustomerActivityCode_id", MAX ( CustomerTransactions[CustomerActivityCode_id] )

),

USERELATIONSHIP ( Date[Date], CustomerTransactions[TransactionAddedDate] ),

CustomerTransactions[CustomerActivityCode_id] = 2

)

I did read over at SQLBI.com that "A counterintuitive limitation in DAX is that you can group by extension columns, but you cannot perform meaningful calculations grouping by extension columns," thus confirming my suspicion that the additional arguments of SUMMARIZE or ADDCOLUMNS cannot be filtered against.

Marco gives a workaround but I haven't completely wrapped my head around it just yet. Further, there's been the addition of SUMMARIZECOLUMNS; my first attempts tried using this function (see first post) but on final implementation in Power BI it craps out with an error like this:

I thought the Microsoft Devs had fixed the issue where SUMMARIZECOLUMNS can't be used in a measure, but it may have something to do with layered filter contexts. Don't know.

Furthermore, after trying to work with that broken algorithm and fooling around in Power BI I ended getting some kind of corrupt memory error I've never seen before and haven't been able to replicate (I have no desire to attempt replicating it, either). I don't know if the two are related, but hey, there you go.

You'll notice in the above algorithm that the extension columns originally in the innermost SUMMARIZECOLUMNS of the first post have been removed. Their inclusion and omission don't seem to effect the outcome; this likely has to do with Marco's observations on lineage and virtual extension columns having no relationship with the physical data model.

Here's how I think the algorithm works: the innermost SUMMARIZE generates the initial set of data to work with. FILTER narrows the set to the dynamic date range set by the filter context. Somehow CALCULATETABLE works magic with its inverted order of evaluation with the outer SUMMARIZE statement. This is the one part I'm questioning whether or not it is calculating the result that I am expecting it to return. If CALCULATETABLE inverts evaluation order then is it imposing a CustomerTransactions[CustomerActivityCode_id] = 2 filter on the data from the start? This would not be the behavior I am looking for as it is functioning the same as a simple SQL WHERE clause. Pending the verification of my results I may need to invert the order somewhere or nest CALCULATETABLE functions. If you have any ideas please share!

The scalar solution:

Simply wrap the above in COUNTROWS:

MyScalarAlgorithm =

VAR QueryStartDate = FIRSTDATE(Date[Date])

VAR QueryEndDate = LASTDATE(Date[Date])

RETURN

COUNTROWS (

CALCULATETABLE (

SUMMARIZE (

FILTER (

SUMMARIZE (

CustomerTransactions,

CustomerTransactions[Customer_id],

CustomerTransactions[TransactionAddedDate],

CustomerTransactions[CustomerActivityCode_id]

),

CustomerTransactions[TransactionAddedDate] >= QueryStartDate

&& CustomerTransactions[TransactionAddedDate] <= QueryEndDate

),

CustomerTransactions[Customer_id],

"MaxFeatureTransNum", MAX ( CustomerTransactions[num] ),

"MaxCustomerActivityCode_id", MAX ( CustomerTransactions[CustomerActivityCode_id] )

),

USERELATIONSHIP ( Date[Date], CustomerTransactions[TransactionAddedDate] ),

CustomerTransactions[CustomerActivityCode_id] = 2

)

)

Please let me know if you see issues with my logic or understanding. I would love to learn from my errors and failures!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Everyone,

I've been in discussion with the folks over at SQLBI and here's a proposed solution using TREATAS to apply a filter between two virtual tables created in the same date context:

MyScalarAlgorithm = CALCULATE (

// Computes table containing last transaction for each Customer_id

// in the current filter context: VAR LastCustomerTransactionDate = ADDCOLUMNS ( VALUES ( CustomerTransactions[Customer_id] ), "MaxFeatureTransNum", CALCULATE ( MAX ( CustomerTransactions[Transaction_num] ) ) )

// Use the above VAR to establish filter context using TREATAS and computes

// the last activity status for the MAX(Transaction_num]) in the same date context: VAR LastCustomerTransactionState = CALCULATETABLE ( ADDCOLUMNS ( VALUES ( CustomerTransactions[Customer_id] ), "MaxCustomerActivityCode_id", CALCULATE ( MAX ( CustomerTransactions[CustomerActivityCode_id] ) ) ), TREATAS ( LastCustomerTransactionDate, CustomerTransactions[Customer_id], CustomerTransactions[Transaction_num] ) )

// Count records from the above table where the last status

// within the current date context is 2: VAR Result = COUNTROWS ( FILTER ( LastCustomerTransactionState, [MaxCustomerActivityCode_id] = 2 ) ) RETURN Result, USERELATIONSHIP ( PBIDate[Date], CustomerTransactions[TransactionAddedDate] ) )

// "Please note that all the variables are within a CALCULATE just to activate the

// right relationship for PBIDate, so the filter over time is applied everywhere.

// The evaluation is in multiple steps:

// 1) Compute the last transaction number for each customer

// 2) Use the result of 1) as filter context using TREATAS and compute the last status at that date

// (assuming that if there are multiple statuses in the same date, MAX is the way to go.

// If you assume there is only one state per date use VALUES instead of MAX,

// so if the assumption is wrong you will get an error – please note that the code currently breaks

// if I use VALUES, so there are different states in the same date for the same customer in at least one case.

// 3) Count of many of the customer states computed at 2) have a status equal to 2"

REMEMBER: our goal is to CALCULATE the number of records in a given filter context where the last/max record for a given customer is a certain status. Talking over the logic it appears that semantically the above query should CALCULATE the correct value. However, I'm running into some quirky behavior and at this point can't seem to figure out why the measure is generating the result that it is.

For example:

If I put a single date on a slicer or set a date filter in the filters panel to a single day, and then put Customer_id on a visual like a table, I get the correct amount of records from each line in the table according to a comparison with the database (in this case, 24 results); however, the "total" row has less than the aggregate of the rows:

Obviously something is going on whereby the filter context for the "total" is different than the filter context where Customer_id is put on the visual. I haven't quite figured out why yet.

I have a few theories which may or may not be correct assumptions.

The first is that it has something to do with customers who have additional transactions beyond the current date context. I created a calculated column at the ETL level in Power Query that functions as flag signifying whether or not a given record is the very last transaction for a customer in the context of ALL(Date[Date]) (so all transactions for all customers- see the image below where it's called "IsMaxTransactionRecord"). Note in this example that in the current date context the customers with 0 indicate customers that have more transactions beyond this date, and customers with a 1 indicate that on this day this is truly the ultimate MAX transaction for that customer. What's striking is that if you exclude customers who have a 0, you get a total of 17—the same as the scalar algorithm!:

![MarcoTREATASdiscrepancy2.PNG Note 17 results where [IsMaxTransactionRecord] = 1 that are truly the ultimate MAX transaction for a given customer and have occurred on the day in the current filter context](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/107107i21617EC596369780/image-size/large?v=v2&px=999)

My second theory is that it may have something to do with duplicate values. When I export the data to excel and apply some conditional formatting, I'm able to filter on both max transaction for each Customer_id and also filter by duplicate/not duplicate values. If I filter out duplicates and filter only to max transactions, I get 17 results also.

It's kind of quirky and I'm not 100% sure what's going on. Perhaps there's something going on beyond the scenes with TREATAS that I'm not catching. If you have any insight into using TREATAS, please share your feedback with me. Microsoft's documentation is rather poor and bare bones (as usual), and the function spec post-dates the first edition of The Definitive Guide to DAX. Furthermore, the article on TREATAS at SQLBI.com deals with filtering data at different time granularities, not calculating or grouping dynamic states.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Tomislav,

Did you try using Marco Russo's algorithm? I think it will work pending on what you are trying to accomplish. It's been a while since I've been on this problem and I've been meaning to revisit it. I believe Marco's algorithm worked for this use case, but I had some edge cases I could not figure out at the time, and since have been occupied with other problems at work. I would try the following DAX first; please let me know if you have any questions and I'll dig up my notes from Marco:

MarcoTreatAsAlgorithm = CALCULATE (

// Computes table containing last transaction for each Customer_id (or whatever ID of interest)

// in the current filter context: VAR LastCustomerTransactionDate = ADDCOLUMNS ( VALUES ( CustomerTransactions[Customer_id] ), "MaxFeatureTransNum", CALCULATE ( MAX ( CustomerTransactions[Transaction_num] ) ) )

// Use the above VAR to establish filter context using TREATAS and computes

// the last activity status for the MAX(Transaction_num]) in the same date context: VAR LastCustomerTransactionState = CALCULATETABLE ( ADDCOLUMNS ( VALUES ( CustomerTransactions[Customer_id] ), "MaxCustomerActivityCode_id", CALCULATE ( MAX ( CustomerTransactions[CustomerActivityCode_id] ) ) ), TREATAS ( LastCustomerTransactionDate, CustomerTransactions[Customer_id], CustomerTransactions[Transaction_num] ) )

// Count records from the above table where the last status

// within the current date context is 2 (change "2" to whatever filter value you need): VAR Result = COUNTROWS ( FILTER ( LastCustomerTransactionState, [MaxCustomerActivityCode_id] = 2 ) ) RETURN Result, USERELATIONSHIP ( PBIDate[Date], CustomerTransactions[TransactionAddedDate] ) )

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

Thanks a lot!

Regards

Maggie

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 109 | |

| 99 | |

| 77 | |

| 66 | |

| 54 |

| User | Count |

|---|---|

| 144 | |

| 104 | |

| 102 | |

| 87 | |

| 64 |