- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- LOOKUPVALUE - "A table of multiple values was supp...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

LOOKUPVALUE - "A table of multiple values was supplied where a single value was expected"

Hello all,

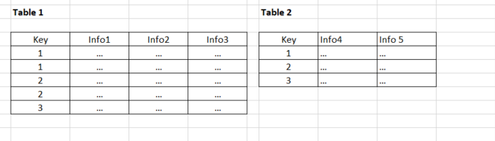

i'm trying to connect two tables with a commun key. Layout as shown here:

Usually i don't have any struggle to connect tables like this. However, everytime i try to call up information by LOOKUPVALUE in Table2 from Table1, i get an error about not expected multiple values...

Both tables are already related via the key and i acutally have made sure there aren't any doubles in Table2.

Some tips or tricks where i should look on more precisely or another way to check that there aren't any doubles?

Thanks in advance 🙂

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

consider following code for Power Query

Table2

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUUoEYqVYnWglIyAjCcYxBjJSisG8WAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Key = _t, #"Info 4" = _t, #"Info 5" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Key", Int64.Type}, {"Info 4", type text}, {"Info 5", type text}}), #"Merged Queries" = Table.NestedJoin(#"Changed Type",{"Key"},Table1,{"Key"},"Table1",JoinKind.LeftOuter),

#"Added Custom" = Table.AddColumn(#"Merged Queries", "Custom", each [Table1][Info 1]),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"Table1"}),

#"Extracted Values" = Table.TransformColumns(#"Removed Columns", {"Custom", each Text.Combine(List.Transform(_, Text.From), ","), type text}),

#"Split Column by Delimiter" = Table.SplitColumn(#"Extracted Values", "Custom", Splitter.SplitTextByDelimiter(",", QuoteStyle.Csv), {"Custom.1", "Custom.2", "Custom.3"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"Custom.1", Int64.Type}, {"Custom.2", Int64.Type}, {"Custom.3", Int64.Type}})

in

#"Changed Type1"you should replace the blue part with your syntax, other consideration is the #"Split Column by Delimiter" - it creates hardcoded number of columns (although if you open the edit window of that step it will reevaluate the number of columns), so it may be good to create some surplus there

for reference - Table1 that I used

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSAWOlWB0IxwjGATEMkDmGyBxjGMcYLhMLAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Key = _t, #"Info 1" = _t, #"Info 2" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Key", Int64.Type}, {"Info 1", type text}, {"Info 2", type text}})

in

#"Changed Type"

Thank you for the kudos 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i think was able to figure out a solution now:

Adding an index to Table1 (5-figure as my key is 4-figure).

Adding 7 colums to Table1 for options 1-7. Option1 = IF(Info1=Option1;Key;Index) and so on.

Table2 also adding 7 columns and recall data per each option via LOOKUPVALUE. Option1Check = LOOKUPVALUE(Info1;Option1;Key) and recall data only if length=4.

Quite a low level solution, but works fine for me for now.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the issue is actually perfectly explained in your picture - say you lookup 2 from Table 2 in Table 1 - there are 2 (so multiple) records that meet that criteria

so the question is how you aggregate the multiple entries from Table1

if the join is there and you call it from Table2 as calculated column you could use womething like this:

Column = CONCATENATEX(RELATEDTABLE(Table1),Table1[Info 1],UNICHAR(10))

UNICHAR(10) is line break delimiter, you could put there anything you want

Thank you for the kudos 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks for your quick assistance, now it's totally clear to me why i can't lookup from table2 in table1, there i was a bit slow on the uptake 🙂

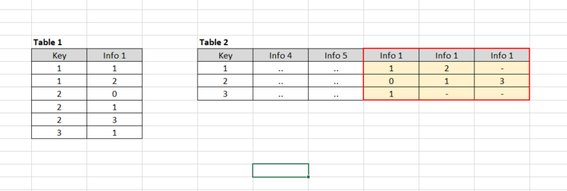

to be more accurate with my issue / my objective, i need to transform my data into following layout:

so in the best case, there will be added new columns automatically in the proper quantity of existing in table1.

Or maybe it could be easier to realize like this: Info1 could have 7 different options or could occur not more than 7 times, so there should be added 7 columns and be filled up based on the quantity in table1 and all the remainder will be left blank?

Could you give me some guidance with that?

Thanks a lot!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this would beeasier to do in Power Query - is that an option, or do you have to use DAX?

Thank you for the kudos 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

no, Power Query is an option as well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

consider following code for Power Query

Table2

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUUoEYqVYnWglIyAjCcYxBjJSisG8WAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Key = _t, #"Info 4" = _t, #"Info 5" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Key", Int64.Type}, {"Info 4", type text}, {"Info 5", type text}}), #"Merged Queries" = Table.NestedJoin(#"Changed Type",{"Key"},Table1,{"Key"},"Table1",JoinKind.LeftOuter),

#"Added Custom" = Table.AddColumn(#"Merged Queries", "Custom", each [Table1][Info 1]),

#"Removed Columns" = Table.RemoveColumns(#"Added Custom",{"Table1"}),

#"Extracted Values" = Table.TransformColumns(#"Removed Columns", {"Custom", each Text.Combine(List.Transform(_, Text.From), ","), type text}),

#"Split Column by Delimiter" = Table.SplitColumn(#"Extracted Values", "Custom", Splitter.SplitTextByDelimiter(",", QuoteStyle.Csv), {"Custom.1", "Custom.2", "Custom.3"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"Custom.1", Int64.Type}, {"Custom.2", Int64.Type}, {"Custom.3", Int64.Type}})

in

#"Changed Type1"you should replace the blue part with your syntax, other consideration is the #"Split Column by Delimiter" - it creates hardcoded number of columns (although if you open the edit window of that step it will reevaluate the number of columns), so it may be good to create some surplus there

for reference - Table1 that I used

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSAWOlWB0IxwjGATEMkDmGyBxjGMcYLhMLAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Key = _t, #"Info 1" = _t, #"Info 2" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Key", Int64.Type}, {"Info 1", type text}, {"Info 2", type text}})

in

#"Changed Type"

Thank you for the kudos 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As i'm quite new to PowerBI, i'm not very experienced in the PowerQuery coding. So i have a hard time using your code and customize it to my own architecture. I draw my data from Sharepoint online lists , therefore my struggle even starts because there aren't listed the individual elements at all.

Is there a possibility to use the PowerQuery interface and to run the used steps manually to get the proper code?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i think was able to figure out a solution now:

Adding an index to Table1 (5-figure as my key is 4-figure).

Adding 7 colums to Table1 for options 1-7. Option1 = IF(Info1=Option1;Key;Index) and so on.

Table2 also adding 7 columns and recall data per each option via LOOKUPVALUE. Option1Check = LOOKUPVALUE(Info1;Option1;Key) and recall data only if length=4.

Quite a low level solution, but works fine for me for now.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 115 | |

| 99 | |

| 86 | |

| 70 | |

| 62 |

| User | Count |

|---|---|

| 151 | |

| 120 | |

| 103 | |

| 87 | |

| 68 |