- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: JSON Joining Records in Groups

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

JSON Joining Records in Groups

Hi Community,

I am trying to retrieve records from an API that returns a Json formatted output in max groups of 1,000 records per request.

I have 10,000 journal records to get so i query the API to get each group of 1,000 records via incrementing the SKIP step.

Query1

let

Source = Json.Document(Web.Contents("http://localhost:8080/AccountRight/FileID/GeneralLedger/JournalTransaction/?$top=1000"))

in

Source

Query2

let

Source = Json.Document(Web.Contents("http://localhost:8080/AccountRight/FileID/GeneralLedger/JournalTransaction/?$top=1000&$skip=1000"))

in

Source

So i ended up with 9 queries for each group of 1000 records and then a Table.Combine to consolidated tables into one master listing....it was probably an inefficent way to do this.

I was reading Chris Webb and ImkeF posts about solving types of issues with API pagination limits and using functions to automate the get data part but i need some help with getting the list of journals to combine.

I wasnt sure best way to complete next step.

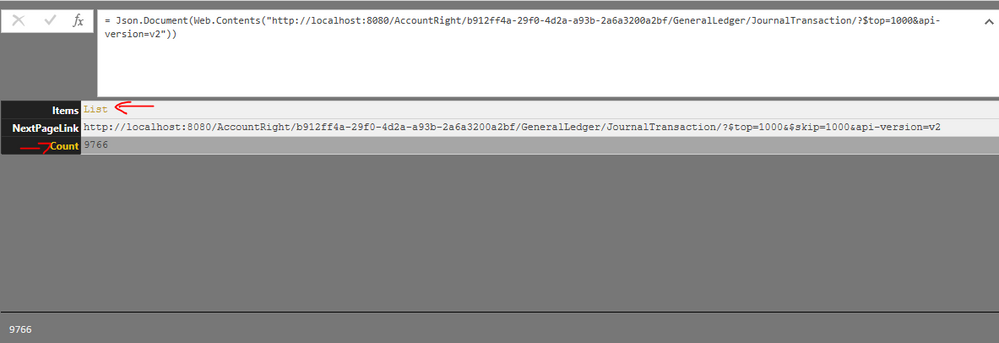

I am given then number count of records and nextpageLink for the URI for next 1000 journals.

I can generate a query to return the number of records

let

Source = Json.Document(Web.Contents("http://localhost:8080/AccountRight/FileId/GeneralLedger/JournalTransaction/")),

Items = Source[Count]

in

Items

This returns a value of 9766 shown in the source datawindow above. So if i divide this by 1000 i get the number of page calls i need to make to get all journal transactions.

I can now create a function to grab records by entering a SkipValue, so that seems to work okay...

(SkipValue as text) =>

let

Source = Json.Document(Web.Contents("http://localhost:8080/AccountRight/FileId/GeneralLedger/JournalTransaction/?$stop=1000&$skip=" & SkipValue "))

in

Source

How do i integrate the function with data so i can combine this into 1 table dynamically ?

How do i get the Skip values required for each source page of data lists?

Can anyone point me in right direction?

Thanks again for any assistance...and Merry Xmas everyone

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @THEG72,

After research, there is a blog discribed how to implement REST API pagination in Power Query, and similar thread for your reference.

How To Do Pagination In Power Query

Creating a loop for fetching paginated data from a REST API

Best Regards,

Angelia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

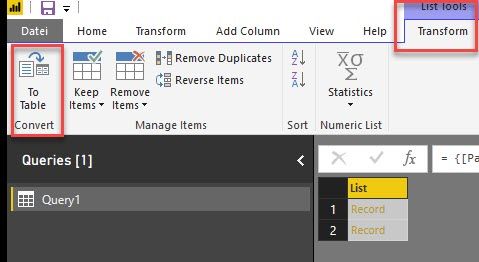

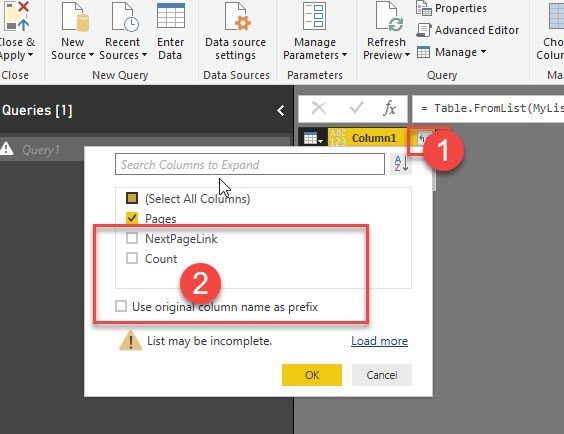

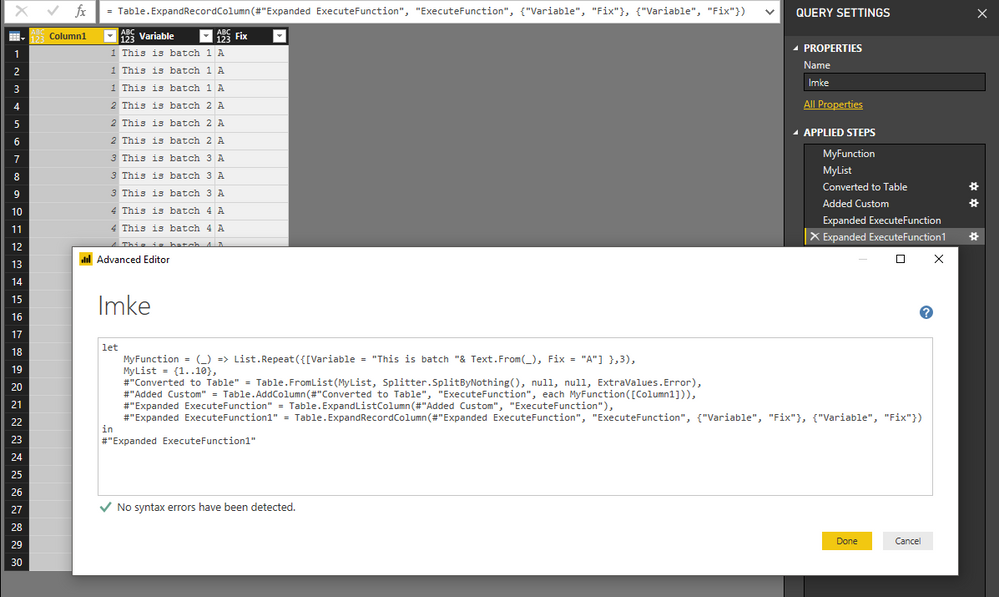

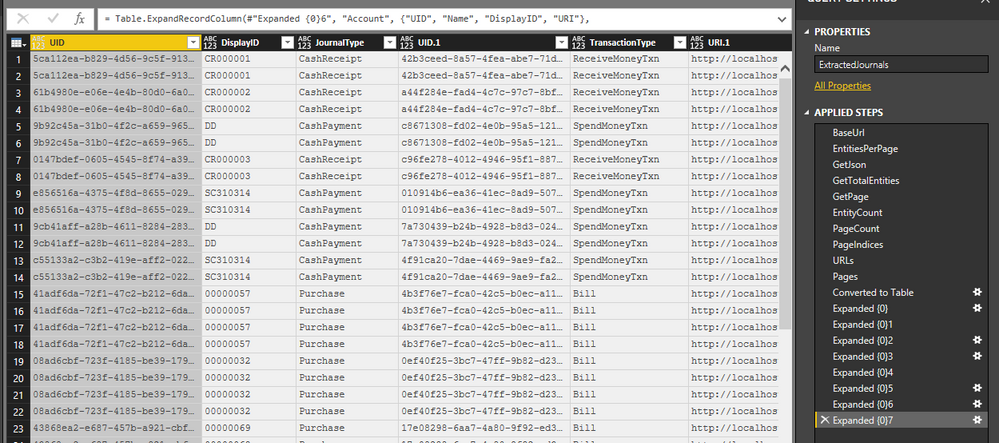

Hi Garry, thanks for the pics. If your list contains all the data already, there is no need to use the other technique I've suggested. Just do the following:

1) Delete the steps after "Pages"

2) Transform your list to a table:

3) Expand the column by clicking on the arrows (1) and deselect the other fields (2) like this:

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

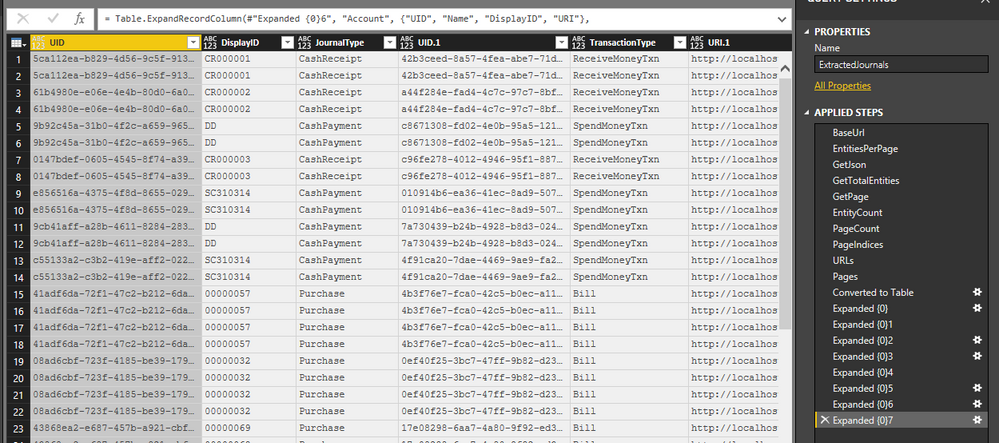

Thanks for this....it combined as you have advised and i have expanded the records containing the data to reveal all the 9.766 records....You are a STAR :)...I got lost a bit on this as you are right this structured Json file is odd....

I had to expand the data many times to extract the final information

Here is the final code

let

BaseUrl = "http://localhost:8080/AccountRight/FileID/GeneralLedger/",

EntitiesPerPage = 1000,

GetJson = (Url) =>

let Rawdata = Web.Contents(Url),

Json = Json.Document(RawData)

in Json,

GetTotalEntities = () =>

let Json = Json.Document(Web.Contents("http://localhost:8080/AccountRight/fileID/GeneralLedger/")),

Items = Json[Count]

in

Items,

GetPage = (Index) =>

let skip = "$skip=" & Text.From(Index * EntitiesPerPage),

Url = BaseUrl & "&" & skip

in Url,

EntityCount = List.Max({EntitiesPerPage, GetTotalEntities()}),

PageCount = Number.RoundUp(EntityCount / EntitiesPerPage),

PageIndices = {0 .. PageCount - 1},

URLs = List.Transform(PageIndices, each GetPage(_)),

Pages = List.Transform(URLs, each GetJson(_)),

#"Converted to Table" = Table.FromList(Pages, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded {0}" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"Items"}, {"Column1.Items"}),

#"Expanded {0}1" = Table.ExpandListColumn(#"Expanded {0}", "Column1.Items"),

#"Expanded {0}2" = Table.ExpandRecordColumn(#"Expanded {0}1", "Column1.Items", {"UID", "DisplayID", "JournalType", "SourceTransaction", "DateOccurred", "DatePosted", "Description", "Lines", "URI", "RowVersion"}, {"UID", "DisplayID", "JournalType", "SourceTransaction", "DateOccurred", "DatePosted", "Description", "Lines", "URI", "RowVersion"}),

#"Expanded {0}3" = Table.ExpandRecordColumn(#"Expanded {0}2", "SourceTransaction", {"UID", "TransactionType", "URI"}, {"UID.1", "TransactionType", "URI.1"}),

#"Expanded {0}4" = Table.ExpandListColumn(#"Expanded {0}3", "Lines"),

#"Expanded {0}5" = Table.ExpandRecordColumn(#"Expanded {0}4", "Lines", {"Account", "Amount", "IsCredit", "Job", "LineDescription", "ReconciledDate"}, {"Account", "Amount", "IsCredit", "Job", "LineDescription", "ReconciledDate"}),

#"Expanded {0}6" = Table.ExpandRecordColumn(#"Expanded {0}5", "Job", {"UID", "Number", "Name", "URI"}, {"UID.2", "Number", "Name", "URI.2"}),

#"Expanded {0}7" = Table.ExpandRecordColumn(#"Expanded {0}6", "Account", {"UID", "Name", "DisplayID", "URI"}, {"UID.3", "Name.1", "DisplayID.1", "URI.3"})

in

#"Expanded {0}7"

I did a count of the UID's and it returned 9,766 dated from 2014 till November 2017 and the file size is about 2.7meg.

@ImkeF just another question if I can. would you recommend this method to capture the accounting transactions for historical periods or summarise this data? I know the loading of historical data can increase load time....If it can be summarised is this a better approach as more transactions are added?

Again, thanks for your expertise on the matter.....and Happy New Year everyone!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Garry,

I had a different understanding of your actual data.

I believe you just need to test out different versions and see for yourself which one is faster at the end.

Imke Feldmann

www.TheBIccountant.com -- How to integrate M-code into your solution -- Check out more PBI- learning resources here

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just posting some additional info for others....Imke has helped me get further with this on Power Query post...

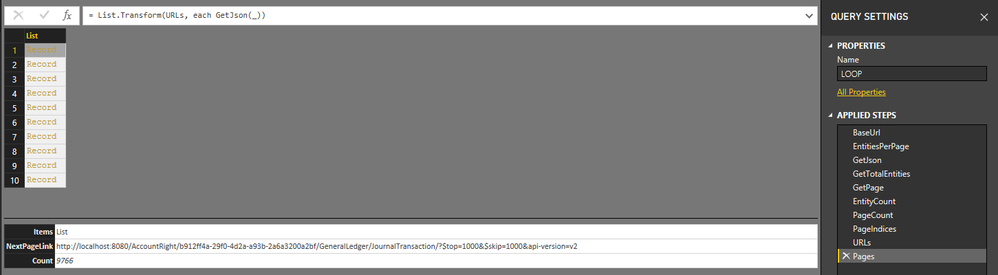

My lastest code is here...

let

BaseUrl = "http://localhost:8080/AccountRight/b912ff4a-29f0-4d2a-a93b-2a6a3200a2bf/GeneralLedger/JournalTransaction/?api-version=v2&$top=1000",

EntitiesPerPage = 1000,

GetJson = (Url) =>

let RawData = Web.Contents(Url),

Json = Json.Document(RawData)

in Json,

GetTotalEntities = () =>

let Json = Json.Document(Web.Contents("http://localhost:8080/AccountRight/b912ff4a-29f0-4d2a-a93b-2a6a3200a2bf/GeneralLedger/JournalTransaction/?api-version=v2")),

Items = Json[Count]

in

Items,

GetPage = (Index) =>

let skip = "$skip=" & Text.From(Index * EntitiesPerPage),

Url = BaseUrl & "&" & skip

in Url,

EntityCount = List.Max({EntitiesPerPage, GetTotalEntities()}),

PageCount = Number.RoundUp(EntityCount / EntitiesPerPage),

PageIndices = {0 .. PageCount - 1},

URLs = List.Transform(PageIndices, each GetPage(_)),

Pages = List.Transform(URLs, each GetJson(_))

in

Pages

I now end up with a list of 10 records which look to hold the right information for the 10,000 records.

How do i get the Items List (contain each set of 1,000 records)..? by manually expanding each record and then item list with data....How do i go back to get the 2nd to 10th records table data?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @THEG72,

After research, there is a blog discribed how to implement REST API pagination in Power Query, and similar thread for your reference.

How To Do Pagination In Power Query

Creating a loop for fetching paginated data from a REST API

Best Regards,

Angelia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

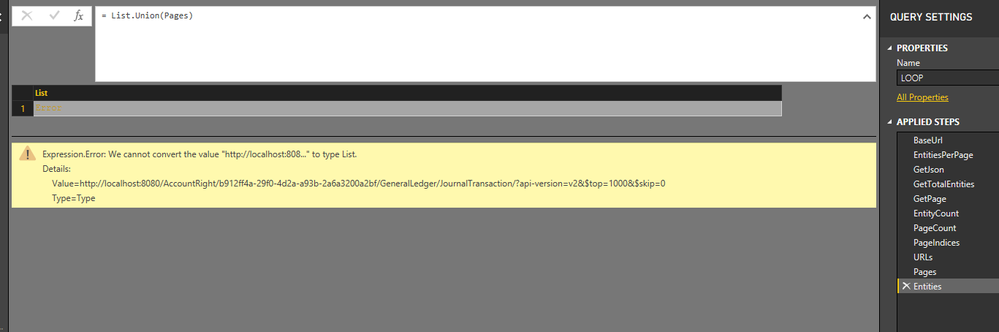

Thanks for the links Angelia,

I managed to get most of the code working on my version...but i ended up with an error at the List.Union step.

Here is the error message: 'Expression.Error: We cannot convert the value "http://localhost:808..." to type List."

The previous step returns the pages which i tested and work fine in a browser...BUT Does the list values themselves shown below need to be in quotes? like so "http://localhost:8080......" in the list so the List.Union step works?

Here is my code to date:

let

BaseUrl = "http://localhost:8080/AccountRight/Fileid/GeneralLedger/JournalTransaction/$top=1000",

EntitiesPerPage = 1000,

GetJson = (Url) =>

let RawData = Web.Contents(Url),

Json = Json.Document(RawData)

in Json,

GetTotalEntities = () =>

let Json = Json.Document(Web.Contents("http://localhost:8080/AccountRight/filed/GeneralLedger/JournalTransaction/")),

Items = Json[Count]

in

Items,

GetPage = (Index) =>

let Skip = "$skip=" & Text.From(Index * EntitiesPerPage),

Url = BaseUrl & "&" & Skip

in Url,

EntityCount = List.Max({EntitiesPerPage, GetTotalEntities()}),

PageCount = Number.RoundUp(EntityCount / EntitiesPerPage),

PageIndices = {0 .. PageCount - 1},

URLs = List.Transform(PageIndices, each GetPage(_)),

Pages = List.Transform(PageIndices, each GetPage(_)),

Entities = List.Union(Pages)

in

Entities

When i look at the first entry point for the journal data it shows the first 1,000 records in the list of items, the nextpage and count which is helpful...maybe i cant use the exact method you have linked this post to however i may be able to use these variables to reference the right information required.

As i work through the applied steps, each of the steps seem to return the expected results...Entity count, page count, base URL, PageIndicies and URLs for each of required pages work...

Anyway, thanks for the information to date!

Cheers Garry

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @THEG72,

You're welcome, it's my pleasure to help you. But I am not a expert in Power Query area. For Power Query statement, you can post the case to Power Query statement to get dedicated support.

Thanks,

Angelia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply @v-huizhn-msft

I posted and got further with this here.

Thanks to assistance from Imke Feldmann - MVP Data Platform (PowerBI) - http://www.TheBIccountant.com

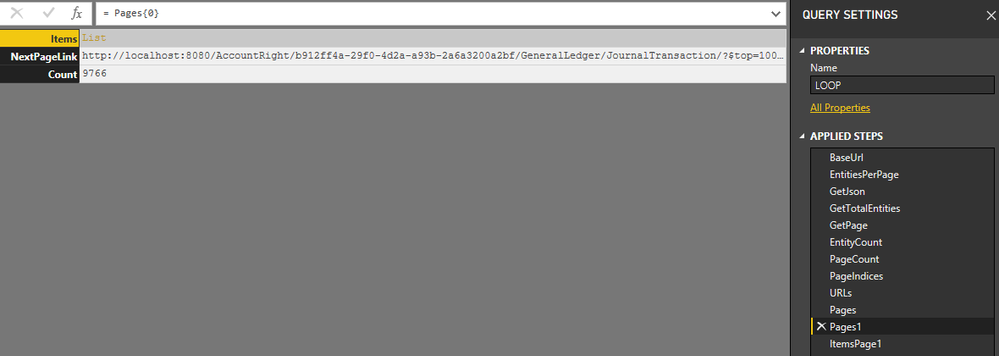

I now have a list of ten records for each url.

Each Record (1-10) shows:

Items List (Expandale data records)

NextPageLink (Url for next set of 1,000 records)

Count 9766 (shows total records)

I need the work out how to grab each records Items List(holding 1,000 journal transactions each) and Expand and combine?

I can expand first record and get first set of 10,000 records

How do i go back to get Records 2 to 10 and Expand then combine all 10 Record lists...?

Okay Imke , has advised i need to "call your function in a Table.AddColumn-command" but i am still unsure on how to apply this code to my previous code so i will have a look further into the instructions provided by this Super Contributor...Thanks again for your help and Imke

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Garry, thanks for the pics. If your list contains all the data already, there is no need to use the other technique I've suggested. Just do the following:

1) Delete the steps after "Pages"

2) Transform your list to a table:

3) Expand the column by clicking on the arrows (1) and deselect the other fields (2) like this:

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for this....it combined as you have advised and i have expanded the records containing the data to reveal all the 9.766 records....You are a STAR :)...I got lost a bit on this as you are right this structured Json file is odd....

I had to expand the data many times to extract the final information

Here is the final code

let

BaseUrl = "http://localhost:8080/AccountRight/FileID/GeneralLedger/",

EntitiesPerPage = 1000,

GetJson = (Url) =>

let Rawdata = Web.Contents(Url),

Json = Json.Document(RawData)

in Json,

GetTotalEntities = () =>

let Json = Json.Document(Web.Contents("http://localhost:8080/AccountRight/fileID/GeneralLedger/")),

Items = Json[Count]

in

Items,

GetPage = (Index) =>

let skip = "$skip=" & Text.From(Index * EntitiesPerPage),

Url = BaseUrl & "&" & skip

in Url,

EntityCount = List.Max({EntitiesPerPage, GetTotalEntities()}),

PageCount = Number.RoundUp(EntityCount / EntitiesPerPage),

PageIndices = {0 .. PageCount - 1},

URLs = List.Transform(PageIndices, each GetPage(_)),

Pages = List.Transform(URLs, each GetJson(_)),

#"Converted to Table" = Table.FromList(Pages, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded {0}" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"Items"}, {"Column1.Items"}),

#"Expanded {0}1" = Table.ExpandListColumn(#"Expanded {0}", "Column1.Items"),

#"Expanded {0}2" = Table.ExpandRecordColumn(#"Expanded {0}1", "Column1.Items", {"UID", "DisplayID", "JournalType", "SourceTransaction", "DateOccurred", "DatePosted", "Description", "Lines", "URI", "RowVersion"}, {"UID", "DisplayID", "JournalType", "SourceTransaction", "DateOccurred", "DatePosted", "Description", "Lines", "URI", "RowVersion"}),

#"Expanded {0}3" = Table.ExpandRecordColumn(#"Expanded {0}2", "SourceTransaction", {"UID", "TransactionType", "URI"}, {"UID.1", "TransactionType", "URI.1"}),

#"Expanded {0}4" = Table.ExpandListColumn(#"Expanded {0}3", "Lines"),

#"Expanded {0}5" = Table.ExpandRecordColumn(#"Expanded {0}4", "Lines", {"Account", "Amount", "IsCredit", "Job", "LineDescription", "ReconciledDate"}, {"Account", "Amount", "IsCredit", "Job", "LineDescription", "ReconciledDate"}),

#"Expanded {0}6" = Table.ExpandRecordColumn(#"Expanded {0}5", "Job", {"UID", "Number", "Name", "URI"}, {"UID.2", "Number", "Name", "URI.2"}),

#"Expanded {0}7" = Table.ExpandRecordColumn(#"Expanded {0}6", "Account", {"UID", "Name", "DisplayID", "URI"}, {"UID.3", "Name.1", "DisplayID.1", "URI.3"})

in

#"Expanded {0}7"

I did a count of the UID's and it returned 9,766 dated from 2014 till November 2017 and the file size is about 2.7meg.

@ImkeF just another question if I can. would you recommend this method to capture the accounting transactions for historical periods or summarise this data? I know the loading of historical data can increase load time....If it can be summarised is this a better approach as more transactions are added?

Again, thanks for your expertise on the matter.....and Happy New Year everyone!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good to hear & a Happy New Year to you as well!

With regards to the summarisation: It really depends on what you want to do with the data.

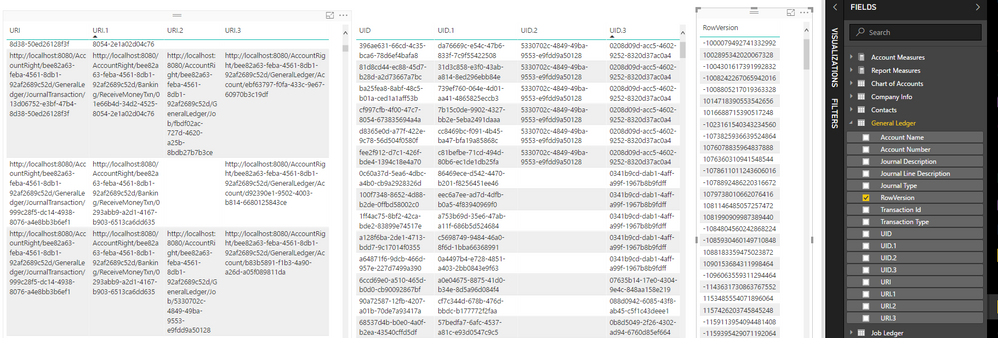

What I would recommend to you instead is to expand fewer columns:

1) Don't expand your keys multiple times. Those are the fields that combine the different record fields like: UID and URI

2) Just expand those field from which you are sure that you need them in your reports. In Power BI there is absolutely no need to "keep" columns in case you need them later: If you need them later, just go to the query editor and select them. They will be there, just that they have not been displayed in the first time.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ImkeF what is the significance of the URI versus UID versus ROW Version?

When i expanded everything i ended up with a UID, UID.1, UID.2 and UID.3 same with the URI's....so four versions but different outputs.....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Garry,

I had a different understanding of your actual data.

I believe you just need to test out different versions and see for yourself which one is faster at the end.

Imke Feldmann

www.TheBIccountant.com -- How to integrate M-code into your solution -- Check out more PBI- learning resources here

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ImkeF

I needed the Job and Account Details which meant i had to expand to levels i went to but i can remove the columns not required. I also managed to work this into a template so i can just change the API source for this to work on different accounting files.

I am looking at doing various Profit and Loss Statements and Balance Sheets etc by Project and sub job.

There are several ledgers that make up the general ledger so its a matter I think of working through each of the sub-ledger systems ...I know that loading of historical data in this regard can increase the wait time depending how many years back you want to retrieve historical data from.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @THEG72,

Thanks for sharing, more people will benefit from here.

Best Regards,

Angelia

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 117 | |

| 107 | |

| 69 | |

| 68 | |

| 43 |

| User | Count |

|---|---|

| 148 | |

| 103 | |

| 103 | |

| 88 | |

| 66 |