- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Histogram of number of occurrences of text bas...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Histogram of number of occurrences of text based and / or numerical measures

Does anyone have advice on easier ways to do what I have done and am trying to do below?

I am analyzing the depth of coverage (measured primarily by the average number of unique local stories) across a large news site (patch.com), which has ~2000 local websites, ~30m monthly unique readers, and different types of author roles (5 = journalist, for example, 7 = local business, etc).

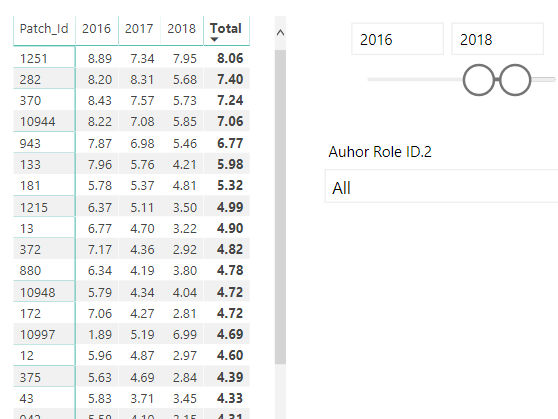

Here's a sample of the data set.

TABLE: all_articles_ever

| Date | CleansedTitleDateAuthor | Patch_Id | Author_Id | Author_Role_Id |

| 12/21/2018 | $339kcinnaminsonhomeisanentertainersdream;12/21/2018;93127 | 817 | 93127 | 5 |

| 11/29/2018 | $319kcinnaminsonhomesitsonhalfanacre;11/29/2018;93127 | 817 | 93127 | 5 |

| 11/28/2018 | $220sneakersstolenfromunlockedvehicleinbrynmawrpolice;11/28/2018;22896833 | 628 | 22896833 | 5 |

| 11/5/2018 | $57mundevelopedestateupforsaleinmclean;11/5/2018;22924509 | 848 | 22924509 | 5 |

| 10/25/2018 | $1millionpowerballticketsoldinsouthjersey;10/25/2018;93127 | 817 | 93127 | 5 |

| 10/12/2018 | 10contemporarycahomestogetyoudreaming;10/12/2018;21854731 | 67 | 21854731 | 5 |

| 9/28/2018 | $4600ofpropertystolenfromofficeinbeachwoodblotter;9/28/2018;22896693 | 834 | 22896693 | 5 |

| 8/30/2018 | $1millionpowerballticketsoldinwiltonstillunclaimed;8/30/2018;103600 | 14 | 103600 | 5 |

| 8/27/2018 | $550kmeadowviewvillagehomeavailableincinnaminson;8/27/2018;93127 | 817 | 93127 | 5 |

| 8/23/2018 | $11mautorepaircollegeopensinbloomfield(photos);8/23/2018;22844250 | 473 | 22844250 | 5 |

| 8/20/2018 | $1360incounterfeitmoneyfoundincrashedcarinbeachwood;8/20/2018;22896693 | 834 | 22896693 | 5 |

| 8/15/2018 | $5millionhomefeaturesviewscountryclubliving;8/15/2018;21854731 | 67 | 21854731 | 5 |

| 8/14/2018 | $500kbloomfieldhomeonmorseavenuehasenormouspool;8/14/2018;22844250 | 473 | 22844250 | 5 |

| 8/3/2018 | $5sandwiches|burglarysuspectsmanhattanbeachtopstories;8/3/2018;22942913 | 54 | 22942913 | 5 |

| 7/30/2018 | $24mgladwynehomehaswinecellarfireplaces2garagesmore;7/30/2018;22896833 | 628 | 22896833 | 5 |

| 7/19/2018 | $199msaleonmcleansgoldcoastsetsrecord;7/19/2018;22924509 | 848 | 22924509 | 5 |

| 7/11/2018 | $1000rewardofferedinfatalsouthaustinshootingcase;7/11/2018;22872998 | 10936 | 22872998 | 5 |

| 7/5/2018 | 10+newlawsthatcanchangeyourlifeinctstartingnow;7/5/2018;22824890 | 12 | 22824890 | 5 |

| 6/28/2018 | &pizzatoopenatthemallatprincegeorges;6/28/2018;22891861 | 240 | 22891861 | 5 |

| 6/27/2018 | $14millionchetsecuritybreachuncovered;6/27/2018;22824890 | 12 | 22824890 | 5 |

| 6/21/2018 | 10burlingtoncountytownscouldbeeliminatedunderstateplan;6/21/2018;93127 | 817 | 93127 | 5 |

| 6/19/2018 | 100thdirtcheapstoretoopeninarlingtonthursday;6/19/2018;22969719 | 11273 | 22969719 | 5 |

| 6/18/2018 | $753klottoticketsoldinpomptonplains;6/18/2018;22896301 | 44 | 22896301 | 5 |

| 6/13/2018 | $20millionschoolsecuritygrantprogramproposedinburlco;6/13/2018;93127 | 817 | 93127 | 5 |

6/8/2018 | $41kgameshowwin|150ftfallmanhattanbeachtopstories;6/8/2018;22942913 | 54 | 22942913 | 5 |

Since we generally get what we inspect, not what we expect (!), I am inspecting how many of the ~2000 sites get what number of daily unique journalist-produced articles on average. I've largely gotten there, except for visual display of the information, which I am finding to be a tricky problem.

Here's what I have done so far, and how I have done it:

A. My first goal was to understand average number of local stories per Patch. I did this in three steps, below, which could probably be done more elegantly.

1. I created a measure for Distinct articles to avoid counting dupes by first creating in M Query an amalgum of article title,date,Author and then doing a measure for distinct count of non blanks. Measure below.

#Loc.Article_Distinct =

CALCULATE(

DISTINCTCOUNT(all_articles_ever[CleansedTitleDateAuthor]) ,

all_articles_ever[CleansedTitleDateAuthor] <> BLANK ()

)

2. Next, I made a measure to calculate the average number of articles over time:

__DailyLocAvg = VAR _Days = COUNTROWS(DimDate) //Note my date table is called DimDate. It is a standard date table RETURN [#Loc.Article_Distinct] / _Days

//Side note: the above measure is a straight average, meaning that if a site measured over a year and 364 days with no articles and one day with 365, then the yearly average would be one article a day. A straight average for now is okay, although an ideal measure probably looks at the percent of days over X articles a day.

3. I realized that average articles per site measure, above, needed to have an explicit filter becaue I want to track by site. Accordingly, I made a new measure that does that, below.

__DailyLocAvgPerPatchID = AVERAGEX( KEEPFILTERS(VALUES(all_articles_ever[Patch_Id])), CALCULATE([__DailyLocAvg]) )

//Side note #2: I struggled to combine steps 2 and 3, above, into a single measure and ultimately moved on -- anyone have any bright ideas for more efficient measures here?

Anyway, so far so good. My steps may be clumsy, but I am getting the results I should be: average stories per Patch per year:

HOWEVER: here's the issue with which I have been struggling despite thorough message board crawling. What is the best way to take the measure [__DailyLocAvgPerSiteID] and get a histogram of the results to see what % of Patches get what number of articles? I have tried the following:

1. Summarize Columns to product a table of the above output, which I could then graph...

Summary = SUMMARIZECOLUMNS(

all_articles_ever[Patch_Id],

all_articles_ever[Date],

"Distinct Count", DISTINCTCOUNT(all_articles_ever[CleansedTitleDateAuthor])

)...but get the error "The expression refers to multiple columns. Multiple columns cannot be converted to a scalar value."

2. I have tried creating a bucket in DAX, which works...

__AvgStories DaxBucketed =

IF ( [#Loc.Article_Distinct] > 0,

SWITCH(

TRUE()

,[__DailyLocAvg] > .01 && [__DailyLocAvg]< 1, "A. <1"

,[__DailyLocAvg] >= 1 && [__DailyLocAvg] < 2, "B. >=1 <2"

,[__DailyLocAvg] >= 2 && [__DailyLocAvg] < 4, "C. >=2 <4"

,[__DailyLocAvg] >=4 && [__DailyLocAvg] < 8, "D. >=4 <8"

,[__DailyLocAvg] >=8 && [__DailyLocAvg] < 999999, "E. >=8"

,"0. 0"

),

"Null" ) ...but find myself then unable to find a graph that takes the textual bucket measures and produces a chart that groups by them -- only ones that group by columns. Additionally, I have to filter out a lot of nulls because the KEEPFILTERS seems like a pretty clumsy way to deal with per Site_ID.

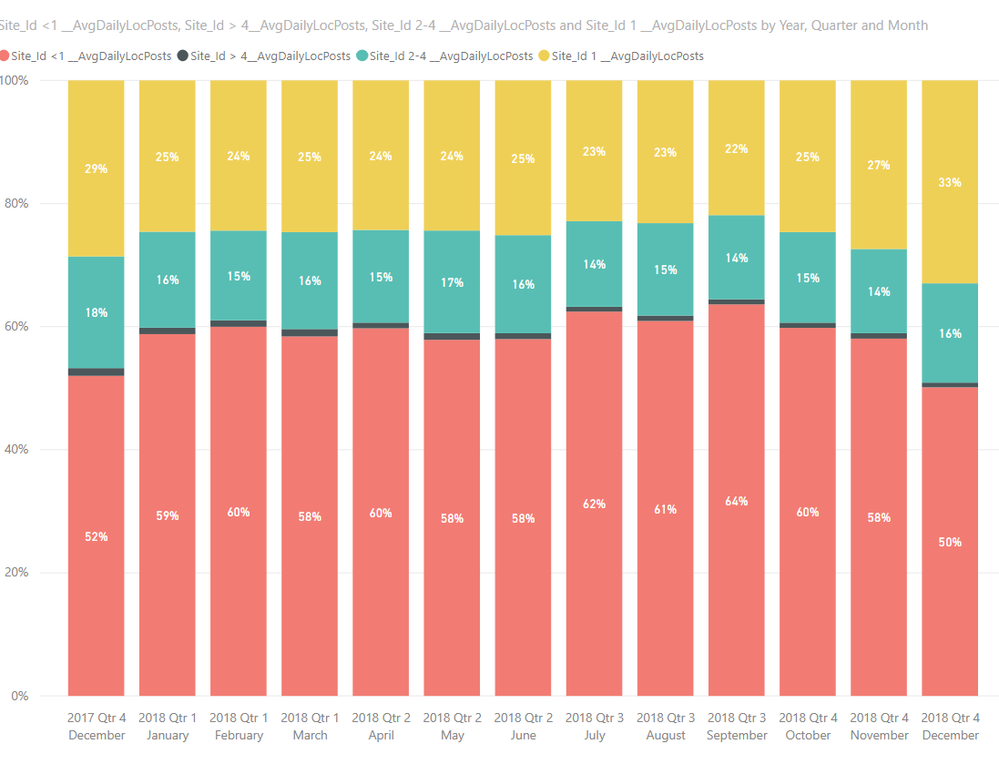

Note - here's an example of the chart I've used in the past when I've had a column reference on a summary table that shows, for any given period, the count of stories.

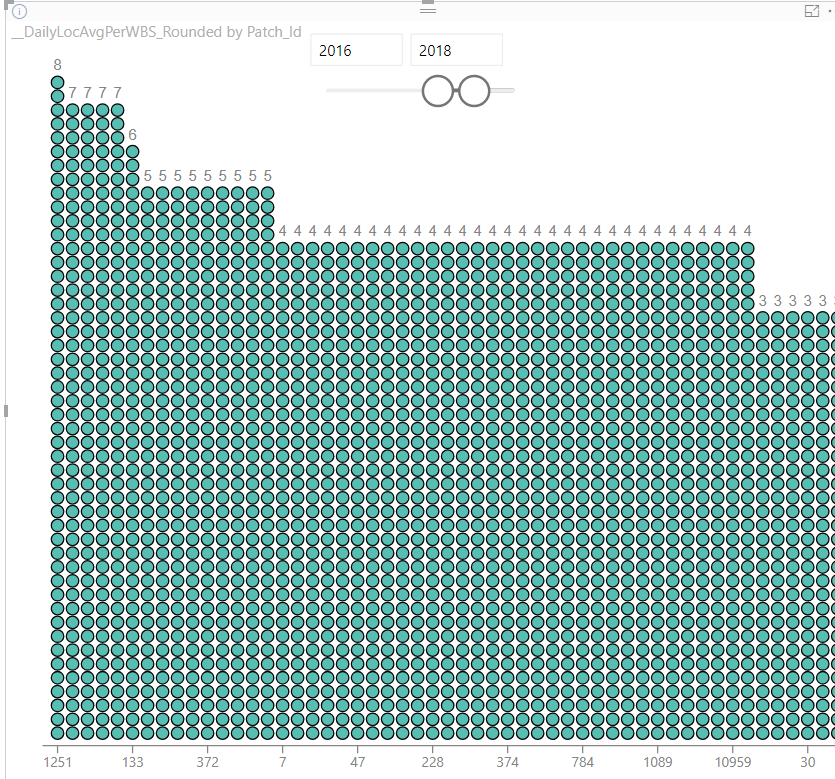

3. Finally, I have tried rounding the measure [__DailyLocAvg] so I can graph that. However, the closest to the above desired chart I am able to get is the Data Plot chart 1.6, which shows information by Patch_Id but doesnt give the data display I am trying to get to, above.

Oh PowerBI and DAX masters out there, any advice?

Thank you.

Charlie

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To be general, could you change [#Loc.Article_Distinct] and [__DailyLocAvg] into calculate column? Becaue the problem will be more complicated when you use refer to one measure in another measure.

Community Support Team _ Jimmy Tao

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I agree. Since the daily average would be per day and the table isnt per day, I have been trying to use SUMMARIZECOLUMNS for that (and probably with a Date.[day] in it to summarize by day). Any idea why my SUMMARIZECOLUMNS measure is breaking? Thank you, @v-yuta-msft

PS - I'd think the SUMMARIZECOLUMNS would look like this for any given date array, and then since the __AvgStoriesDaxBucketed is a column, I should be able to graph it as % of total.

| Patch_Id | __AvgStories DaxBucketed |

| 2 | B. >=1 <2 |

| 3 | B. >=1 <2 |

| 4 | B. >=1 <2 |

| 6 | C. >=2 <4 |

| 7 | C. >=2 <4 |

| 8 | B. >=1 <2 |

| 10 | C. >=2 <4 |

| 11 | D. >=4 <8 |

| 12 | D. >=4 <8 |

| 13 | D. >=4 <8 |

| 14 | C. >=2 <4 |

| 15 | B. >=1 <2 |

| 16 | C. >=2 <4 |

| 17 | C. >=2 <4 |

| 18 | C. >=2 <4 |

| 19 | C. >=2 <4 |

| 20 | C. >=2 <4 |

| 21 | B. >=1 <2 |

| 22 | B. >=1 <2 |

| 23 | B. >=1 <2 |

| 24 | A. <1 |

| 25 | C. >=2 <4 |

| 26 | B. >=1 <2 |

| 27 | B. >=1 <2 |

| 28 | B. >=1 <2 |

| 29 | B. >=1 <2 |

| 30 | C. >=2 <4 |

| 32 | C. >=2 <4 |

| 33 | C. >=2 <4 |

| 34 | B. >=1 <2 |

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 113 | |

| 99 | |

| 80 | |

| 70 | |

| 59 |

| User | Count |

|---|---|

| 149 | |

| 114 | |

| 107 | |

| 89 | |

| 67 |