- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Handling CSV with accumulated rows

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Handling CSV with accumulated rows

Hi all,

we are a Microsoft Partner and want to analyse the Azure Costs of our customers that have a CSP subscription from us. For this purpose our distributor provides us a CSV export in our blob storage. The csv export has the following folder structure:

Folder 1: CustomerName

Folder 2: TimePeriod (e.g. 01/01/21 - 01/31/21)

Content: up to 31 csv files - one per day

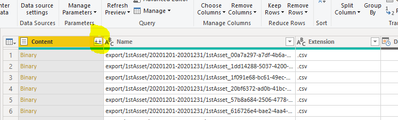

I imported the whole Azure blob storage and transformed the data to the "BINARIES" level, so that i can work with the content of the csv files.

The csv content of the first day within a TimePeriod looks like:

| Date | Duration of uptime in h |

| 01/01/21 | 19 |

The csv content of the 2nd day within a TimePeriod looks like:

| Date | Duration of uptime in h |

| 01/01/21 | 19 |

| 01/02/21 | 24 |

So when I do a sum(Duration of uptime in h) the result is 62. The correct result would be 43.

And this is aggravated by the fact that in the next timeperiod (in my example "February") the accumulation starts from 0 again.

I'm totally confused how i can solve that. Even don't know if it should be solved by transforming or DAX.

Thanks for you help in advance

Manuel

PS: Have already posted a similar post 30 mins ago, but it didn't appear in the list. So please excuse if this appears twice now.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Problem is solved... thank you very much for your fast help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @cavok

When you imported the data to the "BINARIES" level, do you mean you do something like this?

Table.PromoteHeaders( Csv.Document([Content]))

Then you combin tables together, it looks like you have duplicated rows, remove them should be working:

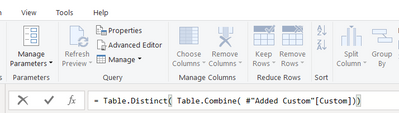

Table.Distinct( Table.Combine( #"Added Custom"[Custom]))

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Vera_33 ,

after adding the blob storage folder i've seen the file level in PowerQuery. The only thing I did was a "Click" on the "CONTENT ICON" in the binary column (see snip).

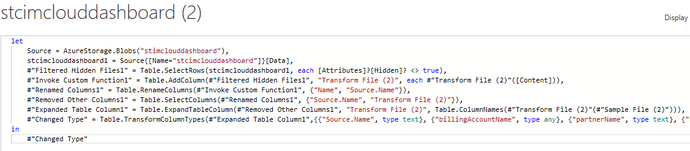

I do not join any tables. It is only one big table. The advanced editor has this code after clicking the "CONTENT ICON":

But yes I think the solution would be, deleting all rows that have the same data in every column. How can i manipulate the import with a DISTINCT so that these rows aren't imported?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @cavok

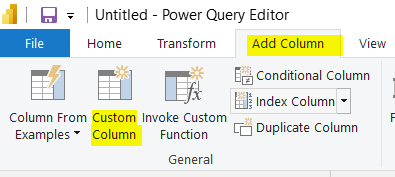

Do not click the botton when you are at the snip, add a custom column instead,

Go to Add Coumn, then put Table.PromoteHeaders( Csv.Document([Content])), you will see a table for each .csv in this new column

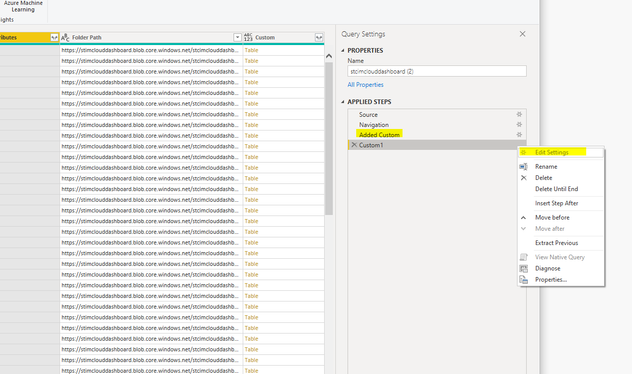

Then go to Applied steps, right click to "Insert Step After" with the code: Table.Distinct( Table.Combine( #"Added Custom"[Custom]))

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Vera_33 ,

i think we're on the right way - tried a different solution, that worked, but doubled the amound data 😄 - I added a new column which contains every data of every column in one string. After that i clicked "delete duplicate rows". Don't think that this is the best solution.

Tried your way, but I can't insert the "Distinct Code":

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Problem is solved... thank you very much for your fast help.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 114 | |

| 98 | |

| 86 | |

| 70 | |

| 62 |

| User | Count |

|---|---|

| 151 | |

| 120 | |

| 103 | |

| 87 | |

| 68 |