- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Get Data from Azure Data Lake Sore Gen2 (Not Dataf...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

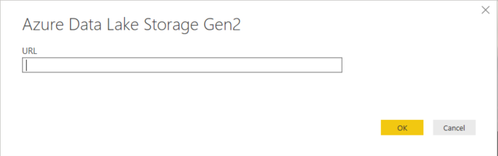

Get Data from Azure Data Lake Storage Gen2 (Not Dataflow)

Hello,

When using the new Get Data > Azure Data Lake Storage Gen2 connector, I realized I have to enter the same URL path that we use when connecting from a Power BI dataflow (on the Power BI Service side).

After choosing this Get Data option, the only input is that URL.

By comparison, when we add a CDM folder to a Power BI dataflow in the Power BI Service, the dialog also asks for a path.

That screen gives us a clue about what the path should be: the path to the model.json file.

When we look in the Azure portal at the ADLSg2 data created by a Power BI Service dataflow, that file exists, so I can open its properties and just copy the path.

My question is: suppose we have our own data in a separate CDM folder in the same data lake, but it was produced by something other than a Power BI Service dataflow, say an Azure Data Factory process. How do we get a model.json file for it?

I hope I have described the scenario clearly enough.

Basically, I want to access my CDM folder (ADLSg2 data) using Get Data in Power BI Desktop, but right now there is no model.json file in that folder.

Any advice on this case?

Thanks in advance.
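For a CDM folder written by something other than a dataflow (for example Azure Data Factory), one option is to write a model.json yourself. The sketch below assumes the commonly documented CDM model.json layout (top-level `name`, `version`, and `entities`, where each `LocalEntity` lists its `attributes` and `partitions`). The helper `build_model_json`, the entity and column names, and the CSV location are all hypothetical, options such as CSV header handling are not covered, and the output should be checked against the CDM model.json reference before relying on it.

```python
import json


def build_model_json(model_name, entities):
    """Build a minimal CDM model.json document as a dict (hypothetical helper).

    `entities` maps an entity name to (columns, partition_urls), where
    `columns` is a list of (column_name, cdm_data_type) pairs.
    Field names follow the model.json layout as commonly documented;
    verify them against the official reference before use.
    """
    doc = {"name": model_name, "version": "1.0", "entities": []}
    for entity_name, (columns, partition_urls) in entities.items():
        doc["entities"].append({
            "$type": "LocalEntity",
            "name": entity_name,
            # One attribute per column, in file order.
            "attributes": [
                {"name": col, "dataType": dtype} for col, dtype in columns
            ],
            # One partition per data file belonging to this entity.
            "partitions": [
                {"name": f"{entity_name}-{i}", "location": url}
                for i, url in enumerate(partition_urls)
            ],
        })
    return doc


if __name__ == "__main__":
    # Hypothetical entity, columns, and file location, for illustration only.
    model = build_model_json(
        "ExternalDB",
        {
            "customer": (
                [("CustomerId", "int64"), ("Name", "string")],
                ["https://mydatalake.dfs.core.windows.net/powerbi/ExternalDB/customer.csv"],
            )
        },
    )
    print(json.dumps(model, indent=2))
```

The resulting JSON would be saved as model.json at the root of the CDM folder.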


Hi, thanks.

I think I've found the solution already. It turns out we don't need model.json when using this new feature to connect to ADLSg2.

Just enter the full URL down to the file name. For example, if the file customer.csv is in the subfolder ExternalDB, I enter the URL like this:

https://mydatalake.dfs.core.windows.net/powerbi/ExternalDB/customer.csv
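The URL follows the pattern `https://<account>.dfs.core.windows.net/<filesystem>/<path>`. If a path is copied in Windows-style notation (for example `ExternalDB\customer.csv`), the separators need to become forward slashes in the URL. The helper below is a hypothetical sketch (not part of any SDK) that assumes this pattern and percent-encodes each path segment.

```python
from urllib.parse import quote


def adls_gen2_file_url(account, filesystem, path):
    """Return the dfs endpoint URL for a file in ADLS Gen2 (hypothetical helper).

    Backslashes in `path` are converted to forward slashes, because URL
    paths are separated by '/'. Each segment is percent-encoded so names
    containing spaces or special characters stay valid.
    """
    segments = [s for s in path.replace("\\", "/").split("/") if s]
    encoded = "/".join(quote(s, safe="") for s in segments)
    return f"https://{account}.dfs.core.windows.net/{filesystem}/{encoded}"


print(adls_gen2_file_url("mydatalake", "powerbi", r"ExternalDB\customer.csv"))
```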

The only problem is that I need to grant access to each file, one by one, in Azure Storage Explorer. It is quite tedious.

Thanks,


Hi @admin_xlsior,

I have not tried connecting with the Azure Data Lake Storage Gen2 connector in Power BI Desktop without a dataflow.

You could try connecting through the Azure Data Lake Storage REST API instead.

In addition, you could refer to the comment by Kanad Chatterjee, which should be helpful.

Best Regards,

Cherry

If this post helps, please consider accepting it as the solution to help other members find it more quickly.
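As a rough illustration of the REST route, the ADLS Gen2 "Path - List" operation is a GET on the file system URL with `resource=filesystem`, optionally filtered by `directory` and made `recursive`. The sketch below only builds that request URL and extracts file names from a parsed JSON response; the helper names are hypothetical, and authentication (for example an Azure AD bearer token), the required `x-ms-version` header, and paging through continuation tokens are assumed to be handled elsewhere.

```python
from urllib.parse import urlencode


def list_paths_url(account, filesystem, directory=None, recursive=True):
    """Build a 'Path - List' request URL for the ADLS Gen2 REST API (hypothetical helper)."""
    params = {"resource": "filesystem", "recursive": "true" if recursive else "false"}
    if directory:
        params["directory"] = directory
    return f"https://{account}.dfs.core.windows.net/{filesystem}?{urlencode(params)}"


def file_names(list_response):
    """Return the names of files (not directories) from a parsed List response.

    Entries without an isDirectory flag, or with it set to false, are
    treated as files. The flag is compared as text, so a JSON string
    "true" and a boolean True are both recognized.
    """
    return [
        p["name"]
        for p in list_response.get("paths", [])
        if str(p.get("isDirectory", "false")).lower() != "true"
    ]


print(list_paths_url("mydatalake", "powerbi", "ExternalDB"))
```

A listing like this could feed the file URLs into Power BI in bulk instead of typing them one at a time.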


Hi @admin_xlsior,

Glad to hear the issue is resolved. You can accept your reply as the solution so that other community members can benefit from it.

Best Regards,

Cherry



Hi, actually it's not really solved: I still need to grant access to the files one by one. What if I have thousands of them?

Thanks,
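For the thousands-of-files case, one option worth checking is a recursive ACL update on the folder instead of per-file changes. The sketch assumes the `azure-storage-file-datalake` Python package, whose `DataLakeDirectoryClient` provides `update_access_control_recursive(acl=...)`; the helper names and the object ID are hypothetical placeholders. Reading a file also requires execute (`x`) permission on every parent directory, so folders above the starting directory, including the container root, would still need that permission granted separately.

```python
def reader_acl_entry(object_id, permissions="r-x"):
    """Build one named-user ACL entry in ADLS Gen2 POSIX-style syntax (hypothetical helper)."""
    # Each position must be its flag letter or '-', in r, w, x order.
    valid = len(permissions) == 3 and all(
        ch in (flag, "-") for ch, flag in zip(permissions, "rwx")
    )
    if not valid:
        raise ValueError(f"invalid permission string: {permissions!r}")
    return f"user:{object_id}:{permissions}"


def grant_read_recursive(directory_client, object_id):
    """Add a read/execute entry to a directory and everything beneath it.

    `directory_client` is expected to be an azure.storage.filedatalake
    DataLakeDirectoryClient. It is passed in so this module has no
    import-time dependency on the SDK. The update_* call merges the entry
    into existing ACLs rather than replacing them.
    """
    acl = reader_acl_entry(object_id)
    return directory_client.update_access_control_recursive(acl=acl)


# Possible wiring (assumes azure-storage-file-datalake and azure-identity):
# from azure.identity import DefaultAzureCredential
# from azure.storage.filedatalake import DataLakeServiceClient
# service = DataLakeServiceClient(
#     account_url="https://mydatalake.dfs.core.windows.net",
#     credential=DefaultAzureCredential(),
# )
# directory = service.get_file_system_client("powerbi").get_directory_client("ExternalDB")
# grant_read_recursive(directory, "<object-id>")
```

Passing the client in keeps the ACL logic testable without an Azure account.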
