- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Equivalent to a new column usign OVER function...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Equivalent to a new column usign OVER functionality

Two columns : one is an ID column, with non-unique values. The second column SIZES contains integers. I want to create a third column with the maximum value of SIZES for each value of ID, without dropping any rows.

So I want to go from :

ID NUMS

1 0

1 4

1 7

2 0

2 0

And I want to produce:

ID NUMS MAXNUMS

1 0 7

1 4 7

1 7 7

2 0 0

2 0 0

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

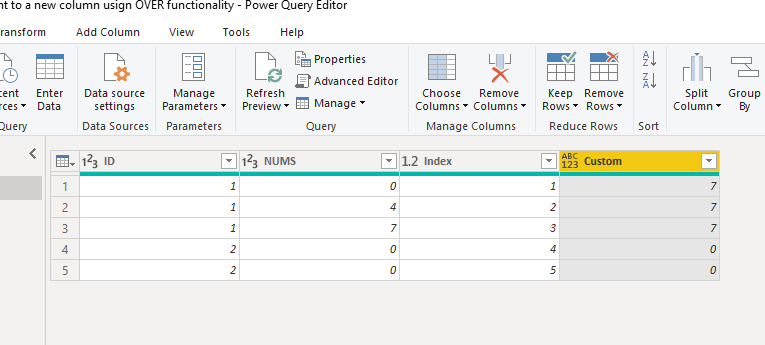

Using below M code:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlQ4tACOlHSUIEwDpVgdXHImeOTMwXJGeMzEKRcLAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [ID = _t, NUMS = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", Int64.Type}, {"NUMS", Int64.Type}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 1, 1),

#"Added Custom" = Table.AddColumn(#"Added Index", "Custom", each let i = [ID]

in

Table.Max(Table.SelectRows(#"Changed Type",each [ID] = i),"NUMS")[NUMS])

in

#"Added Custom"

And you will see:

For the related .pbix file,pls click here.

Kelly

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've come up with a performant solution - the ones so far did not work well on 120k rows. Basically, I created a (temporary) table of the grouped data, then merged this back into the main table. Something like this:

let

Source = whatever

Grouped = Table.Group(#"Source", col1, max(number))

Merged = Table.Merge(#"Source", #"Grouped", using col1)

Because this uses table processing rather than row processing, it runs pretty quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

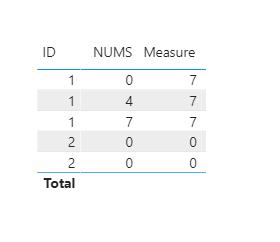

As line 4 and line 5 have the same values in each column,so when the data is put in a table visual ,you need to create an index column first,otherwise the 2 lines will be aggregated:

Go to query editor>add column>index column;

Then create a measure as below:

Measure = MAXX(FILTER(ALL('Table'),'Table'[ID]=SELECTEDVALUE('Table'[ID])),'Table'[ NUMS])

And you will see:

Or you can create a calculated column as below:

Column = CALCULATE(MAX('Table'[ NUMS]),ALLEXCEPT('Table','Table'[ID]))

And you will see:

For the related .pbix file,pls click here.

Kelly

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is it possible to do the same in M, so that I can push it into a Dataflow ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Using below M code:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlQ4tACOlHSUIEwDpVgdXHImeOTMwXJGeMzEKRcLAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [ID = _t, NUMS = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", Int64.Type}, {"NUMS", Int64.Type}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 1, 1),

#"Added Custom" = Table.AddColumn(#"Added Index", "Custom", each let i = [ID]

in

Table.Max(Table.SelectRows(#"Changed Type",each [ID] = i),"NUMS")[NUMS])

in

#"Added Custom"

And you will see:

For the related .pbix file,pls click here.

Kelly

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've come up with a performant solution - the ones so far did not work well on 120k rows. Basically, I created a (temporary) table of the grouped data, then merged this back into the main table. Something like this:

let

Source = whatever

Grouped = Table.Group(#"Source", col1, max(number))

Merged = Table.Merge(#"Source", #"Grouped", using col1)

Because this uses table processing rather than row processing, it runs pretty quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous add following measure

Max Nums = CALCULATE ( MAX ( Table[Nums] ), ALLEXCEPT ( Table, Table[Id] ) )Subscribe to the @PowerBIHowTo YT channel for an upcoming video on List and Record functions in Power Query!!

Learn Power BI and Fabric - subscribe to our YT channel - Click here: @PowerBIHowTo

If my solution proved useful, I'd be delighted to receive Kudos. When you put effort into asking a question, it's equally thoughtful to acknowledge and give Kudos to the individual who helped you solve the problem. It's a small gesture that shows appreciation and encouragement! ❤

Did I answer your question? Mark my post as a solution. Proud to be a Super User! Appreciate your Kudos 🙂

Feel free to email me with any of your BI needs.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 107 | |

| 93 | |

| 77 | |

| 65 | |

| 53 |

| User | Count |

|---|---|

| 147 | |

| 106 | |

| 104 | |

| 87 | |

| 61 |