- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Dax Technical Analysis for trading (RSI, STOCH RSI...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dax Technical Analysis for trading (RSI, STOCH RSI, EMA, MA, ...)

Hi,

I'm currently working on a report to analyze a few technical trading indicators. The major ones are RSI, stoch RSI, EMA and MA.

The table looks like this:

Symbol | TimeStamp | TimeFrame | Open | Close | Low | High | Volume From | Volume To

TimeStamp: date and time

TimeFrame: I could have deducted this with a measure but this would create more load, so decided to use SQL & powerquery to make seperate rows for timeframes 1h, 3h, 6h, 12h, 1d, 1w, 1m (all based on 1h).

... all others are self explanatory I guess.

I would like to start with the RSI (of last 14 records). Currenty I have the change between open & close:

Open: OpenX = OpenX = FIRSTNONBLANK(CoinData[Open],1)

Close: CloseX = CloseX = LASTNONBLANK(CoinData[Close],1)

Change: ChangeX = CALCULATE([CloseX]-[OpenX])

GainX = CALCULATE(if([ChangeX] >= 0 , [ChangeX],BLANK()))

LossX = CALCULATE(if([ChangeX] <= 0 , [ChangeX],BLANK()))

=> Based on the above, how do I get the averagex of the last 14 gainx records (including the current). Preferrably fixed per timeframe.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am looking to do the same thing as well, did you ever make any progress on this?

Also, how did you approach bringing your data in and storing it? Did you use SQL or did you do it through API or other services?

Personally I pulled my data in through API, 300 different tickers and I Have their entire history (daily). Takes about 2 min to refresh (always open to faster options)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How difficult was it to set up the webservice? I dont have any experience doing that

I was able to get a pretty solid database by generating .CSVs into folders. I have 2 types of folders, Historical and Current. The historical data loads 1x a day in the morning and current updates every 5 seconds.

The whole database takes about 2-3 minutes to generate.

From there I developed an SSIS package which loads the data into SQL staging, then from Staging I have stored procedures and jobs that complete a few calculations and clean the data.

Historical data loads 1x a day into production (Incremental load)

Current data loads every 15 minutes during market hours. (flash and fill technique)

My goal is to get the current data in a PUSH API environment (hence me asking about the webservice). I would IDEALLY like to get the current data to load into a Power BI dashboard -- as close to "live" as possible. Then I would create drill downs for each ticker (I have 500 of them) and I can drill into each ticker and get the historical analysis for each ticker on demand.

I also plan on running some Python or R along with this historical data so I can generate a rating system.

Please let me know what you did with the webservice, maybe we can bat some ideas off each other, sounds like we are in the same game here.

"Historical performance is not indicative of future results"

No... but it is much much better than guessing! 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @wlknsn,

Based on current information, I am not clear about what you were trying to achieve. Please follow this blog to provide more detailed information, such as sample dataset, desired output.

Regards,

Yuliana Gu

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Let's say I'm looking for a calculation as in the table below in the last column. So per timeframe, I want the average of the previous 3 [close] values. The sorting is always by the [Date] field.

| Date | TimeFrame | Close | AverageCloseLast3records |

| 01/01/2018 00:00 | Hour | 4 | |

| 01/01/2018 01:00 | Hour | 4,5 | |

| 01/01/2018 02:00 | Hour | 7 | 5,166666667 |

| 01/01/2018 03:00 | Hour | 8 | 6,5 |

| 01/01/2018 04:00 | Hour | 9 | 8 |

| 01/01/2018 05:00 | Hour | 10 | 9 |

| 01/01/2018 06:00 | Hour | 11 | 10 |

| 01/01/2018 00:00 | Day | 4 | |

| 01/02/2018 00:00 | Day | 12 | |

| 01/03/2018 00:00 | Day | 20 | 12 |

| 01/04/2018 00:00 | Day | 28 | 20 |

| 01/05/2018 00:00 | Day | 36 | 28 |

| 01/06/2018 00:00 | Day | 44 | 36 |

| 01/07/2018 00:00 | Day | 52 | 44 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I assume a filter combining:

EARLIER(table[timestamp]>=table[timestamp])

EARLIER(table[timeframe]=table[timeframe])

TOPN(3,table,table[timestamp],desc) and EARLIER.

So the average of the last 3 records (current and previous two) based on timestamp for each timeframe. But how to combine these?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Forget the previous post:

AverageLast3recordsClose = calculate(average(close),allexcept(table, table[timeframe]]

That gives the average per timeframe, but now this has to be limited to the current and last 2 records (sorted by timeframe).

Appreciate your help!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Anyone?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

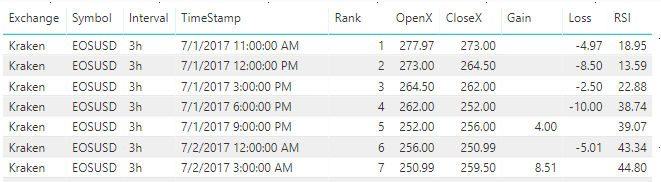

This is what I have so far, but still one question remaining in terms of RSI (PS: the formula below covers pretty much everything from the subject line when you tweak this.

1) you need to create a calculated rank column (not measure), which will rank every record grouped by "Exchange", "Symbol" & "Interval".

Rank = RANKX ( CALCULATETABLE ( AllCandleData, ALLEXCEPT ( AllCandleData, AllCandleData[Exchange], AllCandleData[Symbol], AllCandleData[Interval] ) ), AllCandleData[TimeStamp], , ASC )

2) Based on this rank, you can now start to calculate the RSI over for example 14-records.

RSI =

VAR

GainCalc = abs(CALCULATE (

AVERAGEx(AllCandleData, [Gain]),

filter(ALLEXCEPT ( AllCandleData,AllCandleData[Symbol],AllCandleData[Exchange] ),

AllCandleData[Rank] > (MAX(AllCandleData[Rank]) - 14 )

&& AllCandleData[Rank] <= MAX(AllCandleData[Rank])

)

)

)

VAR

LossCalc = abs(CALCULATE (

AVERAGEx(AllCandleData,[Loss]),

filter(ALLEXCEPT ( AllCandleData,AllCandleData[Symbol],AllCandleData[Exchange] ),

AllCandleData[Rank] > (MAX(AllCandleData[Rank]) - 14 )

&& AllCandleData[Rank] <= MAX(AllCandleData[Rank])

)

)

)

RETURN

if(LossCalc=0 , 100 , 100-(100/(1+DIVIDE(

GainCalc,LossCalc,BLANK()))))This results in a table like this:

Now for my final question: what needs to be changed in the current RSI formula so I get the rsi per interval (fixed). So Seperate measures. As if you would apply (FILTER(ALL(table[Interval]), table[Interval] = 3h). Unfortunately, I don't seem to be able to add this in combination with the ALLEXCEPT. The result should be similar to be below (each column is seperate measure).

The result should be that on whatever timestamp I see in the column, I would have all the various RSI-interval values shown side by side as in the above.

PS: the RSI formula is rather CPU consuming. If you have any recommendations for optimizing this ... always welcome!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Looking to do these types of calculations under the same conditions. Any further progress to report?

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 115 | |

| 99 | |

| 88 | |

| 70 | |

| 62 |

| User | Count |

|---|---|

| 151 | |

| 120 | |

| 103 | |

| 87 | |

| 68 |