- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Data Modeling Issue/Fun?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Data Modeling Issue/Fun?

OK, this will take a bit to explain so I hope somebody stays with me for this because I'm really interested in the solution to this problem. At issue is the proper calculation of "roll up" calculations based upon a set of attribute scores for multiple customers.

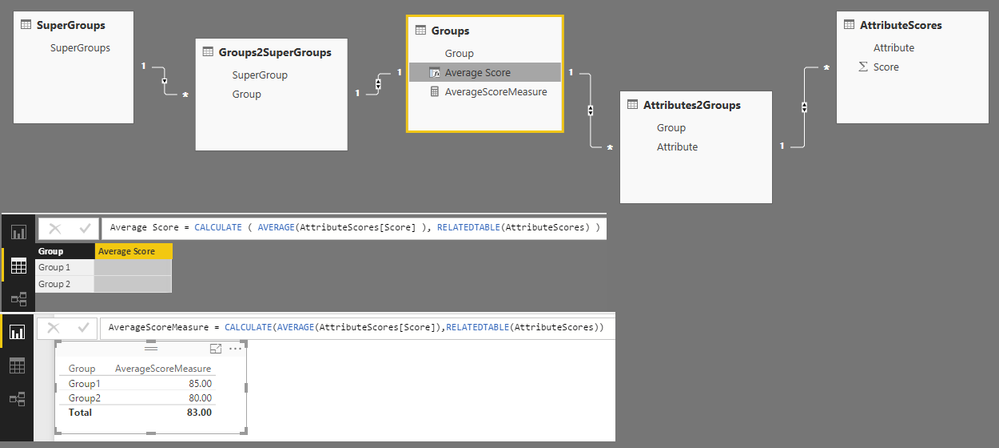

I will first present the data model as created (and working) for a single customer:

Given the following tables:

[SuperGroups] 1-* [Groups2SuperGroups] 1-1 [Groups] 1-* [Attributes2Groups] 1-* [AttributeScores]

With the following data:

AttributeScores

Attribute Score

Attribute1 100

Attribute2 75

Attribute3 80

Attribute4 100

Attribute5 60

Attributes2Groups

Group Attribute

Group1 Attribute1

Group1 Attribute2

Group1 Attribute3

Group2 Attribute4

Group2 Attribute5

Groups

Group

Group1

Group2

Groups2SuperGroups

SuperGroup Group

SuperGroup1 Group1

SuperGroup1 Group2

SuperGroups

SuperGroup

SuperGroup1

Given this, I can create a calculated column in Groups such that:

AverageScore = CALCULATE(AVERAGE(AttributeScores[Score]),RELATEDTABLE(AttributeScores))

I then have the following in the Groups table:

Groups

Group AverageScore

Group1 85

Group2 80

I can then create a custom column in the SuperGroups table such that:

MinScore = CALCULATE(MIN(Groups[AverageScore]),RELATEDTABLE(Groups))

I then have the following in the SuperGroups table:

SuperGroups

SuperGroup MinScore

SuperGroup1 80

This is all correct.

However, when I revise my AttributeScores table to include Customer:

AttributeScores

Attribute Score Customer

Attribute1 100 Customer1

Attribute2 75 Customer1

Attribute3 80 Customer1

Attribute4 100 Customer1

Attribute5 60 Customer1

Attribute1 50 Customer2

Attribute2 25 Customer2

Attribute3 30 Customer2

Attribute4 50 Customer2

Attribute5 10 Customer2

My calculations no longer work as columns with RLS because the columns calculate their result based upon all of the customers instead of just one of the customers.

In my Groups table, I have:

Groups

Group AverageScore

Group1 60

Group2 55

And in SuperGroup table I have

SuperGroup

SuperGroup1 55

Even if I apply RLS and limit to a single customer, I get these values versus the values for the individual customer, which should be:

Customer1: AverageScore = 85 and 80 and MinScore = 80

Customer2: AverageScore = 35 and 30 and MinScore = 30

This is not entirely unexpected given my understanding of how custom columns work. So, my solution was to calculate AverageScore as a Measure thus:

AverageScoreMeasure = CALCULATE(AVERAGE(AttributeScores[Score]),RELATEDTABLE(AttributeScores))

This works like a champ for AverageScore with RLS, no problem.

The issue that I am running into is the final step, how do I get the MIN of a measure?

I thought that perhaps I could use SUMMARIZE to create a new table that essentially was my Group table but with the measure in it but I can't seem to use a measure with SUMMARIZE.

I am hoping that I am just missing something simple here and that this is not a fundamental road block. Anyone have any ideas?

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

what happen using MINX

MinScoreMeasure = MINX ( SUMMARIZECOLUMNS ( Groups[Group] , "AVG",[AverageScoreMeasure] ), [AVG] )

Lima - Peru

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

what happen using MINX

MinScoreMeasure = MINX ( SUMMARIZECOLUMNS ( Groups[Group] , "AVG",[AverageScoreMeasure] ), [AVG] )

Lima - Peru

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

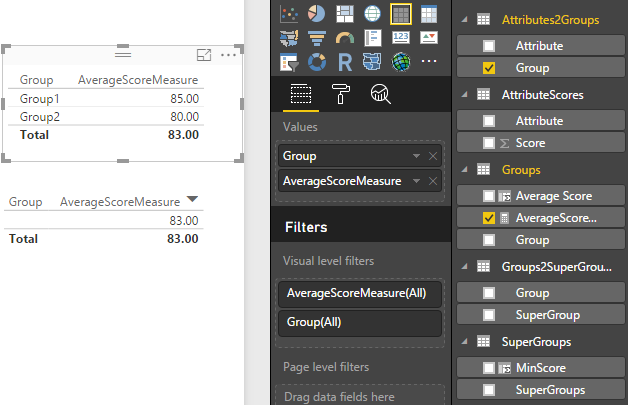

Okay I'm tyring to get to @Vvelarde's solution

However I've stumbled onto this - the Calculated Column AverageScore does NOT return anything - but the Measure works

I think I've set up everything as it should be especially since the Measure seems to work...

Any ideas...

EDIT:

If use a [Group] column from any other table other than Attributes2Groups

I get only the overall average 83 not per Group!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Brilliant @Vvelarde! Many thanks!

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Vvelarde one last issue that perhaps you have an answer for. When I place the measure in a card visualization, it works great. However, any other visualization returns the following error:

Calculation error in measure 'SuperGroups'[MinScoreMeasure]: SummarizeColumns() and AddMissingItems() may not be used in this context.

Any thoughts? Full error details below:

Feedback Type:

Frown (Error)

Timestamp:

2017-02-09T13:41:56.8581058Z

Local Time:

2017-02-09T08:41:56.8581058-05:00

Product Version:

2.41.4581.361 (PBIDesktop) (x64)

Release:

November 2016

IE Version:

11.576.14393.0

OS Version:

Microsoft Windows NT 10.0.14393.0 (x64 en-US)

CLR Version:

4.6.2. or later [Release Number = 394802]

Workbook Package Info:

1* - en-US, fastCombine: Disabled.

Peak Working Set:

418 MB

Private Memory:

322 MB

Peak Virtual Memory:

34 GB

Error Message:

MdxScript(Model) (2, 😎 Calculation error in measure 'SuperGroups'[MinScoreMeasure]: SummarizeColumns() and AddMissingItems() may not be used in this context.

User ID:

215d25e7-bd06-4ec3-bb13-adc1514f7021

Session ID:

f6259b9c-5791-41c0-b251-6b4870939a3b

Telemetry Enabled:

True

Model Default Mode:

Import

Snapshot Trace Logs:

C:\Users\gdeckler\AppData\Local\Microsoft\Power BI Desktop\FrownSnapShot1757248223.zip

Performance Trace Logs:

C:\Users\gdeckler\AppData\Local\Microsoft\Power BI Desktop\PerformanceTraces.zip

Enabled Preview Features:

PBI_RedShift

PBI_Impala

PBI_Snowflake

PBI_shapeMapVisualEnabled

PBI_allowBiDiCrossFilterInDirectQuery

PBI_clusteringEnabled

PBI_esriEnabled

PBI_mobileReportAuthoringEnabled

Enabled DirectQuery Options:

DirectQuery_Unrestricted

Cloud:

GlobalCloud

Activity ID:

f6259b9c-5791-41c0-b251-6b4870939a3b

Time:

Thu Feb 09 2017 08:41:09 GMT-0500 (Eastern Standard Time)

Version:

2.41.4581.361 (PBIDesktop)

Error Code:

rsDataShapeProcessingError

OData Error Message:

Failed to execute the query.

DPI Scale:

200%

Formulas:

section Section1;

shared AttributeScores = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WciwpKcpMKi1JNVTSUTI0MACSzqXFJfm5qUWGSrE6SAqMgFLmprjljYFSFnj0mxCyAGS2GR55kAtNkeWNMB1oZIpbHuRAYzz6TQiYbwr2AKp8LAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Attribute = _t, Score = _t, Customer = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Score", Int64.Type}})

in

#"Changed Type";

shared Groups = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45Wci/KLy0wVIrVgTKNlGJjAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Group = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Group", type text}})

in

#"Changed Type";

shared Attributes2Groups = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45Wci/KLy0wVNJRciwpKcpMKi1JNVSK1cEmboRD3BghboQsboJD3FQpNhYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Group = _t, Attribute = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Group", type text}, {"Attribute", type text}})

in

#"Changed Type";

shared SuperGroups = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCi4tSC1yL8ovLTBUio0FAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [SuperGroup = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SuperGroup", type text}})

in

#"Changed Type";

shared Groups2SuperGroups = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WCi4tSC1yL8ovLTBU0lGCMmJ1sEoYKcXGAgA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [SuperGroup = _t, Group = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SuperGroup", type text}, {"Group", type text}})

in

#"Changed Type";

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Summarizecolumns have some problem working with filter context.

https://www.sqlbi.com/articles/introducing-summarizecolumns/

The SUMMARIZECOLUMNS function is still not fully supported in a modified filter context. For example, it cannot be used within a measure in an expression of ADDCOLUMNS. Please, evaluate whether SUMMARIZE can work in all the conditions you want to support before using it in a measure.

You can change the measure to this:

MinScoreMeasure = MINX(SUMMARIZE(Groups,Groups[Group],"AVG";[AverageScoreMeasure]),[AVG])

Lima - Peru

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 110 | |

| 97 | |

| 78 | |

| 64 | |

| 55 |

| User | Count |

|---|---|

| 143 | |

| 109 | |

| 89 | |

| 84 | |

| 66 |