- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- DAX running total optimization : Using date column...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

DAX running total optimization : Using date column from dimension vs date from fact

Problem : I have a fact table called Productivity. It tracks actual units of work performed and actual hours it took to perform the work. It also includes forecast hours and forecast units. It is connected to three dimensions as shown below. Note that those dimensions are shared between multiple fact tables which are not relevant to this question. The productivity fact table tracks data on daily baisis i.e. data is tracked for each date. I am unable to roll it up because of the business need. I need to calculate divide running total for units by running total for hours.

Total number of rows in fact table : 32931185

I have slicer on each dimension. I have added filters to slicer so that if one slicer is selected, it narrows down values in other slicers.

Here is the measure I am trying to compute:

To Date U/M =

VAR MaxDate = MAX('Date'[Date]) //Maximum visibile date

VAR ActualUnitsToDate = CALCULATE(

SUM(Productivity[Units]) // Sum of units

,ALL('Date'[Date]) // Ignore filters over date

,'Date'[Date]<=MaxDate // All filter on the date

)

VAR ActualHoursToDate = CALCULATE(SUM(Productivity[ActualHours]) //Sum of hours

,ALL('Date'[Date]) // Ignore filters over date

,'Date'[Date]<=MaxDate) // All filter on the date

RETURN DIVIDE(ActualUnitsToDate,ActualHoursToDate,0)

This measure runs forever. So to scale down the problem, I tried calculating only ActualUnitsToDate.

When I use date column from dimension table, ActualUnitsToDate takes forever. When I use date column from productivity fact table, it takes about 25000 ms. Still not really acceptable but atleast it returns the result.

Questions :

- What does date column from fact perform better than the one from date dimension?

- What couldd cause the simple running total calculation so slow. I understand that this is intensive considering the size of the dataset ( 32931185 rows). What can I do to improve the performance?

EDIT : It seems that performance is also impaced by which column I use in visual. Date from fact vs date from dimension.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@tvaishnav You could try this:

To Date U/M =

VAR MaxDate = MAX('Date'[Date]) //Maximum visibile date

VAR __Table = FILTER(ALL('Productivity'), [Date] < MaxDate)

VAR ActualUnitsToDate = SUMX(__Table, [Units]) // Sum of units

VAR ActualHoursToDate = SUMX(__Table, [ActualHours]) //Sum of hours

RETURN DIVIDE(ActualUnitsToDate,ActualHoursToDate,0)@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

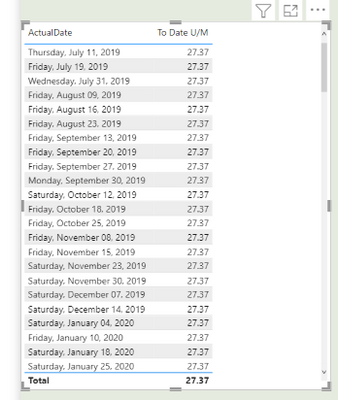

@Greg_Deckler Your measure returns same value for each date. I can see why. VAR __Table gives a intermediate table. SUMX performs sum over the entire __Table without breaking it by date. Here is how it looks:

I think I need to use calculate function around SUMX. But I am unable to change context on __Table via calculate. Any thoughts on how I can accomplish this?

I do understand what you are trying to do here. It is certainly faster.

However, this measure also performs pooly when my date column in the visual comes from date dimension. It returns results instantly if I use date column from fact table in the visual. Why would that be? It is a simple one to many unidirectional relationship.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@tvaishnav So, probably something I'm not understanding about your data model or where the values in your visual are coming from. If you get your max date in context and then FILTER ALL of your fact table where the date column in that fact table is less than that date, then I don't know why you would get the same value for all dates unless something else is wonky. If you have a 1:* relationship between your date table and fact table and it is single direction, then if you used the date column from the fact table in that visual, then the MAX of 'date'[date] would return the largest date in the date table and that would explain it. So, if that is the case, use MAX('Productivity'[Date]) instead in the measure.

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Greg_Deckler I will try to explain this. I can attach a sample PBI but it won't replicate the scale of the problem.

My understanding of best practice is as follows. Slicers / Filters should always come from dimensions and numbers that we use for computation should come from fact tables. Going by that logic, I always try to pull date column from date dimension when used in slicer or used as a column in visual. So I think VAR MaxDate = MAX(Date_Dim[Date]) is fine.

When I used date column from date dimension and use the measure you gave me, it just runs forever. However, when I use date column from fact table, it returns results pretty instantly but with repeated values (as in the case in my previous reply). I don't really want to use date from fact but I was just testing how things out.

Does that explanation help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@tvaishnav Right, so if you use date column from the fact table in the visual, then the measure should be:

To Date U/M =

VAR MaxDate = MAX('Productivity'[Date]) //Maximum visibile date

VAR __Table = FILTER(ALL('Productivity'), [Date] < MaxDate)

VAR ActualUnitsToDate = SUMX(__Table, [Units]) // Sum of units

VAR ActualHoursToDate = SUMX(__Table, [ActualHours]) //Sum of hours

RETURN DIVIDE(ActualUnitsToDate,ActualHoursToDate,0)Otherwise, because of the unidirectional relationship the other version would always return the maximum date in the date table with no filters (so whatever the biggest date is in your date table)

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Greg_Deckler Thank you so much for your time. Few questions :

Is it a good practice to use dates from fact table instead of dimension table? My understanding is that is should not matter because they are connected but what is the best practice here?

When I used date from productivity fact table and use Max(Productivity[Date]), measure seems to be running forever. So I guess I am back at the starting point. The operations ended with this message :

Details :

Feedback Type:

Frown (Error)

Timestamp:

2022-08-22T13:53:50.2656188Z

Local Time:

2022-08-22T09:53:50.2656188-04:00

Session ID:

4301c49c-83dc-4605-b6a1-ae8400c41578

Release:

August 2022

Product Version:

2.108.825.0 (22.08) (x64)

Error Message:

There's not enough memory to complete this operation. Please try again later when there may be more memory available.

OS Version:

Microsoft Windows NT 10.0.19044.0 (x64 en-US)

CLR Version:

4.8 or later [Release Number = 528372]

Peak Virtual Memory:

54.9 GB

Private Memory:

984 MB

Peak Working Set:

1.22 GB

IE Version:

11.789.19041.0

User ID:

618d1710-c07c-4212-80d4-377575658fee

Workbook Package Info:

1* - en-US, Query Groups: 0, fastCombine: Disabled, runBackgroundAnalysis: False.

Telemetry Enabled:

True

Snapshot Trace Logs:

C:\Users\tvaishnav\Microsoft\Power BI Desktop Store App\FrownSnapShot3f16b3a4-2eae-4226-8870-2d458ebaff1e.zip

Model Default Mode:

Import

Model Version:

PowerBI_V3

Performance Trace Logs:

C:\Users\tvaishnav\Microsoft\Power BI Desktop Store App\PerformanceTraces.zip

Enabled Preview Features:

PBI_enableWebView2

PBI_mobileAuthoringFormattingUI

PBI_sparklines

Disabled Preview Features:

PBI_shapeMapVisualEnabled

PBI_SpanishLinguisticsEnabled

PBI_qnaLiveConnect

PBI_azureMapVisual

PBI_compositeModelsOverAS

PBI_enhancedTooltips

PQ_WebView2Connector

PBI_scorecardVisual

PBI_fieldParametersSuperSwitch

Disabled DirectQuery Options:

TreatHanaAsRelationalSource

Cloud:

GlobalCloud

DPI Scale:

150%

Supported Services:

Power BI

Formulas:

section Section1;

shared PRHours = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint", [Query="SELECT e.PRCo as CompanyKey#(lf),CAST(e.PRCo as varchar) + ' | ' +cast(e.Employee as varchar) + ' | ' + cast(h.PRGroup as varchar) AS EmployeeKey#(lf),cast(e.PRCo as varchar) + ' | '+ cast(h.EarnCode AS varchar) AS EarnCodeKey#(lf),LTRIM(RTRIM(e.PRCo))+' | '+LTRIM(RTRIM(h.Job)) AS ContractKey#(lf),LTRIM(RTRIM(h.Phase)) + ' | ' + jp.Description AS Phase#(lf),cast(e.PRCo as varchar)+ ' | ' + ltrim(rtrim(ISNULL(h.Craft,e.Craft)))+' | ' +ISNULL(h.Class,e.Class) AS Trade#(lf),CAST(e.PRCo as varchar) + ' | ' + LTRIM(RTRIM(h.Equipment)) AS EquipmentKey#(lf),PREndDate DateKey#(lf),CASE WHEN h.Job IS NULL AND h.Equipment IS NULL THEN 1#(lf)#(tab) WHEN h.Job IS NULL AND h.Equipment IS NOT NULL THEN 2#(lf)#(tab) WHEN h.Job IS NOT NULL AND h.Equipment IS NULL THEN 3#(lf)#(tab) END AS [PR Hours Category]#(lf),SUM(h.Hours) as Hours #(lf)FROM bPREH as e inner join bPRTH as h on e.Employee = h.Employee and e.PRCo = h.PRCo#(lf)left join bJCCM as m ON h.PRCo = m.JCCo and h.Job = m.Contract#(lf)left join bJCDM as d ON m.JCCo = d.JCCo and m.Department = d.Department#(lf)left join bPRCC as c ON h.PRCo = c.PRCo and isnull(h.Craft,e.Craft) = c.Craft and isnull(h.Class,e.Class) = c.Class#(lf)left join bPRGR as r ON h.PRCo = r.PRCo and h.PRGroup = r.PRGroup#(lf)left join bPREC as ec ON h.PRCo = ec.PRCo and h.EarnCode = ec.EarnCode #(lf)left join bJCJP as jp ON h.PRCo = jp.JCCo and h.Job = jp.Job and h.Phase = jp.Phase#(lf)WHERE h.EarnCode in (1,2,3,4) #(lf)Group by e.PRCo,e.Employee,h.PREndDate,h.Job,h.EarnCode,isnull(h.Craft,e.Craft),isnull(h.Class,e.Class),e.Craft,e.Class,h.PRGroup,h.Phase,jp.Description,h.Equipment"]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"CompanyKey", type text}})

in

#"Changed Type";

shared Company = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUfINV4rViVYyNwCyg12VYmMB", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Co = _t, Description = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Co", Int64.Type}, {"Description", type text}})

in

#"Changed Type";

shared Employee = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint", [Query="SELECT distinct#(lf)cast(e.PRCo as varchar) + ' | ' + cast(e.Employee as varchar) + ' | ' + cast(h.PRGroup as varchar) as EmployeeKey#(lf),e.Employee#(lf),e.FirstName#(lf),e.LastName#(lf),h.PRGroup#(lf),cast(h.PRGroup as varchar) + ' | ' + r.Description AS [PRGroup Full]#(lf),cast(e.Employee as varchar) + ' | ' + e.FirstName + ' ' +e.LastName AS [Employee Name]#(lf)FROM bPREH as e inner join bPRTH as h on e.Employee = h.Employee and e.PRCo = h.PRCo#(lf)left join bJCCM as m ON h.PRCo = m.JCCo and h.Job = m.Contract#(lf)left join bPRGR as r ON h.PRCo = r.PRCo and h.PRGroup = r.PRGroup#(lf)--WHERE (h.Job is not null) and m.ContractStatus = 1"])

in

Source;

shared Job = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint", [Query="SELECT #(lf)ltrim(rtrim(m.JCCo))+' | '+ltrim(rtrim(m.Contract)) AS ContractKey#(lf),ltrim(rtrim(m.Contract)) + ' | ' + m.Description [Job]#(lf),m.Department+' | '+d.Description as [Division]#(lf),CASE WHEN m.ContractStatus = 1 THEN 'Active'#(lf) WHEN m.ContractStatus <> 1 THEN 'Closed' END AS [Job Status]#(lf)FROM #(lf)bJCCM as m #(lf)left join bJCDM as d ON m.JCCo = d.JCCo and m.Department = d.Department"])

in

Source;

shared #"Earn Code" = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint", [Query="SELECT cast(PRCo as varchar) + ' | '+cast(EarnCode as varchar) as ECKey#(lf),cast(EarnCode as varchar) + ' | ' + Description AS EarnCode#(lf)FROM PREC#(lf)WHERE EarnCode IN (1,2,3,4)"])

in

Source;

shared Trade = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint", [Query="SELECT DISTINCT cast(c.PRCo as varchar) + ' | ' + cast(c.Craft as varchar)+ ' | ' + cast(c.Class as varchar) AS CraftClassKey#(lf),c.PRCo,c.Craft,c.Class,c.Description AS Trade,m.Description AS CraftName#(lf)FROM PRCC c LEFT JOIN PRCM m#(lf)ON c.PRCo = m.PRCo and c.Craft = m.Craft "]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"PRCo", type text}})

in

#"Changed Type";

shared #"PR Hours Category" = let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUfLKT1JIzEtRcC0szSzITc0rUSjNSyxLzMxJTMpJVYrViVYyAqqCy4JFjCH6lGJjAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"PR Hours Category" = _t, #"Category description" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"PR Hours Category", Int64.Type}, {"Category description", type text}})

in

#"Changed Type";

shared Equipment = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint"),

dbo_PBI_dEquipment = Source{[Schema="dbo",Item="PBI_dEquipment"]}[Data]

in

dbo_PBI_dEquipment;

shared #"Equipment Hours" = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint"),

dbo_PBI_fEquipment = Source{[Schema="dbo",Item="PBI_fEquipment"]}[Data]

in

dbo_PBI_fEquipment;

shared Productivity = let

Source = Sql.Database("sc-exp-sql2\Viewpoint", "Viewpoint"),

dbo_PBI_fProducitivity = Source{[Schema="dbo",Item="PBI_fProducitivity"]}[Data],

#"Changed Type" = Table.TransformColumnTypes(dbo_PBI_fProducitivity,{{"Mth", type date}, {"ActualDate", type date}})

in

#"Changed Type";

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@tvaishnav Well, the best practice is whatever works in your particular situation. 😉 Generally speaking through, what you described is the conventionally accepted wisdom. However, it's not foolproof as you saw when using the date from the productivity table was running faster.

Running totals by day across 33M records is likely going to be problematic through however you try to slice it. Basically, for each date, you have to put all 33M records into memory, then filter that down but in some cases you are still dealing with tens of millions of rows over and over again for certain dates. You might try filtering your visual so that it only returns a set number of days. Also, you might try calculating this in Power Query or as a calculated column so that it runs as part of refresh instead of every time the visual is loaded.

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: The Definitive Guide to Power Query (M)

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Greg_Deckler I guess I do get hung up on best practice a little too much.

Yes. I can push the calculation to source and be done with it. Again "best practice" bug made me question doing that since I am essentially brining same data again.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 113 | |

| 97 | |

| 85 | |

| 70 | |

| 61 |

| User | Count |

|---|---|

| 151 | |

| 121 | |

| 104 | |

| 87 | |

| 67 |