DAX optimization

Hi Experts

I am trying to optimize the following DAX measure. I'm not sure whether there is a better way to rewrite it: the visual associated with the measure is close to the 225-second timeout (the "not enough resources available" error).

Hi, @Anonymous

Here are some suggestions for optimizing your model.

- Remove unused tables or columns, where possible.

- Avoid distinct counts on fields with high cardinality – that is, millions of distinct values.

- Avoid fields with unnecessary precision and high cardinality. For example, you could split highly unique datetime values into separate columns (month, year, date, and so on). Or, where possible, round high-precision fields to lower their cardinality (for example, 13.29889 -> 13.3).

- Use integers instead of strings, where possible.

- Be wary of DAX functions that need to test every row in a table, such as RANKX. In the worst case, these functions can exponentially increase run time and memory requirements given linear increases in table size.

- When connecting to data sources via DirectQuery, consider indexing the columns that are commonly filtered or sliced. Indexing can greatly improve report responsiveness.
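The cardinality suggestions above could be sketched as calculated columns. This is only an illustration: the `Sales` table and the `Sales[OrderDateTime]` and `Sales[Amount]` columns are assumed names, not taken from the original model, and each definition is a separate calculated column.

```dax
-- Hypothetical calculated columns on an assumed Sales table.
-- Sales[OrderDateTime] and Sales[Amount] are illustrative names only.

-- Date part only: at most one distinct value per calendar day
Order Date =
DATE (
    YEAR ( Sales[OrderDateTime] ),
    MONTH ( Sales[OrderDateTime] ),
    DAY ( Sales[OrderDateTime] )
)

-- Hour of day: at most 24 distinct values
Order Hour = HOUR ( Sales[OrderDateTime] )

-- One decimal place, for example 13.29889 becomes 13.3
Amount Rounded = ROUND ( Sales[Amount], 1 )
```

The model only shrinks if the original high-precision column is no longer loaded, so in practice the same split is probably better done in Power Query or at the source rather than with calculated columns.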

For further information, please refer to the official documentation.

Best Regards

Allan

If this post helps, please consider accepting it as the solution to help other members find it more quickly.

Hi, @Anonymous

If one of the answers solved your problem, please mark it as the solution to help other members with the same problem find it more quickly. If not, let me know and I'll try to help you further. Thanks.

Best Regards

Allan

Hi @Anonymous ,

One thing I notice in your DAX expression is the RETURN part. Do you really need the IF condition around the DIVIDE function?

Without the IF condition, it should behave the same. Try replacing the RETURN part of the expression with:
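The replacement expression itself is missing from this copy of the thread, so the following is only a rough sketch of the idea, not the original suggestion. The measure name and both columns are hypothetical. The point is that DIVIDE already returns BLANK when the denominator is zero or blank, so a guarding IF is usually redundant.

```dax
Ratio =
VAR Numerator = SUM ( Sales[Sales Amount] )     -- hypothetical column
VAR Denominator = SUM ( Sales[Target Amount] )  -- hypothetical column
RETURN
    -- Instead of: IF ( Denominator <> 0, DIVIDE ( Numerator, Denominator ) )
    DIVIDE ( Numerator, Denominator )
```

If a value other than BLANK is wanted for the zero case, DIVIDE accepts it as an optional third argument, for example `DIVIDE ( Numerator, Denominator, 0 )`.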

Thanks, Pragati11. Just trying out your suggestion.

If "this month start" is a date, create a date table and move the calculation there.

Example

Cumm Sales = CALCULATE(SUM(Sales[Sales Amount]), FILTER(ALL('Date'), 'Date'[Date] <= MAXX('Date', 'Date'[Date])))

See if it improves performance

To get the best out of the time intelligence functions, make sure you have a date calendar and that it has been marked as the date table in model view. Also, join it with the date column of your fact table(s). Refer to:

https://radacad.com/creating-calendar-table-in-power-bi-using-dax-functions

https://www.archerpoint.com/blog/Posts/creating-date-table-power-bi

https://www.sqlbi.com/articles/creating-a-simple-date-table-in-dax/
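As a minimal sketch of the kind of date table those articles describe, a calculated table could look like the following. The date range and the extra column names are assumptions for illustration; they should be adjusted to cover the dates in your own fact table.

```dax
-- Hypothetical calculated table; the date range is an assumption.
Date =
ADDCOLUMNS (
    CALENDAR ( DATE ( 2020, 1, 1 ), DATE ( 2024, 12, 31 ) ),
    "Year", YEAR ( [Date] ),
    "Month Number", MONTH ( [Date] ),
    "Month", FORMAT ( [Date], "MMM" ),
    -- First day of the month, useful for month-level grouping
    "Month Start", DATE ( YEAR ( [Date] ), MONTH ( [Date] ), 1 )
)
```

After creating it, mark the table as a date table on its `[Date]` column and relate that column to the date column of the fact table.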

hi Amit

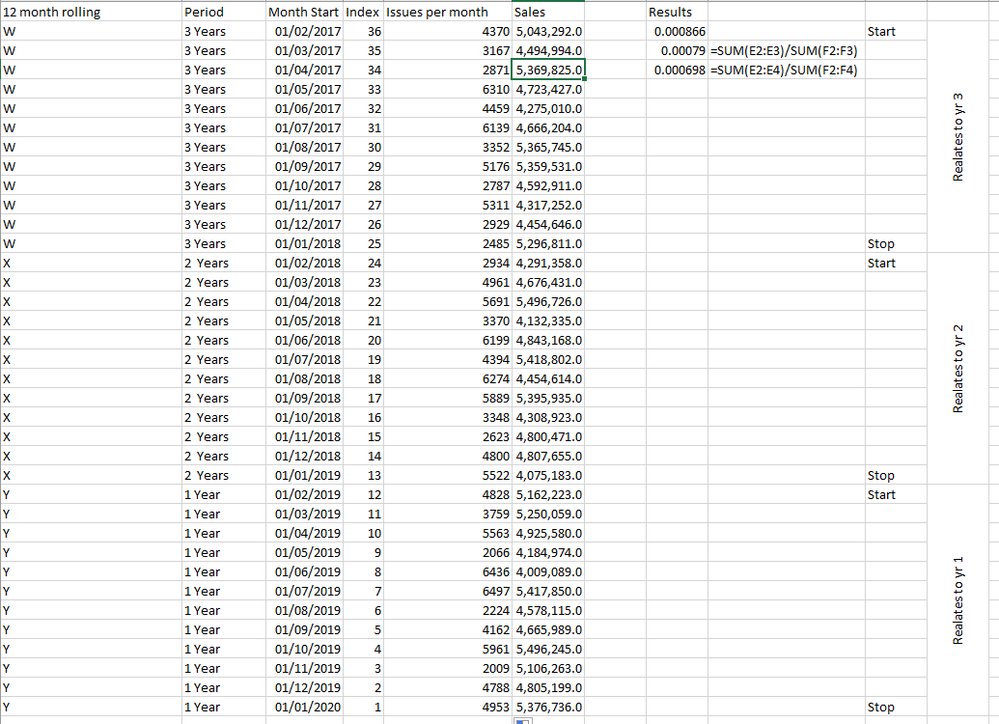

here is my date table. i have shown where i want the cumulative formula to start and end at for each of the 3 periods. I have included the excel formula on how the result is to be calculated. you could use the rolling month column??? this splits the periods up too
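One way the per-period requirement might be expressed is a cumulative total that restarts in each period. This is a sketch under assumptions: the screenshot is not reproduced here, so `'Date'[Period]` is a hypothetical column that labels each of the 3 periods (the rolling month column might serve, if it identifies the periods), and `Sales[Sales Amount]` is reused from the earlier example.

```dax
Cumulative Sales by Period =
VAR MaxDate = MAX ( 'Date'[Date] )            -- last visible date
VAR CurrentPeriod = MAX ( 'Date'[Period] )    -- hypothetical period label
RETURN
    CALCULATE (
        SUM ( Sales[Sales Amount] ),
        FILTER (
            ALL ( 'Date' ),
            -- Stay inside the current period and accumulate up to MaxDate
            'Date'[Period] = CurrentPeriod
                && 'Date'[Date] <= MaxDate
        )
    )
```

Capturing the maximum date and period in variables before the FILTER keeps the intent explicit: each row of the visual accumulates only the dates that belong to its own period.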
