- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Converting Excel Formulas to DAX

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Converting Excel Formulas to DAX

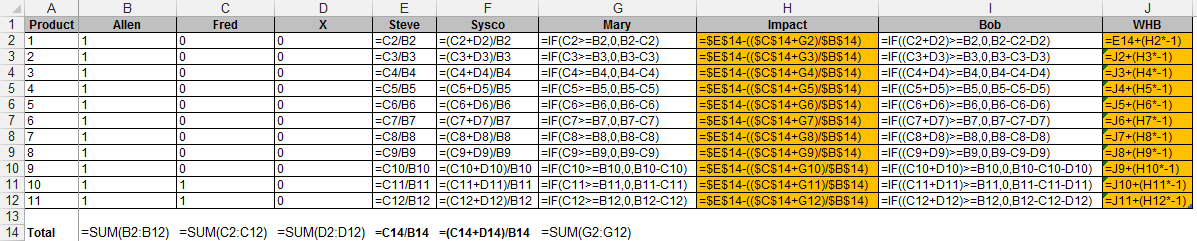

Hi there, I am trying to convert an Excel document into my data model and am having trouble with absolute referencing. Here is a screenshot of the data:

Columns H and J are the I am having trouble with. For Column H, how do I reference the totals of columns E,C, and B while getting the rows for row G? I assumed it would be the sum function of that column "Sum(Impact)" but the end result is incorrect. I have tried using the Earlier function but need some direction to solving this problem. The calculated field/measure gives me incorrect numbers when it does work. I also need additional help with Column J which I have yet to pursue as hard since it uses Column H.

Notes:

- All fields are numerical

- Data has been hidden for privacy reasons

- I have created the working formulas as calculated columns but did briefly experiment with calculated columns, however the datasheet will be long so I do not want the column to calculate for all rows but if it will fix the formula, I will work with that as well.

Any help would be appreciated in leading me in the right direction, thanks in advance.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Didn't have time to read the whole thread, but your WHB is impossible in DAX as you've got it defined. Luckily we can redefine it in a much more reasonable way (in terms of fields being accessed). Here are all the measures in clean DAX for you. It should be a good exercise in observing row vs filter context.

DAX isn't really the tool of choice for this, though. This sort of data munging should be done before the model is loaded. Unless you want these as measures, in which case, most of the ALL()s should be replaced with ALLSELECTED(), and the raw column references will have to be wrapped in SUM()s.

Steve = DIVIDE( SomeDamnTable[Fred], SomeDamnTable[Allen] ) // All row context Sysco = DIVIDE( SomeDamnTable[Fred] + SomeDamnTable[X] // All row context ,SomeDamnTable[Allen] ) Mary = IF( SomeDamnTable[Fred] >= SomeDamnTable[Allen] // All row context ,0 ,SomeDamnTable[Allen] - SomeDamnTable[Fred] ) Impact = CALCULATE( // Do this whole thing in a filter context made up of the entire table DIVIDE( SUM( SomeDamnTable[Fred] ) ,SUM( SomeDamnTable[Allen] ) ) ,ALL( SomeDamnTable ) ) - DIVIDE( CALCULATE( // this CALCULATE is in a filter context of the entire table SUM( SomeDamnTable[Fred] ) ,ALL( SomeDamnTable ) ) + SomeDamnTable[Mary] // This is row context ,CALCULATE( // this calculate is in the filter context of the whole table SUM( SomeDamnTable[Allen] ) ,ALL( SomeDamnTable ) ) ) Bob = IF( // all row context ( SomeDamnTable[Fred] + SomeDamnTable[X] ) >= SomeDamnTable[Allen] ,0 ,SomeDamnTable[Allen] - SomeDamnTable[Fred] - SomeDamnTable[X] ) WHB =

CALCULATE(

DIVIDE(

SUM( SomeDamnTable[Fred] )

,SUM( SomeDamnTable[Allen] )

)

,ALL( SomeDamnTable )

) - CALCULATE(

SUM( SomeDamnTable[Impact] )

,FILTER(

ALL( SomeDamnTable )

,SomeDamnTable[Index] <= EARLIER( SomeDamnTable[Index] )

)

)

You should try to do this sort of thing before your data hits your model. Here's some Power Query to get you there. You can examine all this in the .pbix here.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSAWMDMI7ViVYywhAxxhAxwRAxxRAxwxAxxxCxwBCxxBAxNIAKGSKEDFGFYgE=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Product = _t, Allen = _t, Fred = _t, X = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Product", Int64.Type}, {"Allen", Int64.Type}, {"Fred", Int64.Type}, {"X", Int64.Type}}),

Steve = Table.AddColumn(#"Changed Type", "Steve", each [Fred] / [Allen]),

Sysco = Table.AddColumn(Steve, "Sysco", each ( [Fred] + [X] ) / [Allen]),

Mary = Table.AddColumn(Sysco, "Mary", each if [Fred] >= [Allen]

then 0

else [Allen] - [Fred]),

Impact = Table.AddColumn(Mary, "Impact", each ( List.Sum( Mary[Fred] ) / List.Sum( Mary[Allen] ) )

- ( ( List.Sum( Mary[Fred] ) + [Mary] )

/ List.Sum( Mary[Allen] ) )),

Bob = Table.AddColumn(Impact, "Bob", each if ( [Fred] + [X] ) >= [Allen]

then 0

else [Allen] - [Fred] - [X]),

Index = Table.AddIndexColumn(Bob, "Index", 1, 1),

WHB = Table.AddColumn(Index, "WHB", each let

CurrentRow = [Index]

,Base =

( List.Sum( Index[Fred] )

/ List.Sum( Index[Allen] ) )

,SumImpact =

List.Sum(

Table.Column(

Table.SelectRows( Index

, each [Index] <= CurrentRow )

,"Impact" ) )

,WHB = Base - SumImpact

in

WHB),

#"Removed Columns" = Table.RemoveColumns(WHB,{"Index"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Removed Columns",{{"Impact", Currency.Type}, {"WHB", type number}, {"Mary", Int64.Type}, {"Sysco", Int64.Type}, {"Steve", Int64.Type}, {"X", Int64.Type}, {"Fred", Int64.Type}, {"Allen", Int64.Type}, {"Product", Int64.Type}, {"Bob", Int64.Type}})

in

#"Changed Type1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

when your DAX calculation is to much complicated to create and cause perfomance issue, try to push down the complexity in your ETL in order to transform and expose a comprehensive oriented analytical model.

Data preparation is the key to build and effective and performant analytical model.

This have been always the case on a standard BI Project, and still the same on BI Self-Service.

In your case I would have precaculated some metric with Power Query to create by the end a very simple DAX formula.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@greggyb @fbrossard Thank you both for the suggestions. I have moved the majority of my former measures upstream to where it calculates before it hits the data model. For now, I am only using Steve, Sysco, Impact, and WHB as measures in the data model.

I have also created an index column to handle the sorting, however, I get a semantic error for the WHB formula:

WHB =

CALCULATE(

DIVIDE(

SUM( SomeDamnTable[Fred] )

,SUM( SomeDamnTable[Allen] )

)

,ALL( SomeDamnTable )

) - CALCULATE(

SUM( SomeDamnTable[Impact] )

,FILTER(

ALL( SomeDamnTable )

,SomeDamnTable[Index] <= EARLIER( SomeDamnTable[Index] )

)

)

"Earlier/Earliest refers to an earlier row context which doesn't exist."

However, the sorting can be done manually as long as the WHB formula works.

Thanks in advance

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

WHB was defined as a calculated column in my model. That error indicates you're using it as a measure.

Use MAX(), which will be evaluated in the filter context of the visualization, rather than EARLIER() which depends, as the error indicates, on a row context that cannot exist in the top level of a measure evaluation in a visualization.

I've not put much thought toward using these as measures, but I can't see much issue. Subtotals will be calculated as of the item in that subtotal with the greatest value for [Index].

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Should IMPACT also be used as a Calculated Column?

I am asking because WHB as a calculated column throws out an error saying "the sum function only accepts a column reference as the argument number 1."

The error highlights the IMPACT measure, however, moving IMPACT to a calculated column results in the same number in every row.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1) Impact is not the same for every row. The .pbix file I shared has identical results to your expected results sample in this thread; the last two rows are 0, the rest are -9.09%.

2) What are your reporting requirements? Whether these should be measures or columns in the model depends on that.

You wanted an output table identical to Excel, and I can do that with very little understanding of your problem. Answering any other questions will be an exercise in frustration for all if we're not both clear on the requirements for the final solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@greggyb The reporting requirement just asks for accurate data so it does not matter if it is a measure or column as long as the correct results are reached. I am currently using the data model in Excel to prepare the data for PowerBI when we transfer our reports over to PowerBI. Everything is working except for the WHB formula. That is the final piece of the puzzle.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Accurate data is not a sufficient requirement.

Will reporting always be at the grain of the table, or will we expect to further summarize these values? What should interaction with slicers and filters look like?

If it's all detail reporting (at the table grain in the sample you've provided), then you can and probably should persist everything in the table (and that table should probably be in SQL Server - DAX and Tabular are analytical technologies, not terribly well suited to detail-level reporting).

If, instead these values must be aggregated, we need to understand what aggregation looks like. Does it make sense to do simple sums, averages, maxes, mins, etc on these values as calculated columns, or should the numerators and denominators be recalculated based on filter context?

Accurate implies different requirements based on the answers to the above.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, the reporting will always be at the grain of the table. This data will only be used for report and is separate from everything else so simple sums, averages, maxes, etc will work.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Then choose among the Power Query code and DAX calculated columns in the .pbix file I shared. Better to represent these as columns.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great, thanks for all the help. I really appreciate it. Your first post in the thread really answered a lot of questions so I'll mark that as the answer.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Didn't have time to read the whole thread, but your WHB is impossible in DAX as you've got it defined. Luckily we can redefine it in a much more reasonable way (in terms of fields being accessed). Here are all the measures in clean DAX for you. It should be a good exercise in observing row vs filter context.

DAX isn't really the tool of choice for this, though. This sort of data munging should be done before the model is loaded. Unless you want these as measures, in which case, most of the ALL()s should be replaced with ALLSELECTED(), and the raw column references will have to be wrapped in SUM()s.

Steve = DIVIDE( SomeDamnTable[Fred], SomeDamnTable[Allen] ) // All row context Sysco = DIVIDE( SomeDamnTable[Fred] + SomeDamnTable[X] // All row context ,SomeDamnTable[Allen] ) Mary = IF( SomeDamnTable[Fred] >= SomeDamnTable[Allen] // All row context ,0 ,SomeDamnTable[Allen] - SomeDamnTable[Fred] ) Impact = CALCULATE( // Do this whole thing in a filter context made up of the entire table DIVIDE( SUM( SomeDamnTable[Fred] ) ,SUM( SomeDamnTable[Allen] ) ) ,ALL( SomeDamnTable ) ) - DIVIDE( CALCULATE( // this CALCULATE is in a filter context of the entire table SUM( SomeDamnTable[Fred] ) ,ALL( SomeDamnTable ) ) + SomeDamnTable[Mary] // This is row context ,CALCULATE( // this calculate is in the filter context of the whole table SUM( SomeDamnTable[Allen] ) ,ALL( SomeDamnTable ) ) ) Bob = IF( // all row context ( SomeDamnTable[Fred] + SomeDamnTable[X] ) >= SomeDamnTable[Allen] ,0 ,SomeDamnTable[Allen] - SomeDamnTable[Fred] - SomeDamnTable[X] ) WHB =

CALCULATE(

DIVIDE(

SUM( SomeDamnTable[Fred] )

,SUM( SomeDamnTable[Allen] )

)

,ALL( SomeDamnTable )

) - CALCULATE(

SUM( SomeDamnTable[Impact] )

,FILTER(

ALL( SomeDamnTable )

,SomeDamnTable[Index] <= EARLIER( SomeDamnTable[Index] )

)

)

You should try to do this sort of thing before your data hits your model. Here's some Power Query to get you there. You can examine all this in the .pbix here.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSAWMDMI7ViVYywhAxxhAxwRAxxRAxwxAxxxCxwBCxxBAxNIAKGSKEDFGFYgE=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Product = _t, Allen = _t, Fred = _t, X = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Product", Int64.Type}, {"Allen", Int64.Type}, {"Fred", Int64.Type}, {"X", Int64.Type}}),

Steve = Table.AddColumn(#"Changed Type", "Steve", each [Fred] / [Allen]),

Sysco = Table.AddColumn(Steve, "Sysco", each ( [Fred] + [X] ) / [Allen]),

Mary = Table.AddColumn(Sysco, "Mary", each if [Fred] >= [Allen]

then 0

else [Allen] - [Fred]),

Impact = Table.AddColumn(Mary, "Impact", each ( List.Sum( Mary[Fred] ) / List.Sum( Mary[Allen] ) )

- ( ( List.Sum( Mary[Fred] ) + [Mary] )

/ List.Sum( Mary[Allen] ) )),

Bob = Table.AddColumn(Impact, "Bob", each if ( [Fred] + [X] ) >= [Allen]

then 0

else [Allen] - [Fred] - [X]),

Index = Table.AddIndexColumn(Bob, "Index", 1, 1),

WHB = Table.AddColumn(Index, "WHB", each let

CurrentRow = [Index]

,Base =

( List.Sum( Index[Fred] )

/ List.Sum( Index[Allen] ) )

,SumImpact =

List.Sum(

Table.Column(

Table.SelectRows( Index

, each [Index] <= CurrentRow )

,"Impact" ) )

,WHB = Base - SumImpact

in

WHB),

#"Removed Columns" = Table.RemoveColumns(WHB,{"Index"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Removed Columns",{{"Impact", Currency.Type}, {"WHB", type number}, {"Mary", Int64.Type}, {"Sysco", Int64.Type}, {"Steve", Int64.Type}, {"X", Int64.Type}, {"Fred", Int64.Type}, {"Allen", Int64.Type}, {"Product", Int64.Type}, {"Bob", Int64.Type}})

in

#"Changed Type1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'd then consider creating separate tables to hold aggregate/ calculated data and link in the model

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 113 | |

| 97 | |

| 79 | |

| 73 | |

| 56 |

| User | Count |

|---|---|

| 145 | |

| 105 | |

| 104 | |

| 90 | |

| 63 |