- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Convert transactions to balance

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Convert transactions to balance

Hello, Comunity.

Help me please 🙂

I have a transaction table and I can't find the way to calculate stock for certain date.

Example data:

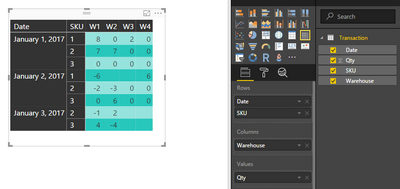

In result, it should be certain quantity for each wharehouse for each SKU for each date.

I would appreciate any help with Idea and suggestions.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I'd say the measure is the way to go here. It should work as desired in the chart if you take the date-field from your date-table and not from the transactions-table.

Link to file: https://www.dropbox.com/s/dc8budcqd68ogz4/PBI_CumTotalAllDates.pbix?dl=0

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But i need to use the numbers in the formulas...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What formula? Could you please describe your expectation? So I could figure out possible approach.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For example, build a diagram, that describes the dynamic of stock of certain sku in certain warehouse. I need to have all this data in a table, not only on visualization.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Cool,

Please provide the formula what u tried and let us know from that formula what u trying get.

That will help us achieve your goal my dear friend.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

An advanced Power Query solution below, creating a record for each possible combination SKU/Warehouse/Date with quantities and balances.

It is assumed that your data has unique SKU/Warehouse/Date combinations (i.e. max 1 transaction per SKU / Warehouse / Date), otherwise a grouping step must be added.

If your data set is large, you should expect quite some runtime, but I already managed to reduce runtime by adding helper columns PrevSKU and PrevWarehouse with the values from the previous rows: Power Query is much faster when values on 1 row can be compared with each other, rather than values on different rows,

let

Source = Excel.CurrentWorkbook(){[Name="Transactions"]}[Content],

Typed1 = Table.TransformColumnTypes(Source,{{"Date", type date}, {"Warehouse", type text}, {"SKU", Int64.Type}, {"Qty", Int64.Type}}),

UniqueSKUs = Table.Distinct(Typed1, {"SKU"}),

RemovedOthers = Table.SelectColumns(UniqueSKUs,{"SKU"}),

WarehouseLists = Table.AddColumn(RemovedOthers, "Warehouse", each List.Distinct(Table.Column(Typed1,"Warehouse"))),

ExpandedWarehouse = Table.ExpandListColumn(WarehouseLists, "Warehouse"),

DateLists = Table.AddColumn(ExpandedWarehouse, "Date", each List.Distinct(Table.Column(Typed1,"Date"))),

ExpandedDate = Table.ExpandListColumn(DateLists, "Date"),

Merged = Table.NestedJoin(ExpandedDate,{"SKU", "Warehouse", "Date"},Typed1,{"SKU", "Warehouse", "Date"},"NewColumn",JoinKind.LeftOuter),

ExpandedQty = Table.ExpandTableColumn(Merged, "NewColumn", {"Qty"}, {"Qty"}),

ReplacedNullsWithZero = Table.ReplaceValue(ExpandedQty,null,0,Replacer.ReplaceValue,{"Qty"}),

SortedSKUWHSDate = Table.Sort(ReplacedNullsWithZero,{"SKU", "Warehouse", "Date"}),

SKUWarehouse = Table.SelectColumns(SortedSKUWHSDate,{"SKU", "Warehouse"}),

PrevSKUWarehouse = Table.InsertRows(SKUWarehouse,0,{[SKU = null,Warehouse = null]}),

AddedPrevSKUWarehouse = Table.FromColumns({Table.ToRecords(SortedSKUWHSDate),Table.ToRecords(PrevSKUWarehouse)}),

Expanded1 = Table.ExpandRecordColumn(AddedPrevSKUWarehouse, "Column1", {"SKU", "Warehouse", "Date", "Qty"}, {"SKU", "Warehouse", "Date", "Qty"}),

Expanded2 = Table.ExpandRecordColumn(Expanded1, "Column2", {"SKU", "Warehouse"}, {"Column2.SKU", "Column2.Warehouse"}),

RenamedColumns1 = Table.RenameColumns(Expanded2,{{"Column2.SKU", "PrevSKU"}, {"Column2.Warehouse", "PrevWarehouse"}}),

RemovedBottomRow = Table.Buffer(Table.RemoveLastN(RenamedColumns1,1)),

Balance = List.Generate(

() => [Counter = 0, Balance = RemovedBottomRow[Qty]{0}],

each [Counter] < Table.RowCount(RemovedBottomRow),

each [Counter = [Counter] + 1, Balance = if RemovedBottomRow[SKU]{Counter} = RemovedBottomRow[PrevSKU]{Counter} and

RemovedBottomRow[Warehouse]{Counter} = RemovedBottomRow[PrevWarehouse]{Counter}

then [Balance] + RemovedBottomRow[Qty]{Counter}

else RemovedBottomRow[Qty]{Counter}],

each [Balance]),

AddedBalance = Table.FromColumns({Table.ToRecords(RemovedBottomRow),Balance}),

Expanded3 = Table.ExpandRecordColumn(AddedBalance, "Column1", {"SKU", "Warehouse", "Date", "Qty", "PrevSKU", "PrevWarehouse"}, {"SKU", "Warehouse", "Date", "Qty", "PrevSKU", "PrevWarehouse"}),

RenamedColumns2 = Table.RenameColumns(Expanded3,{{"Column2", "Balance"}}),

RemovedColumns = Table.RemoveColumns(RenamedColumns2,{"PrevSKU", "PrevWarehouse"}),

Typed2 = Table.TransformColumnTypes(RemovedColumns,{{"Warehouse", type text}, {"Date", type date}, {"Balance", Int64.Type}})

in

Typed2

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @MarcelBeug,

that's an interesting approach. If you still have the test-environment with the large datasets, I'd be interested how the performance compares to this function:

(SourceTable as table, GroupColumns as list) =>

let

Source = SourceTable,

ChgType = Table.TransformColumnTypes(Source,{{"Date", type date}}),

Partitions = Table.Buffer(Table.Group(ChgType, GroupColumns , {{"Partition", each _}})),

AddAllDates = Table.AddColumn(Partitions, "Dates", each List.Transform({Number.From(List.Min(ChgType[Date]))..Number.From(List.Max(ChgType[Date]))}, each Date.From(_))),

ExpandDates = Table.ExpandListColumn(AddAllDates, "Dates"),

AddRT = Table.AddColumn(ExpandDates, "RunningTotal", (Earlier) => List.Sum(Table.SelectRows(Earlier[Partition], each [Date]<=Earlier[Dates])[Qty])),

AddDaily = Table.AddColumn(AddRT,"Daily", (Earlier) => List.Sum(Table.SelectRows(Earlier[Partition], each [Date]=Earlier[Dates])[Qty])),

Cleanup = Table.RemoveColumns(AddDaily,{"Partition"})

in

Cleanup

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ImkeF

Your approach is much faster indeed. I'll take a closer look this evening. On first sight the result doesn't inlcude all combinations SKU/Warehouse/Date as indicated by OP in the original question.

Otherwise I wonder if that would be really necessary.

Expect another update from me later today.

Thanks,

Marcel

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @tringuyenminh92 and @MarcelBeug,

I just remembered a trick I learned from Bill Szysz, that puts the performance here into a different dimension:

let

Source = TransactionTable,

ChgType = Table.TransformColumnTypes(Source,{{"Date", type date}}),

Sort = Table.Buffer(Table.Sort(ChgType,{{"SKU", Order.Ascending}, {"Warehouse", Order.Ascending}, {"Date", Order.Ascending}})),

Partitions = Table.Buffer(Table.Group(Sort, {"Warehouse", "SKU"}, {{"all", (Earlier) => Table.AddColumn(Table.AddIndexColumn(Earlier, "Index",1,1), "RT", each List.Sum(List.Range(Earlier[Qty],0,[Index])))}})),

#"Expanded all" = Table.ExpandTableColumn(Partitions, "all", {"Date", "Qty", "RT"}, {"Date", "Qty", "RT"})

in

#"Expanded all"

In the Partitions: Instead of filtering the table, one creates a list-range. I have absolutely no idea why this is so much faster, but it is 🙂

Pls find the workbook here: https://www.dropbox.com/s/02850h7yrqe1kz5/PBI_RunningTotal2_3.xlsx?dl=0

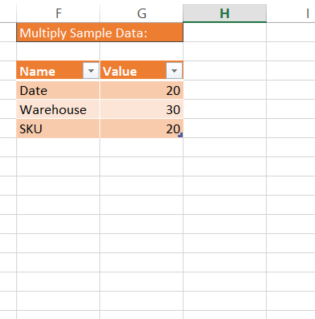

You can adjust the size of the sample data by increasing the numbers in the number in the orange table:

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ImkeF,

Oh, Both of your solutions and @MarcelBeug'solution are interesting to learn. I'm having concern about performance benchmark for your apporach and Marcel's approach, cause i usually think as your nested sum approach for balance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @tringuyenminh92,

yes, performance is an issue if you do it in M/the query-editor, therefore the suggestion for a measure.

But the Partition-step in my query helps if for whatever reason you want to do it in M 😉

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ImkeF,

The measure approach is clear to me. Could i have your sample pbix file that you are doing the converting with above M script, so I could quickly observe step by step to understand the role of Partition step? ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It looks as if you need a running total (or cumulative total). You should add a Date-Table and then use this for a measure in your data model:

Cumulative Quantity :=

CALCULATE (

SUM ( Transactions[Qty] ),

FILTER (

ALL ( 'Date'[Date] ),

'Date'[Date] <= MAX ( 'Date'[Date] )

)

)

Your desired report can then be created with a matrix .

For an explanation on how it works, pls check this: http://www.daxpatterns.com/cumulative-total/

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hallo all!

Yes, i need running total, that inlcudes all combinations SKU/Warehouse/Date.

And it's necessary, course i need to visualize a dynamic of stock for all days,

without that gaps, when there was no transactions:

Thanks for the M-code, that's absolutely new for me, and i'll try it:)

But maybe, there's a way to solve the problem thru the measure?

There's ADDMISSINGITEMS function - someone knows how's it works?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I'd say the measure is the way to go here. It should work as desired in the chart if you take the date-field from your date-table and not from the transactions-table.

Link to file: https://www.dropbox.com/s/dc8budcqd68ogz4/PBI_CumTotalAllDates.pbix?dl=0

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Although Imke's previous post was marked as the solution (and I fully agree), just because I would provide more information this evening: below my results of blowing up the transaction table for each combination SKU/WHS/Date applied to Imke's Transactions query in her PBIX-file.

The net result of blowing up the table is that you always have all warehouses and all SKU's in your slicers.

Compared to a previous post I improved performance by creating separate lists for the unique values and by using "combine tables" instead of "merge tables" (and then remove duplicates).

So just for the record (and educational purpose ![]() 😞

😞

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("fdDBDcAgCAXQXThLI2C0m/Rg3H+NQtSTYOL38vLB2DtkevRwpgYJPtLL8sJIauwZ1olHkTUtKJohTxQXKUDRFGcjXzZuQ3GGbuSgaAtr0DND7zWyPscbWpbp0PED", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Date = _t, Warehouse = _t, SKU = _t, Qty = _t]),

Typed1 = Table.TransformColumnTypes(Source,{{"Date", type date}, {"Warehouse", type text}, {"SKU", Int64.Type}, {"Qty", Int64.Type}}),

UniqueSKUs = Table.Distinct(Typed1, {"SKU"}),

RemovedOthers = Table.SelectColumns(UniqueSKUs,{"SKU"}),

AddedZeroQty = Table.AddColumn(RemovedOthers, "Qty", each 0, Int64.Type),

UniqueWHSs = List.Buffer(List.Distinct(Table.Column(Typed1,"Warehouse"))),

WarehouseLists = Table.AddColumn(AddedZeroQty, "Warehouse", each UniqueWHSs, type {text}),

ExpandedWarehouse = Table.Buffer(Table.ExpandListColumn(WarehouseLists, "Warehouse")),

UniqueDates = List.Buffer(List.Distinct(Table.Column(Typed1,"Date"))),

DateLists = Table.AddColumn(ExpandedWarehouse, "Date", each UniqueDates, type {date}),

ExpandedDate = Table.Buffer(Table.ExpandListColumn(DateLists, "Date")),

CombinedTables = Table.Combine({Typed1, ExpandedDate}),

RemovedDuplicates = Table.Distinct(CombinedTables, {"Date", "Warehouse", "SKU"})

in

RemovedDuplicates

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @MarcelBeug,

yes, this is very nice code. Much faster than my attempts with Joins. So my M-winner of the day is this combined code :

let

Source = TransactionTable,

Typed1 = Table.TransformColumnTypes(Source,{{"Date", type date}, {"Warehouse", type text}, {"SKU", Int64.Type}, {"Qty", Int64.Type}}),

UniqueSKUs = Table.Distinct(Typed1, {"SKU"}),

RemovedOthers = Table.SelectColumns(UniqueSKUs,{"SKU"}),

AddedZeroQty = Table.AddColumn(RemovedOthers, "Qty", each 0, Int64.Type),

UniqueWHSs = List.Buffer(List.Distinct(Table.Column(Typed1,"Warehouse"))),

WarehouseLists = Table.AddColumn(AddedZeroQty, "Warehouse", each UniqueWHSs, type {text}),

ExpandedWarehouse = Table.Buffer(Table.ExpandListColumn(WarehouseLists, "Warehouse")),

UniqueDates = List.Buffer(List.Distinct(Table.Column(Typed1,"Date"))),

DateLists = Table.AddColumn(ExpandedWarehouse, "Date", each UniqueDates, type {date}),

ExpandedDate = Table.Buffer(Table.ExpandListColumn(DateLists, "Date")),

CombinedTables = Table.Combine({Typed1, ExpandedDate}),

RemovedDuplicates = Table.Distinct(CombinedTables, {"Date", "Warehouse", "SKU"}),

ChgType = Table.TransformColumnTypes(RemovedDuplicates ,{{"Date", type date}}),

Sort = Table.Buffer(Table.Sort(ChgType,{{"SKU", Order.Ascending}, {"Warehouse", Order.Ascending}, {"Date", Order.Ascending}})),

Partitions = Table.Buffer(Table.Group(Sort, {"Warehouse", "SKU"}, {{"all", (Earlier) => Table.AddColumn(Table.AddIndexColumn(Earlier, "Index",1,1), "RT", each List.Sum(List.Range(Earlier[Qty],0,[Index])))}})),

ExpandAll = Table.ExpandTableColumn(Partitions, "all", {"Date", "Qty", "RT"}, {"Date", "Qty", "RT"})

in

ExpandAllBetter together 🙂

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thak you!

it was exacly my mistake!

Tell me please, how did you include the data in .pbix file?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Tiolan,

this is a very common error. One should always hide the fact-table's date columns from view. Or better: Hide the fact-tables completely. (And put your measures into "blank-measure-only"-tables).

You can use the Enter-data-feature to copy-paste tables from Excel or wherever: http://powerbi.tips/2016/04/manually-enter-data/

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Tiolan,

You could use Matrix control (but remember to fill in the transaction that has 0 quantity)

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 110 | |

| 95 | |

| 76 | |

| 65 | |

| 51 |

| User | Count |

|---|---|

| 146 | |

| 109 | |

| 106 | |

| 88 | |

| 61 |