- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Consuming only updated records in Power query

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Consuming only updated records in Power query

Hey Guys,

I need to put a logic in Power query to consume only updated records from source. Details are:

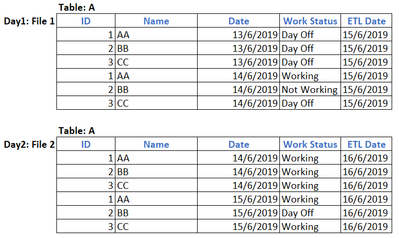

I am using Azure blob storage as a source to my Power BI report. Data in blob is coming in form of files. Like x files for Table A. Every time when the file is generated in blob it has last x days of data in it. I only need to ingest the latest record for ID column based on ETL date to avoid duplicates.

As shown for Day1 and Day2, I am getting respective files for Table A with last two days of data.

Following should be the records coming in Power BI Model for Table A for these two days when refresh is done on Day 2:

Thanks.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Mann

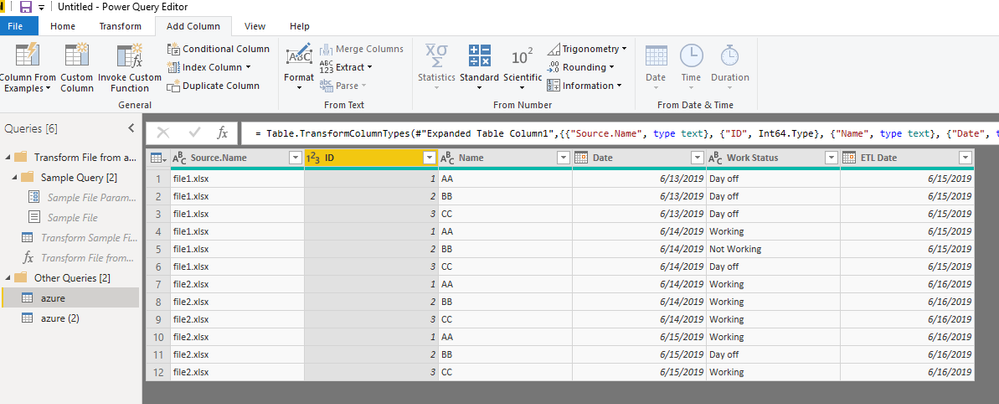

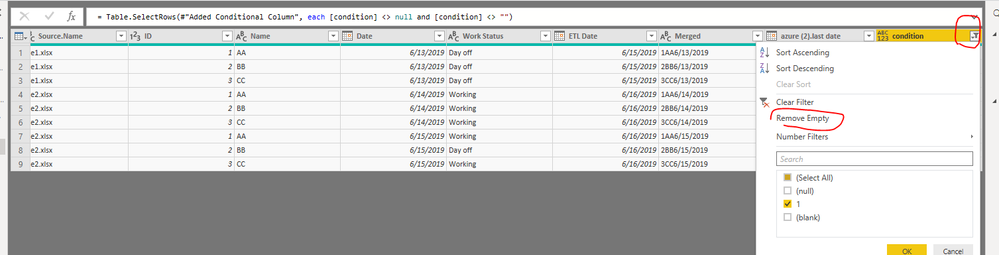

After refreshing, two files append in one table.

Ctrl+click on "ID","Name","Date", select Add Column->merge column

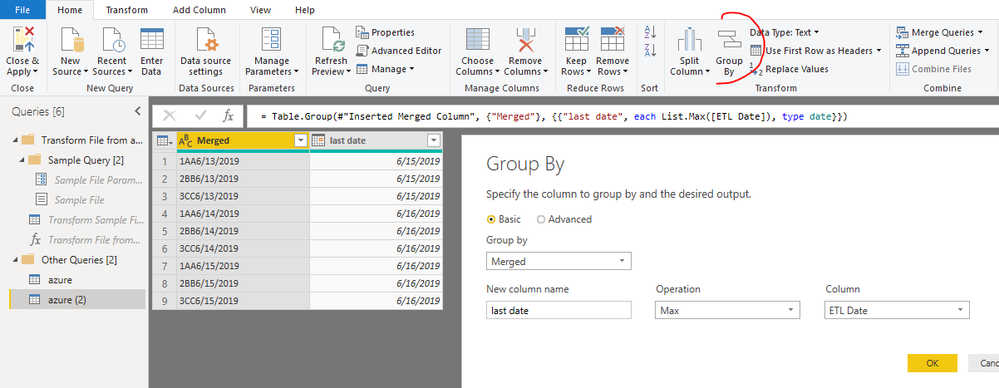

Then make a copy of this query->azure (2)

In "azure (2)",

Group by

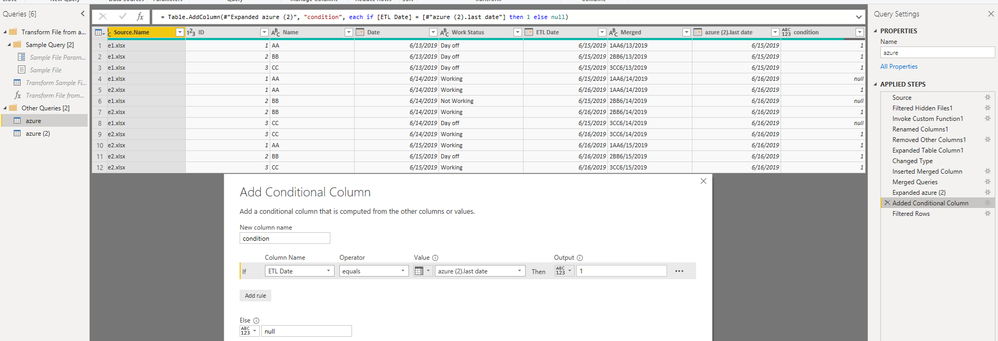

In "azure", merge queries from "azure(2)" based on "Merged" column,

expand "last date" column,

Add a condition column

then filter "condition" column to remove blank.

Best Regards

Maggie

Community Support Team _ Maggie Li

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Maggie,

Thanks for the solution. This is great! Using this solution results into creating duplicate queries and we are dealling with huge volume of data here so I did following steps to manage it in one query:

#"Invoke Custom Function1" = Table.AddColumn(#"Removed Other Columns", "Transform File from Table A", each #"Transform File from Table A"([Content])),

#"Removed Other Columns1" = Table.SelectColumns(#"Invoke Custom Function1", {"Transform File from Table A"}),

#"Expanded Table Column1" = Table.ExpandTableColumn(#"Removed Other Columns1", "Transform File from Table A", Table.ColumnNames(#"Transform File from Table A"(#"Sample File"))),

#"Changed Type" = Table.TransformColumnTypes(#"Expanded Table Column1",{{"ID", Int64.Type}, {"Name", type text}, {"Date", type text}, {"Work Status", type text}, {"ETL Date", type text}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID", "Date"}, {{"All Data", each _, type table [ID=number, Name=text, Date=text, Work Status=text, ETL Date=text]}, {"Max Date", each List.Max([ETL Date]), type text}}),

#"Expanded All Data" = Table.ExpandTableColumn(#"Grouped Rows", "All Data", {"Name", "Work Status", "ETL Date"}, {"All Data.Name", "All Data.Work Status", "All Data.ETL Date"}),

#"Filtered Rows1" = Table.SelectRows(#"Expanded All Data", each [All Data.ETL Date] = [Max Date]),

#"Renamed Columns" = Table.RenameColumns(#"Filtered Rows1",{{"All Data.Name", "Name"}, {"All Data.Work Status", "Work Status"}, {"All Data.ETL Date", "ETL Date"}})I am grouping the needed columns and then expanding the data. After this I am filtering it with Max Date variable.

This also works as expected.

Do you know which out of these two solution is best in terms of performance?

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Mann

After refreshing, two files append in one table.

Ctrl+click on "ID","Name","Date", select Add Column->merge column

Then make a copy of this query->azure (2)

In "azure (2)",

Group by

In "azure", merge queries from "azure(2)" based on "Merged" column,

expand "last date" column,

Add a condition column

then filter "condition" column to remove blank.

Best Regards

Maggie

Community Support Team _ Maggie Li

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is awesome Maggie, this is exactly what I was looking for. 🙂

Regards

David

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Maggie,

Thanks for the solution. This is great! Using this solution results into creating duplicate queries and we are dealling with huge volume of data here so I did following steps to manage it in one query:

#"Invoke Custom Function1" = Table.AddColumn(#"Removed Other Columns", "Transform File from Table A", each #"Transform File from Table A"([Content])),

#"Removed Other Columns1" = Table.SelectColumns(#"Invoke Custom Function1", {"Transform File from Table A"}),

#"Expanded Table Column1" = Table.ExpandTableColumn(#"Removed Other Columns1", "Transform File from Table A", Table.ColumnNames(#"Transform File from Table A"(#"Sample File"))),

#"Changed Type" = Table.TransformColumnTypes(#"Expanded Table Column1",{{"ID", Int64.Type}, {"Name", type text}, {"Date", type text}, {"Work Status", type text}, {"ETL Date", type text}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID", "Date"}, {{"All Data", each _, type table [ID=number, Name=text, Date=text, Work Status=text, ETL Date=text]}, {"Max Date", each List.Max([ETL Date]), type text}}),

#"Expanded All Data" = Table.ExpandTableColumn(#"Grouped Rows", "All Data", {"Name", "Work Status", "ETL Date"}, {"All Data.Name", "All Data.Work Status", "All Data.ETL Date"}),

#"Filtered Rows1" = Table.SelectRows(#"Expanded All Data", each [All Data.ETL Date] = [Max Date]),

#"Renamed Columns" = Table.RenameColumns(#"Filtered Rows1",{{"All Data.Name", "Name"}, {"All Data.Work Status", "Work Status"}, {"All Data.ETL Date", "ETL Date"}})I am grouping the needed columns and then expanding the data. After this I am filtering it with Max Date variable.

This also works as expected.

Do you know which out of these two solution is best in terms of performance?

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Mann

I think your solution may be better for performance.

For my solution, there are duplicate queries.

You could click on the query, uncheck "enable load", so that this query will not be added in the data model.

It may improve the performance.

There are some tips to speed the performance in Power Query

Performance tip for List.Generate (1): Buffer your tables in Power BI and Power Query

Table.Buffer for cashing intermediate query results or how workaround Unnecessary Queries Issue

Best Regards

Maggie

Community Support Team _ Maggie Li

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Mann

Before, your data source has "file1" (Table A),

Now a new file "file2" (Table B) is added to your data source,

You click on "refresh" button from Power BI, but only want the updated data like the last screenshot you post here.

Right?

Best Regards

Maggie

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

These two files are for same Table A. Process is: On Day1 File 1 is present in Blob storage and then Power Bi is refreshed. Then On Day2 File 1 and File 2 both are present and then Power BI is refreshed. So files will keep adding in the source blob container everyday.

Requirement is to consume all files present in source whenever Power Bi is refreshed. Since some updates are coming in the files. Power BI should load only the updates as per ETL date as I have shown.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 107 | |

| 93 | |

| 77 | |

| 65 | |

| 53 |

| User | Count |

|---|---|

| 147 | |

| 106 | |

| 104 | |

| 87 | |

| 61 |