- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Collecting data (not visual) outputs from Pyth...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Collecting data (not visual) outputs from Python

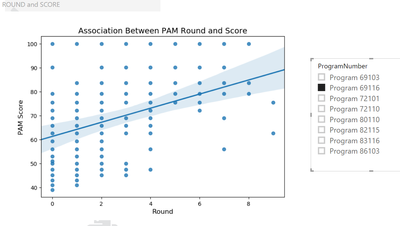

I've been moving some basic Python visuals into Power BI which is amazing with the slicers:

Now I'm trying to add in some statistical analysis, and pass data / output back to the canvas. Here's a simple Pearson example:

# The following code to create a dataframe and remove duplicated rows is always executed and acts as a preamble for your script:

# dataset = pandas.DataFrame(ROUND, SCORE)

# dataset = dataset.drop_duplicates()

# Paste or type your script code here:

import scipy.stats

print('Pearson Correlation:',scipy.stats.pearsonr(dataset['ROUND'], dataset['SCORE']))Normally in Spyder the Print command outputs to the iPython console. However in PBI it fails with the error "No image was created. The Python code didn't result in the creation of any visuals."

Is there a way to output text from the Python script to the PBI visual?

Alternatively (and better), can I add the outputs from the Python script to the PBI data model? (I've read a litte about the REST API, but wondering if there is a more direct way).

Thanks.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sfmike99

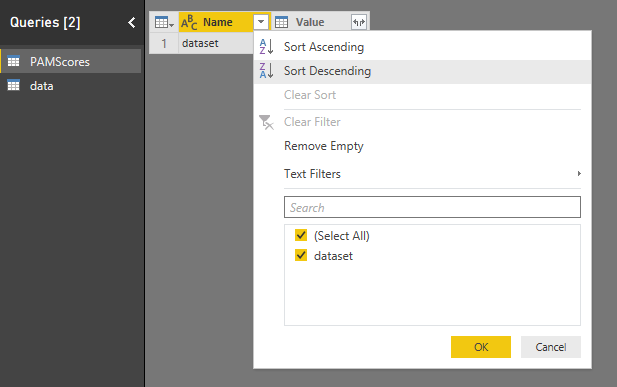

when you open a Query in the query editor you can choose Python script in the Transform tab (on the far right).

The DataFrame passed to Python is named 'dataset' and it is the table of the preceding query step. You can apply transofmrations to this DataFrame within Python by inputting the code and after you click ok you will see different DataFrames available for expansion, normally here you'd expand only the dataframe you actually want to return. Let me know if you need me to post pictures which could make it more clear

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@sfmike99 yes all the Drataframes should be visible after the script is run. You should have as many rows as dataframes created by your code. Normally then you'd filter by just the one you'd want to expand and then expand it

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sfmike99 ,

have you already tried to run the Python script from within the Power Query window?

That is where a Pandas DataFrame can be returned

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Aha that makes sense. My first day trying this...

Can you point me to any examples of the syntax? Lots for visuals but can't find much about passing data / dataframes back and forth in Power Query. For example:

import pandas

dataset = pandas.DataFrame(ROUND, SCORE)

Fails with the message "NameError: name 'ROUND' is not defined" even though that column is in an existing table.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sfmike99

when you open a Query in the query editor you can choose Python script in the Transform tab (on the far right).

The DataFrame passed to Python is named 'dataset' and it is the table of the preceding query step. You can apply transofmrations to this DataFrame within Python by inputting the code and after you click ok you will see different DataFrames available for expansion, normally here you'd expand only the dataframe you actually want to return. Let me know if you need me to post pictures which could make it more clear

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @LivioLanzo and @sfmike99 , this is exactly, what I'm looking for. I understand every step and it works, but now I need my new dataframes to become available outside the query editor.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You just need to name your new dataframe in the Python script, then expand that one when it is finished.

For instance, I have a table with a bunch of programs with survey rounds and scores. I create a new dataframe based on unique program names, then compute the correlation between round and score for each program. (This is a perfect use case for Python since Power BI has very limited stats capabilities).

# 'dataset' holds the input data for this script

import scipy.stats

Results = dataset[['PROGRAM']].drop_duplicates()

Results['INDEX'] = Results['PROGRAM']

Results.set_index('INDEX', inplace=True)

for idx in Results.index:

MeasureX = dataset['ROUND'].loc[dataset['PROGRAM'] == idx]

MeasureY = dataset['SCORE'].loc[dataset['PROGRAM'] == idx]

Output = scipy.stats.pearsonr(MeasureX, MeasureY)

Results.loc[idx, 'R Value'] = Output[0]

Results.loc[idx, 'P Value'] = Output[1]When that completes, I see both 'dataset' and 'Results' coming back - I simply click to expand 'Results' and it's available in my Power BI model like any other table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That works - thanks for helping me get my head around it!

Can I generate a new dataframe and pass it back to Power BI? For example, if I want to create a summary table of scores?

I can create a new table in the Python script without errors:

newtable = dataset[('ROUND')]

But I think I should see that table in the pulldown after the script executes right?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@sfmike99 yes all the Drataframes should be visible after the script is run. You should have as many rows as dataframes created by your code. Normally then you'd filter by just the one you'd want to expand and then expand it

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That works! I forgot the double brackets in the Python script: newtable = dataset[['ROUND']]

Way cool...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@sfmike99 no problem!

You can also pass to Python more than one dataframes (for instance the result of other Queries). In fact the Power Query function used to run the Python script has this synthax:

Python.Execute(script as Test, dataframes)

let's say you have two queries named Query1 and Query2 which you both need in Python, you would do:

Python.Execute('.. your python code ..', [df1=Query1, df2=Query2])

Now in Python the two dataframes are knows as df1 and df2

You can also omit the last argument, dataframes, this can be useful if you want to fetch data from the internet for instance:

Source = Python.Execute("import pandas as pd

import pandas_datareader as stock_reader

df1 = pd.DataFrame(stock_reader.get_data_yahoo('AMZN', start='2015-01-01')['Adj Close'])

df2 = pd.DataFrame(stock_reader.get_data_yahoo('MSFT', start='2015-01-01')['Adj Close'])

r = df1.merge(df2, how='inner', left_index=True, right_index=True, suffixes=('_AMZN', '_MSFT'))

r.reset_index(inplace=True)")

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

LivioLanzo Nice explanation, thank you!

Is there any way to merge the two datasets/steps of the query? Or the moment we create the python output (step2) the source data (step 1) disappear?

My python script uses attributes from step1 but the returned results don't include those attributes.

How can I refer to different tables or columns of tables of the PBI file as well? Is there this possibility?

Thanks,

Elria

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 108 | |

| 100 | |

| 78 | |

| 64 | |

| 58 |

| User | Count |

|---|---|

| 148 | |

| 111 | |

| 94 | |

| 84 | |

| 67 |