- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Change Column Headers To Names Dynamically Nested ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Change Column Headers To Names Dynamically Nested in Column_1 by Column Count

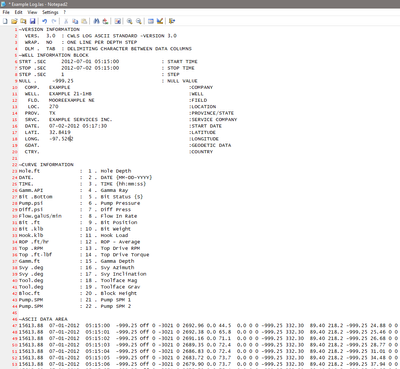

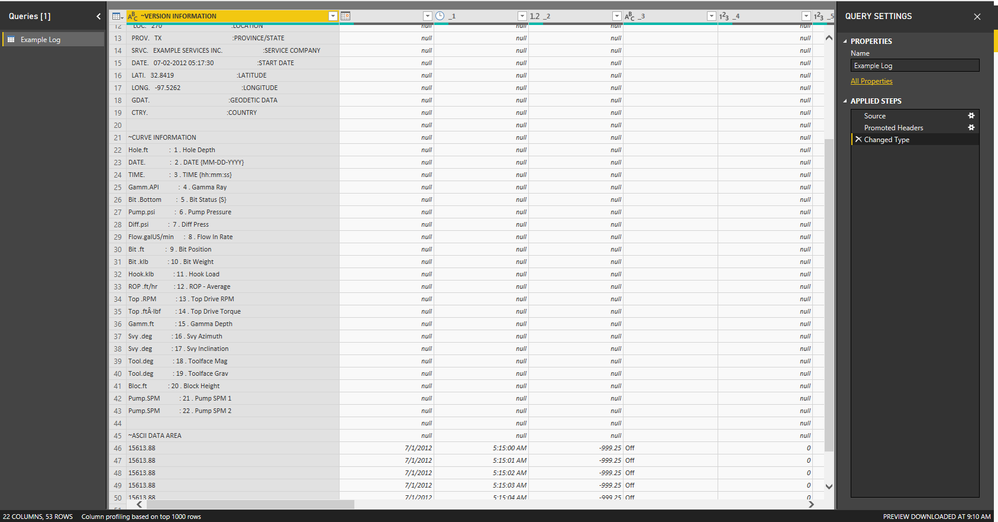

After the source is loaded into Power Bi - Power Query I tab delimit the column which will create 22 columns. The fields in column_1, under "~Curve Information", all the way to the blank field (Rows 23-44), need to become the new column headers for the information found in the rows under "ASCII Data Area".

The problem I face is that the count of columns and the count of associated names is dynamic. The good thing is that the two quantities will always be the same. If I pull the source this month it could be 22 columns but next month it could only be 18 records that were recorded.

Please provide any suggestions.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, so this works:

let

Source = Table.FromColumns({Lines.FromBinary(File.Contents("C:\Users\MyComputer\Desktop\Example Log.las"), null, null, 1252)}),

#"Added Custom" = Table.AddColumn(Source, "curveFinder", each if Text.Contains([Column1], "~CURVE INFORMATION") then "COLUMNS" else if Text.Contains([Column1], "~ASCII DATA AREA") then "DATA" else null),

#"Filled Down" = Table.FillDown(#"Added Custom",{"curveFinder"}),

columnNames =

let

#"Filtered Rows" = Table.SelectRows(#"Filled Down", each ([curveFinder] = "COLUMNS")),

#"Removed Top Rows" = Table.Skip(#"Filtered Rows",1),

#"Removed Bottom Rows" = Table.RemoveLastN(#"Removed Top Rows",1),

#"Removed Other Columns" = Table.RemoveColumns(#"Removed Bottom Rows",{"curveFinder"}),

#"Extracted Text After Delimiter" = Table.TransformColumns(#"Removed Other Columns", {{"Column1", each Text.AfterDelimiter(_, " . "), type text}}),

#"Trimmed Text" = Table.TransformColumns(#"Extracted Text After Delimiter",{{"Column1", Text.Trim, type text}}),

#"Cleaned Text" = Table.TransformColumns(#"Trimmed Text",{{"Column1", Text.Clean, type text}}),

#"Replaced Value" = Table.ReplaceValue(#"Cleaned Text"," ","",Replacer.ReplaceText,{"Column1"}),

Custom1 = #"Replaced Value"[Column1]

in

Custom1,

data =

let

#"Filtered Rows" = Table.SelectRows(#"Filled Down", each ([curveFinder] = "DATA")),

#"Removed Top Rows" = Table.Skip(#"Filtered Rows",1),

#"Removed Columns" = Table.RemoveColumns(#"Removed Top Rows",{"curveFinder"}),

#"Split Column by Delimiter" = Table.SplitColumn(#"Removed Columns", "Column1", Splitter.SplitTextByDelimiter(" ", QuoteStyle.Csv), columnNames)

in

#"Split Column by Delimiter"

in

dataI've tested this on your log file, which contains 22 columns. I also incrementally deleted column names and corresponding data columns as part of my tests. It passed those tests.

While this may work now, it is very brittle and makes a TON of assumptions about the structure of your data in the future. Most important assumptions:

- Column names will always fall under '~CURVE INFORMATION' and the data will always fall under '~ASCII DATA AREA'

- There will always be a space between the last column name and '~ASCII DATA AREA'

- Column names will always be after this delimiter: ' . '

- The data values will always be delimited by a space (' ')

I hope this helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, so this works:

let

Source = Table.FromColumns({Lines.FromBinary(File.Contents("C:\Users\MyComputer\Desktop\Example Log.las"), null, null, 1252)}),

#"Added Custom" = Table.AddColumn(Source, "curveFinder", each if Text.Contains([Column1], "~CURVE INFORMATION") then "COLUMNS" else if Text.Contains([Column1], "~ASCII DATA AREA") then "DATA" else null),

#"Filled Down" = Table.FillDown(#"Added Custom",{"curveFinder"}),

columnNames =

let

#"Filtered Rows" = Table.SelectRows(#"Filled Down", each ([curveFinder] = "COLUMNS")),

#"Removed Top Rows" = Table.Skip(#"Filtered Rows",1),

#"Removed Bottom Rows" = Table.RemoveLastN(#"Removed Top Rows",1),

#"Removed Other Columns" = Table.RemoveColumns(#"Removed Bottom Rows",{"curveFinder"}),

#"Extracted Text After Delimiter" = Table.TransformColumns(#"Removed Other Columns", {{"Column1", each Text.AfterDelimiter(_, " . "), type text}}),

#"Trimmed Text" = Table.TransformColumns(#"Extracted Text After Delimiter",{{"Column1", Text.Trim, type text}}),

#"Cleaned Text" = Table.TransformColumns(#"Trimmed Text",{{"Column1", Text.Clean, type text}}),

#"Replaced Value" = Table.ReplaceValue(#"Cleaned Text"," ","",Replacer.ReplaceText,{"Column1"}),

Custom1 = #"Replaced Value"[Column1]

in

Custom1,

data =

let

#"Filtered Rows" = Table.SelectRows(#"Filled Down", each ([curveFinder] = "DATA")),

#"Removed Top Rows" = Table.Skip(#"Filtered Rows",1),

#"Removed Columns" = Table.RemoveColumns(#"Removed Top Rows",{"curveFinder"}),

#"Split Column by Delimiter" = Table.SplitColumn(#"Removed Columns", "Column1", Splitter.SplitTextByDelimiter(" ", QuoteStyle.Csv), columnNames)

in

#"Split Column by Delimiter"

in

dataI've tested this on your log file, which contains 22 columns. I also incrementally deleted column names and corresponding data columns as part of my tests. It passed those tests.

While this may work now, it is very brittle and makes a TON of assumptions about the structure of your data in the future. Most important assumptions:

- Column names will always fall under '~CURVE INFORMATION' and the data will always fall under '~ASCII DATA AREA'

- There will always be a space between the last column name and '~ASCII DATA AREA'

- Column names will always be after this delimiter: ' . '

- The data values will always be delimited by a space (' ')

I hope this helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

Is the whole data in the Example Log.las your source data which is imported to Power BI?

If so, could you paste some example data here so i can use them to test?

Also, could you show what it look like after importing data into Power BI?

Best Regards

Maggie

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

let

Source = Csv.Document(File.Contents("C:\Users\MyComputer\Desktop\Example Log.las"),[Delimiter=" ", Columns=22, Encoding=1252, QuoteStyle=QuoteStyle.None]),

#"Promoted Headers" = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"~VERSION INFORMATION ", type text}, {"", type date}, {"_1", type time}, {"_2", type number}, {"_3", type text}, {"_4", Int64.Type}, {"_5", Int64.Type}, {"_6", Int64.Type}, {"_7", type number}, {"_8", Int64.Type}, {"_9", type number}, {"_10", Int64.Type}, {"_11", Int64.Type}, {"_12", Int64.Type}, {"_13", type number}, {"_14", type number}, {"_15", type number}, {"_16", type number}, {"_17", type number}, {"_18", type number}, {"_19", Int64.Type}, {"_20", Int64.Type}})

in

#"Changed Type"~VERSION INFORMATION

VERS. 3.0 : CWLS LOG ASCII STANDARD -VERSION 3.0

WRAP. NO : ONE LINE PER DEPTH STEP

DLM . TAB : DELIMITING CHARACTER BETWEEN DATA COLUMNS

~WELL INFORMATION BLOCK

STRT .SEC 2012-07-01 05:15:00 : START TIME

STOP .SEC 2012-07-02 05:15:00 : STOP TIME

STEP .SEC 1 : STEP

NULL . -999.25 : NULL VALUE

COMP. EXAMPLE :COMPANY

WELL. EXAMPLE 21-1HB :WELL

FLD. MOOREEXAMPLE NE :FIELD

LOC. 270 :LOCATION

PROV. TX :PROVINCE/STATE

SRVC. EXAMPLE SERVICES INC. :SERVICE COMPANY

DATE. 07-02-2012 05:17:30 :START DATE

LATI. 32.8419 :LATITUDE

LONG. -97.5262 :LONGITUDE

GDAT. :GEODETIC DATA

CTRY. :COUNTRY

~CURVE INFORMATION

Hole.ft : 1 . Hole Depth

DATE. : 2 . DATE {MM-DD-YYYY}

TIME. : 3 . TIME {hh:mm:ss}

Gamm.API : 4 . Gamma Ray

Bit .Bottom : 5 . Bit Status {S}

Pump.psi : 6 . Pump Pressure

Diff.psi : 7 . Diff Press

Flow.galUS/min : 8 . Flow In Rate

Bit .ft : 9 . Bit Position

Bit .klb : 10 . Bit Weight

Hook.klb : 11 . Hook Load

ROP .ft/hr : 12 . ROP - Average

Top .RPM : 13 . Top Drive RPM

Top .ft·lbf : 14 . Top Drive Torque

Gamm.ft : 15 . Gamma Depth

Svy .deg : 16 . Svy Azimuth

Svy .deg : 17 . Svy Inclination

Tool.deg : 18 . Toolface Mag

Tool.deg : 19 . Toolface Grav

Bloc.ft : 20 . Block Height

Pump.SPM : 21 . Pump SPM 1

Pump.SPM : 22 . Pump SPM 2

~ASCII DATA AREA

15613.88 07-01-2012 05:15:00 -999.25 Off 0 -3021 0 2692.96 0.0 44.5 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 24.88 0 0

15613.88 07-01-2012 05:15:01 -999.25 Off 0 -3021 0 2692.38 0.0 65.8 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 25.46 0 0

15613.88 07-01-2012 05:15:02 -999.25 Off 0 -3021 0 2691.16 0.0 71.1 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 26.68 0 0

15613.88 07-01-2012 05:15:03 -999.25 Off 0 -3021 0 2689.35 0.0 72.4 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 28.77 0 0

15613.88 07-01-2012 05:15:04 -999.25 Off 0 -3021 0 2686.83 0.0 72.4 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 31.01 0 0

15613.88 07-01-2012 05:15:05 -999.25 Off 0 -3021 0 2683.72 0.0 73.7 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 34.48 0 0

15613.88 07-01-2012 05:15:06 -999.25 Off 0 -3021 0 2679.90 0.0 73.7 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 37.94 0 0

15613.88 07-01-2012 05:15:07 -999.25 Off 0 -3021 0 2675.79 0.0 75.4 0.0 0 0 -999.25 332.30 89.40 218.2 -999.25 42.05 0 0

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 112 | |

| 100 | |

| 80 | |

| 64 | |

| 57 |

| User | Count |

|---|---|

| 145 | |

| 111 | |

| 92 | |

| 84 | |

| 66 |