- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Can you help me understand system requirements...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you help me understand system requirements and how to reduce memory needs?

I am currently using Power BI Desktop (September 2019) for my company and I usually have to export large amounts of data from multiple systems to CSV and then load it into my Power Query Editor. At this time, there is not a way for me to connect directly to the source systems.

Within Power Query Editor, I do a lot of data cleansing and manipulation, building of a unique key table to link the data, pivots, appending, etc. I spent a lot of time in Query Editor waiting for things to load so I can do the steps necessary to clean the data.

When I click "Close and Apply", it is not unusual to have data models that can take 1-2 hours to finish processing. I recently had a data model where I added an additional data file and it gave me an error saying that "there's not enough memory". So, the system was not able to process everything.

Therefore, I wanted to look at the following options:

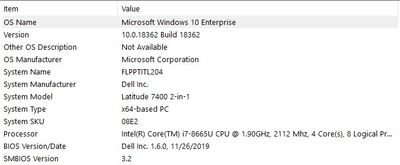

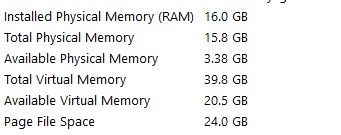

(1) Would a better computer help? I can ask my manager for a more powerful computer if I can justify the need for it. Right now, I am using Power BI Desktop (September 2019) because our company has not updated Power BI Server yet so I have to stay on that version of Power BI. I did read this article regarding system requirements but I am not very familiar with how the systems work. Below are my laptops specs. Would getting a better computer help much and if so, what do I need to specifically request?

(2) How can I better optimize my data so that it can process faster?

(2a) I have been trying to read more on this topic because initially, as a new developer, I would just pull in all of the data that I think I could possibly need. Now I am learning that I need to reduce the number of columns where possible and that it is not as much of an issue with the number of rows.

As a follow-up question to this, does that mean in query editor, I can pull in all of the columns (let's say 30 columns) and then remove 20 of the columns that are not as important within Query Editor. OR, do I need to reduce the file to ONLY the 10 columns I need before I even import the data into Query Editor?

(2b) Another step I have been taking is to use multiple PBIX files that feed into one another. For example, I just pulled in a huge payroll file that has all of the detail information (which I may need if questions are asked). I would then process all of that data in a PBIX file, summarize it to the key elements I need, and then EXPORT it into Excel. Then I would IMPORT that already processed data into a new PBIX file (along with other files) to reduce the processing requirement. Is that a normal practice or workaround?

When I used to do a lot of work with Access, I would sometimes use an Access database to initially clean and process the various data sources. Then I would use another Access DB to link to the other databases to pull the data together. So, I'm replicating that idea in Power BI.

Any advice that can be provided would be appreciated. If you have articles on optimizing the speed, I will study those. I have already done some research on that but it is not an area of expertise for me.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. yes, although 16 GB should give you comfortable performance you can see that it is not enough by the size of the swap file.

2. Merging and joining in Power Query is not just slow, it also messes up query folding. Probably not as important for you since your data source doesn't support folding, but worth mentioning nevertheless. Whereever possible keep the Power Query data sources separate, and use the Power BI part to join the tables into a meaningful data model

2a. Very much so. Keep your tables narrow. Length is not as important. Learn about cardinality though, and about normalization/denormalization.

2b. Parallel data sources are preferable to daisy-chained data sources.

If you haven't used DAX Studio yet - drop everything, install DAX Studio, learn how it works, and then go back to Power BI and learn about the Power Query Column Statistics tools too. These two tools will help you understand your data better and will help you make informed decisions on your process and data model design.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. yes, although 16 GB should give you comfortable performance you can see that it is not enough by the size of the swap file.

2. Merging and joining in Power Query is not just slow, it also messes up query folding. Probably not as important for you since your data source doesn't support folding, but worth mentioning nevertheless. Whereever possible keep the Power Query data sources separate, and use the Power BI part to join the tables into a meaningful data model

2a. Very much so. Keep your tables narrow. Length is not as important. Learn about cardinality though, and about normalization/denormalization.

2b. Parallel data sources are preferable to daisy-chained data sources.

If you haven't used DAX Studio yet - drop everything, install DAX Studio, learn how it works, and then go back to Power BI and learn about the Power Query Column Statistics tools too. These two tools will help you understand your data better and will help you make informed decisions on your process and data model design.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lbendlin Thank you very much for your detailed answer and support.

1. I will check with IT to see if I can get more RAM for my computer.

2. Query folding is a new concept to me. For anyone else reading this post, I found this resource helpful in understanding the basics of query folding. Thank you for explaining that. I will need to learn more but I think part of my problem is that I think I must be doing tons of query folding. Here's an example of a typical model for me:

I. I pull data from a system that users a NPI (provider) number as the person identifier along with some other data (such as wRVUs-that measures work) and a specific date.

II. I pull data from another system that does not contain an NPI but has an employee ID with payroll data that occurs every two weeks and sometimes overlaps into two months. NPI to EEID has a one to many relationship since one provider can be an employee with their SSN and they can also have another employee record as their business EIN. It also has each provider's pay for each contract that they work at.

III. I pull budgets from a financial system which are based on the month and Contract ID.

In order to link this all together, I have to assign the EEIDs to an NPI (using a separate mapping that I built out from multiple company data sources). I also have to assign every record from the three data sources to a YYYY-MM so I have to transform the data to get that field. Then there are other considerations like I have to find terminations using another file and pull those out.

So, as a result, I end up creating a unique key that is the NPI-ContractID-YYYY-MM so I can link all three data sources. But that requires a lot of data manipulation of all of the sources.

I did take this DAX course which was very basic but did not cover the items you suggest so I will look into that. I do have DAX studio so I am trying to figure out how to use it.

I am not sure if I understand parallel data sources versus daisy chain. I assume that the scenerio I described in my original post is daisy chain.

Can you help me understand what parallel might look like in the above scenario? I would like to know if there's a more efficient way to do the transformations.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The best option is to have an external system that does all the data model work for you (like an SSAS cube).

Second best option is to let Power BI's data model do the work for you, meaning that you only import the individual data sources and then link them in Power BI's visual part. That requires individual key links (which you may not have) and prefers star schema or snowflake schema of "dimension" and "fact" tables - which you may or may not be able to accomplish.

Worst option is to do data modeling in Power Query. That always backfires sooner or later.

btw, what you are doing as described in the original post is not query folding, but the exact opposite. Query folding means that Power Query can skip all prior transforms in a query by pushing out the entire query logic up to this point back to the upstream system.

Let's say you have a giant source table in SQL server, and need to fetch only a small subset of it in Power Query. Naturally what you would try is to use a custom query in the Source line. While intuitive, it is also "wrong" as this is the moment where query folding is blocked. Instead, connect to your giant table as is, and then do the transforms in Power Query (filtering etc). When you right click on the generated Power Query steps you will see the "View Native Query" option available as long as query folding is still active.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lbendlin thanks very much for your help. That makes sense and I will look into some of your suggestions.

It sounds like modeling my data in SSAS first may be a better option (however, I do not know how to do that but I may be able to learn). I will also see if there's someone at my company that may be able to help me.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 114 | |

| 97 | |

| 86 | |

| 70 | |

| 62 |

| User | Count |

|---|---|

| 151 | |

| 120 | |

| 103 | |

| 87 | |

| 68 |