- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Calculating kWh based on datetime and kW

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculating kWh based on datetime and kW

Hi

I want to calculate kWh.

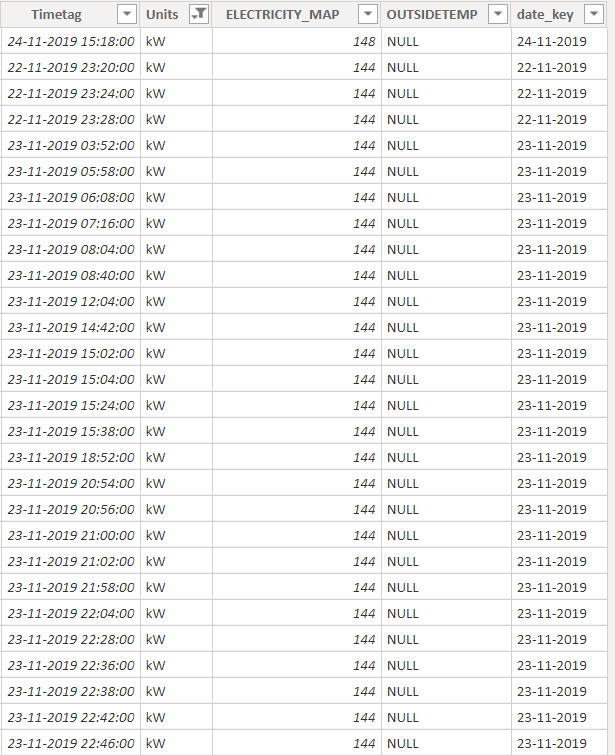

I have a table with time stamps and corresponding kW, and I want to convert it to kWh.

I can imagine I have to calculate some time duration in hours, but I don't know how to go about doing this so that I'm still able to filter on an store ID and a time period.

Do anyone have an idea how to do this?

Thank you very much.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This turned out to be much more challenging than expected. Power BI kept running out of memory even though it is running on 32 GB. I ended up presorting the data in SQL server (and adding the index row there)

SELECT

ROW_NUMBER() OVER(ORDER BY [Telemetry AssetID ]

,[Telemetry PointName ]

,[Telemetry Timetag ] asc) Row,

[Telemetry SiteID ] [SiteID]

,[Telemetry AssetID ] [AssetID]

,[Telemetry PointName ] [PointName]

,[Telemetry Timetag ] [Timetag]

,[Telemetry Units ] [Units]

,[Telemetry ELECTRICITY_MAP ] [ELECTRICITY_MAP]

,[Telemetry StoreID ] [StoreID]

,[Telemetry date_key ] [date_key]

FROM [Telemetry]

Then the calculated column can be written as such: (shown in two variations)

kWh =

var i = Telemetry[Row]

var t = Telemetry[Timetag]

var ap = Telemetry[AssetID] & Telemetry[PointName]

var f = filter(Telemetry,Telemetry[Row]=i-1)

var pt = SELECTCOLUMNS(f,"pt",Telemetry[Timetag])

var pv = SELECTCOLUMNS(f,"pv",Telemetry[ELECTRICITY_MAP])

var pap= SELECTCOLUMNS(f,"pap",Telemetry[AssetID] & Telemetry[PointName])

//var pt = maxx(f,Telemetry[Timetag])

//var pv = maxx(f,Telemetry[ELECTRICITY_MAP])

//var pap = maxx(f,Telemetry[AssetID] & Telemetry[PointName])

return if (ap=pap,divide(Telemetry[ELECTRICITY_MAP]+pv,120)*DATEDIFF(pt,t,MINUTE),0)

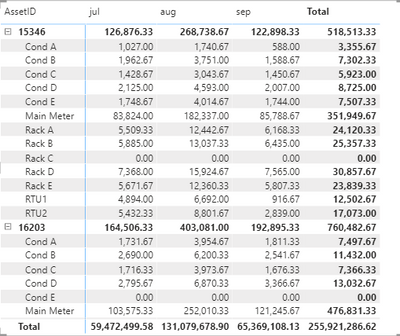

and the calculation yields the expected result.

Thank you for the challenge. I'm still baffled that Power BI struggles with such a seemingly small dataset (7.8M rows) but I guess there are a few cartesian products happening that explode the memory needs. Hope someone else can find a faster solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @lbendlin , i used your formula for the calculated column and it works just fine. In my case i had also to calculate from kW to kWh where the readings (timetags) are not evenly distributed (1:1 as in the example above). The problem is now, when i would like to create an incremental refresh, it seems calculated columns doesn't work with incremental refresh. How could i change the calculated column to a measure? Let's assume my table is also called "Telemetry", and i have two columns which are "Timestamp" and "Electricity Map".

I would appreciate your help!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't see a reason why a calculated column would not work with incremental refresh - do you have anything to reproduce that?

Please provide sanitized sample data that fully covers your issue. If you paste the data into a table in your post or use one of the file services it will be easier to work with. Please show the expected outcome.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your quick reply! When i activated the incremental refresh there was an error after the refresh on the visuals that the calculated column had no data in it. When I removed incremental refresh, it worked again. I think it's very similar to this case: Incremental Update Issue: Calculated Column Data D... - Microsoft Power BI Community

Here is a fraction of my dataset: https://www.transfernow.net/dl/20220208JpCf11jW

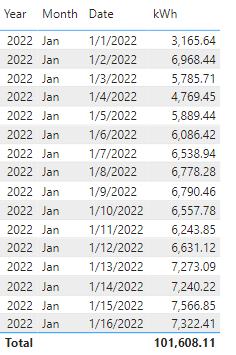

In my full dataset i have data from 2019 till today. What i would like to get kWh per Day, Month and Year.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll look at the data but I think might be a misunderstanding of how incremental refresh works. The post you link to seems to be based on the same misunderstanding.

Whenever you make structural (meta data) changes to an incremental refresh data source and then publish from Power BI Desktop to the workspace the following things will happen:

- All partitions are removed, and replaced by a single partition based on your upload (governed by the RangeStart and RangeEnd settings in Power Query, NOT by the incremental refresh settings in Power BI)

- If scheduled refresh is enabled for the dataset then a new refresh is triggered. Otherwise nothing will happen until you initiate a manual refresh

- upon this new refresh all partitions are recreated and recomputed based on the settings in Power BI, NOT on the Power Query settings.

- Any refresh request after that will execute based on the incremental refresh rules you indicated in Power BI.

This is the basic process. Advanced users will use XMLA queries to selectively refresh individual partitions, and use ALM toolkit to selectively modify meta data.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Alright, i will try it again with the incremental refresh settings - maybe i messed something up, this is a very new topic for me 😉

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is the calculated column that computes the integral. Your timestamp granularity is good enough so you don't have to worry about the day passage. Performance is still bad, so I'll try Power Query next.

kWh =

var ts = 'data (1)'[Timestamp]

var pts = CALCULATE(max('data (1)'[Timestamp]),'data (1)'[Timestamp]<ts)

var ptsw = CALCULATE(sum('data (1)'[kW]),'data (1)'[Timestamp]=pts)

return divide('data (1)'[kW]+ptsw,2,0)*24*(ts-pts)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i'm running out of memory while performing this calculated column. I added now an Index (Row) and tried it with your posted formula from above (slightly adapted) and this works very smooth.

kWh =

var i = 'data (1)'[Row]

var t = 'data (1)'[Timestamp]

var f = filter('data (1),'data (1)'[Row]=i-1)

var pt = SELECTCOLUMNS(f,"pt",'data (1)'[Timestamp])

var pv = SELECTCOLUMNS(f,"pv",'data (1)'[kW])

return(divide('data (1)'[kW]+pv,120)*DATEDIFF(pt,t,MINUTE))

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Your data has sub-minute granularity. I would be wary about using DATEDIFF.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@chthomama Here is a good solution that is accurate and performant:

Add index column in Power Query.

Add calculated column:

kWh =

var idx= 'data'[Index]-1

var pts = COALESCE(CALCULATE(max('data'[Timestamp]),All('data'),'data'[Index]=idx),'data'[Timestamp])

var ptsw = CALCULATE(sum('data'[kW]),All('data'),'data'[Index]=idx)

return ('data'[kW]+ptsw)*12*('data'[Timestamp]-pts)

see attached.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sounds like a typical integral calculus. Multiply the average of two adjacent data points by the duration between them, that will give you the amount of energy consumed during that period. Then aggregate it up to hourly level. It gets a little fuzzy because you may not be able to guarantee that a measurement is available exactly at the top of the hour but your data granularity seems good enough to fudge it.

If you want help with the implementation please provide the sample data in usable format.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Fantastic @lbendlin

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @lbendlin

I would very much like some help with the implementation. Seems like the skills needed are a bit over my head.

How would like the format of a data sample? CSV or a .pbix file?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Either is fine but if you already have the pbix started then that would be nicer.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great.

You have the pbix file here.

Let me know if you need to know anything else. Thanks in advance!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What type of granularity do you need? Is it sufficient to calculate the kWh per day, or do you need it more detailed? Your Calendar table only has Day level so I assume that's what we are going for?

By the way, CALENDARAUTO() already takes care of the MIN/MAX dates. You can simplify the DAX.

Calendar =

ADDCOLUMNS (

CALENDARAUTO( ),

"Year", FORMAT( [DATE],"yyyy"),

"Month", FORMAT ( [DATE], "MMM" ),

"Month Number", FORMAT( [DATE], "M" ),

"Weekday", FORMAT ( [DATE], "DDDD" ),

"Weekday Number", WEEKDAY( [DATE], 2 ),

"Quarter", "Q" & TRUNC ( ( MONTH ( [DATE] ) - 1 ) / 3 ) + 1,

"Week Number", WEEKNUM([DATE],2),

"Month Year", FORMAT([DATE],"MMM yy"),

"Month Year Number", FORMAT( [DATE],"yyyy")+FORMAT( [DATE], "M" ),

"date_key", FORMAT([DATE],"dd-mm-yyyy")

)- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is a first rough approach using a measure. While it technically works it has really bad performance.

kWh =

var tt = selectedvalue(Telemetry[Timetag])

var curr = CALCULATE(sum(Telemetry[ELECTRICITY_MAP]),allselected(Telemetry),Telemetry[Timetag]=tt)

var ttprev = CALCULATE(max(Telemetry[Timetag]),allselected(Telemetry),Telemetry[Timetag]<tt)

var prev = CALCULATE(sum(Telemetry[ELECTRICITY_MAP]),allselected(Telemetry),Telemetry[Timetag]=ttprev)

return divide(curr+prev,120)*DATEDIFF(ttprev,tt,MINUTE)

Thinking about this a bit more I think a measure is not really needed - a calculated column should do as well. And it might also help with the performance. What is your ultimate granularity level - is AssetID + PointName sufficient? If yes, can you please produce a new version of the pbix that sorts the telemetry table by AssetID, PointName and Timetag , and add an index column after that?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This turned out to be much more challenging than expected. Power BI kept running out of memory even though it is running on 32 GB. I ended up presorting the data in SQL server (and adding the index row there)

SELECT

ROW_NUMBER() OVER(ORDER BY [Telemetry AssetID ]

,[Telemetry PointName ]

,[Telemetry Timetag ] asc) Row,

[Telemetry SiteID ] [SiteID]

,[Telemetry AssetID ] [AssetID]

,[Telemetry PointName ] [PointName]

,[Telemetry Timetag ] [Timetag]

,[Telemetry Units ] [Units]

,[Telemetry ELECTRICITY_MAP ] [ELECTRICITY_MAP]

,[Telemetry StoreID ] [StoreID]

,[Telemetry date_key ] [date_key]

FROM [Telemetry]

Then the calculated column can be written as such: (shown in two variations)

kWh =

var i = Telemetry[Row]

var t = Telemetry[Timetag]

var ap = Telemetry[AssetID] & Telemetry[PointName]

var f = filter(Telemetry,Telemetry[Row]=i-1)

var pt = SELECTCOLUMNS(f,"pt",Telemetry[Timetag])

var pv = SELECTCOLUMNS(f,"pv",Telemetry[ELECTRICITY_MAP])

var pap= SELECTCOLUMNS(f,"pap",Telemetry[AssetID] & Telemetry[PointName])

//var pt = maxx(f,Telemetry[Timetag])

//var pv = maxx(f,Telemetry[ELECTRICITY_MAP])

//var pap = maxx(f,Telemetry[AssetID] & Telemetry[PointName])

return if (ap=pap,divide(Telemetry[ELECTRICITY_MAP]+pv,120)*DATEDIFF(pt,t,MINUTE),0)

and the calculation yields the expected result.

Thank you for the challenge. I'm still baffled that Power BI struggles with such a seemingly small dataset (7.8M rows) but I guess there are a few cartesian products happening that explode the memory needs. Hope someone else can find a faster solution.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 109 | |

| 99 | |

| 77 | |

| 66 | |

| 54 |

| User | Count |

|---|---|

| 144 | |

| 104 | |

| 102 | |

| 87 | |

| 64 |