- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Calculate the sum of Duplicate values from a ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculate the sum of Duplicate values from a Table

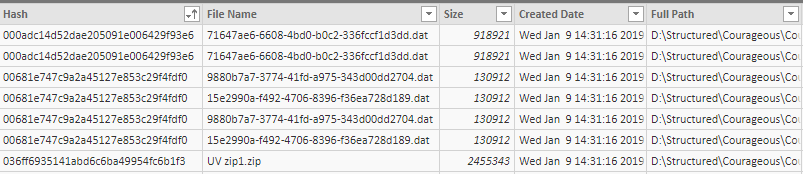

i have a table that has a hash entry for for each item in the table. where you have duplicate hash values i would like to sum the size column of only the duplicate value.

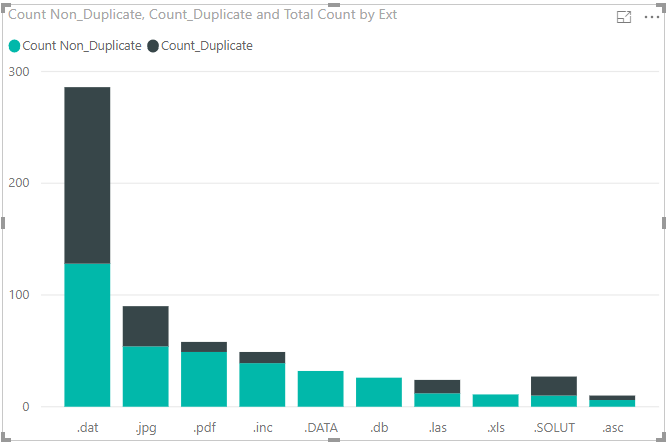

The aim is that i can have a clear split in a stacked column chart that shows the total size of the duplicate value and the total size of each distint size.(The total sum of the duplicate value and the distinct values should be equal to the total sum of the occurance of a particular hash entry).

i did something for the sum(see visual below). i just want something similar for the sum. i'm guessing it would require i create a measure that can sum the values of every distinct hash entry and also the sum of every duplicate entry. Just not sure how to go about do it. any help will be appreciated

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

I think the approach presented earlier could still be more versatile but we can certainly follow your logic for the count, with two measures.

The aggregate size of the files excluding the duplicates would be:

SizeWithoutDuplicates =

SUMX (

DISTINCT ( unstructured[Hash] ),

CALCULATE(DISTINCT(unstructured[Size]))

)

and then we can calculate the size from the duplicates using the previous measure:

SizeOfDuplicates = SUM ( unstructured[Size] ) - [SizeSizeWithoutDuplicates]

Does that make sense?

I'm also thinking, wouldn't it be better to build a table with no duplicates and work with that? Or are you actually interested in the info from the duplicates?

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

It's always better to show a sample of your data in text-tabular format in addition to (or instead of) a screen capture. Otherwise people trying to help cannot copy the data and work with it.

I'm curious. How did you do it for the count shown in the chart? I would think what you need for the Size column would follow the same logic as what you need for the count.

I would create a new calculated column in your table in which you mark the rows that are duplicated. Probably the best option is to show how many times the row appears in the table. You can then use the column to easily tell apart the duplicates from the rest for whatever you need, for instance the SUM of Size.

NewColumn =

CALCULATE (

COUNT ( Table1[Hash] ),

ALLEXCEPT (

Table1,

Table1[Hash]

)

)In actual fact, if the duplicates are at the row level (i.e. the full row), the following ought to be valid too:

NewColumn = CALCULATE( COUNT(Table1[Hash]) )

since context transition will result in a filter on every column with the values in the current row. This, however, would be unnecessary if the hash alone uniquely defines a row and less efficient than the previous approach. Plus it would possibly fail if you add any other column to the table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi AIB,

Sorry about that, i wasnt sure if there was any formatting for inserting tabular data here. I can forward the entire pbix file if this helps, but i'm also not how to do this here.(i've copied the file to onedrive, see link below)

To count only the duplicate values i had to get the count the distinct hash values in the rows and then subtracted it from the total rows in the table.

Count_Duplicate = CALCULATE(COUNTROWS(unstructured) -COUNTROWS(DISTINCT(unstructured[Hash])))

so in my Stacked bar chart visual i am showing a count of the unique rows and a count of the duplicate rows per extension. i now want to do the same for the Size column, just not sure how to go about it yet

Hash File Name Size Created Date Ext 000adc14d52dae205091e006429f93e6 71647ae6-6608-4bd0-b0c2-336fccf1d3dd.dat 918921 Wed Jan 9 14:31:16 2019 .dat 000adc14d52dae205091e006429f93e6 71647ae6-6608-4bd0-b0c2-336fccf1d3dd.dat 918921 Wed Jan 9 14:31:16 2019 .dat 00681e747c9a2a45127e853c29f4fdf0 15e2990a-f492-4706-8396-f36ea728d189.dat 130912 Wed Jan 9 14:31:16 2019 .dat 00681e747c9a2a45127e853c29f4fdf0 9880b7a7-3774-41fd-a975-343d00dd2704.dat 130912 Wed Jan 9 14:31:16 2019 .dat 00681e747c9a2a45127e853c29f4fdf0 15e2990a-f492-4706-8396-f36ea728d189.dat 130912 Wed Jan 9 14:31:16 2019 .dat 00681e747c9a2a45127e853c29f4fdf0 9880b7a7-3774-41fd-a975-343d00dd2704.dat 130912 Wed Jan 9 14:31:16 2019 .dat 036ff6935141abd6c6ba49954fc6b1f3 UV zip1.zip 2455343 Wed Jan 9 14:31:16 2019 .zip 03a7207db6b0975d67e9587c55838e0d fina2.prn 959110 Wed Jan 9 14:31:16 2019 .prn 041911c49ffe28152ffee31c7c2c128f Figure 1- sampling.pdf 979842 Wed Jan 9 14:31:16 2019 .pdf 044b8c7695490a8b2df3dc7a171c8e53 UV_5.JPG 813283 Wed Jan 9 14:31:16 2019 .JPG 057e3f7d4bf19a31170d2123577b6b61 Enc11.pdf 3690599 Wed Jan 9 14:31:16 2019 .pdf 05fa9166cd534684b7b8685db34b38a1 224e891f-ab20-4fdb-828c-0cd1fc5a0aed.dat 176718 Wed Jan 9 14:31:16 2019 .dat 05fa9166cd534684b7b8685db34b38a1 224e891f-ab20-4fdb-828c-0cd1fc5a0aed.dat 176718 Wed Jan 9 14:31:16 2019 .dat 0641070c3b2ab508e3830c5a1d3015c5 30-2a-5-aitdsi.las 9144351 Wed Jan 9 14:31:16 2019 .las 0641070c3b2ab508e3830c5a1d3015c5 30-2a-5-aitdsi.las 9144351 Wed Jan 9 14:31:16 2019 .las 068caea49131db5a5aad28e2af1600ce 82204c42-8d11-4a68-9936-ae96a23ae0ed.dat 13125 Wed Jan 9 14:31:16 2019 .dat 068caea49131db5a5aad28e2af1600ce 82204c42-8d11-4a68-9936-ae96a23ae0ed.dat 13125 Wed Jan 9 14:31:16 2019 .dat 08a24d94b5bdd80f2ba0b8aa9a02df2d Plate 03 WL.jpg 8268853 Wed Jan 9 14:31:16 2019 .jpg 08a24d94b5bdd80f2ba0b8aa9a02df2d Plate 03 WL.jpg 8268853 Wed Jan 9 14:31:16 2019 .jpg 08a96bf259ce9127eedeb8198388f2c9 Courageous_PVTsim_NIL.fdb 2232320 Wed Jan 9 14:31:16 2019 .fdb 08e7d5257b1f35efa27ef3f7dae958c7 Gocad work_Courageous July 2007.pdf 5640816 Wed Jan 9 14:31:16 2019 .pdf 09d2b8a2303fd689241033ed8bc50347 report_final_version.doc 1005056 Wed Jan 9 14:31:16 2019 .doc 0b251039dd19125721c2ef3fbdbdd9b9 Plate 05 WL.jpg 8489791 Wed Jan 9 14:31:16 2019 .jpg 0b251039dd19125721c2ef3fbdbdd9b9 Plate 05 WL.jpg 8489791 Wed Jan 9 14:31:16 2019 .jpg 0c9e9442d9ea5299b9cadddd5f91de43 Plate 04 UV.jpg 7153698 Wed Jan 9 14:31:16 2019 .jpg 0c9e9442d9ea5299b9cadddd5f91de43 Plate 04 UV.jpg 7153698 Wed Jan 9 14:31:16 2019 .jpg 0e9ff542758c2242c5b6bb9c447b462f 0b40e24b-9fac-4e7d-b274-232bff1ed916.dat 176718 Wed Jan 9 14:31:16 2019 .dat

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

I think the approach presented earlier could still be more versatile but we can certainly follow your logic for the count, with two measures.

The aggregate size of the files excluding the duplicates would be:

SizeWithoutDuplicates =

SUMX (

DISTINCT ( unstructured[Hash] ),

CALCULATE(DISTINCT(unstructured[Size]))

)

and then we can calculate the size from the duplicates using the previous measure:

SizeOfDuplicates = SUM ( unstructured[Size] ) - [SizeSizeWithoutDuplicates]

Does that make sense?

I'm also thinking, wouldn't it be better to build a table with no duplicates and work with that? Or are you actually interested in the info from the duplicates?

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks AIB,

this worked very well. i agree with you, creating a new table without duplicates is a good idea. Because its a cleanup job, i wanted to show how much data could be deleted and also highlight hotspots of areas where the value could be generated for cleanup purposes.

Thanks alot for your help

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 111 | |

| 100 | |

| 80 | |

| 64 | |

| 58 |

| User | Count |

|---|---|

| 146 | |

| 110 | |

| 93 | |

| 84 | |

| 67 |