- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Calculate the Count of the Mode Value for each...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculate the Count of the Mode Value for each SN Group

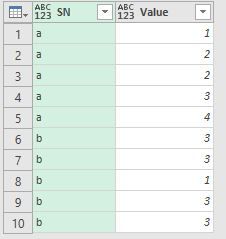

Source Data:

I was able to use the list functions for each grouped table to get:

Min

Max

Mode

Average

Count

#"Customized" = Table.Group(#"Changed Type", "SN", {{ "Min", each List.Min([Value]), type number},

{"Max", each List.Max([Value]), type number},{"Mode", each List.Mode([Value]), type number},

{"Average", each List.Average([Value]), type number},{"Count", each List.Count([Value]), type number}},

GroupKind.Local, Comparer.OrdinalIgnoreCase)

List.Mode returns the value used most for each table, but what is the proper way to add another column to show the count of the mode value occurances for each table?

For example, adding another column showing count of the mode values:

SN Mode_Value_Occurance

a 2

b 4

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Something like this should work (additional code in red):

#"Customized" = Table.Group(#"Changed Type", "SN", {{ "Min", each List.Min([Value]), type number},

{"Max", each List.Max([Value]), type number},{"Mode", each List.Mode([Value]), type number},

{"Average", each List.Average([Value]), type number},{"Count", each List.Count([Value]), type number},

{"Mode_Value_Occurrence",

each let mode = List.Mode([Value]) in List.Count(List.Select([Value], each _=mode)), Int64.Type}},

GroupKind.Local, Comparer.OrdinalIgnoreCase)

Admittedly, the Mode ends up being evaluated twice for each group, but if this performs well enough I wouldn't worry about it.

Regards,

Owen

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Something like this should work (additional code in red):

#"Customized" = Table.Group(#"Changed Type", "SN", {{ "Min", each List.Min([Value]), type number},

{"Max", each List.Max([Value]), type number},{"Mode", each List.Mode([Value]), type number},

{"Average", each List.Average([Value]), type number},{"Count", each List.Count([Value]), type number},

{"Mode_Value_Occurrence",

each let mode = List.Mode([Value]) in List.Count(List.Select([Value], each _=mode)), Int64.Type}},

GroupKind.Local, Comparer.OrdinalIgnoreCase)

Admittedly, the Mode ends up being evaluated twice for each group, but if this performs well enough I wouldn't worry about it.

Regards,

Owen

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @OwenAuger

It worked perfectly.

Help me undestand please.

There's no other way to define the mode value to count by looking at the "Mode" column?

That's the way I was trying to do it. Count the occurences in [Value} defined by the values in the "Mode" column.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You're welcome @roncruiser 🙂

One method I can think of to allow one aggregation to refer to another aggregation (e.g. Mode_Value_Occurrence to refer to Mode) is by creating a record rather than 6 separate aggregations, since the fields of a record can refer to each other. Then you just need another step to expand the record.

The code would look like this (paste into a blank query):

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WSlTSUTJUitWBsIywsIzhLBMwKwkuhsoyxC4bCwA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [SN = _t, Value = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SN", type text}, {"Value", type number}}),

Customized = Table.Group(#"Changed Type", "SN",{{ "Group", each [ Min = List.Min([Value]), Max = List.Max([Value]), Mode = List.Mode([Value]), Average = List.Average([Value]), Count = List.Count([Value]), Mode_Value_Occurrence = List.Count(List.Select([Value], each _=Mode))], type [Min=number, Max=number, Mode=number, Average=number, Count=Int64.Type, Mode_Value_Occurrence=Int64.Type]}}, GroupKind.Local, Comparer.OrdinalIgnoreCase),

#"Expanded Group" = Table.ExpandRecordColumn(Customized, "Group", {"Min", "Max", "Mode", "Average", "Count", "Mode_Value_Occurrence"}, {"Min", "Max", "Mode", "Average", "Count", "Mode_Value_Occurrence"})

in

#"Expanded Group"

Alternatively, it might be possible to a subsequent step using Table.AddColumn that takes the resulting Mode values and counts rows of the table just before the grouping step where Value matches the Mode. However this would be a bit awkward and would require effectively re-running the grouping. It would also be difficult since the Table.Group step has GroupKind.Local, meaning you would have separate groupings for each "run" of the same SN value.

Regards,

Owen

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What would be more efficient in terms of processing time? Creating a record or table. My dataset is 150K devices (SN's). Each device has many different parameter values. I used "Value" as a generic term, but each device has ~100 different "Value's". From each device i would be extracting the same statistics for many different "Value" parameters. Knowing which to use, a record or a table, can give me a time advantage loading into the datamodel. As it is, it takes a very long time.

Thanks for your help again ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My intuition (without testing) is that creating and expanding the record adds some overhead that wouldn't exist with the regular grouping. Would be interested to hear about relative performance on a trimmed-down version of your dataset.

A side note - I would suggest changing GroupKind.Local to GroupKind.Global, unless you specifically wanted local grouping. This should also help with performance.

One other question - is it necessary to pre-calculate these statistics in Power Query, or could you simply have DAX measures that are evaluated when required?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For each device (.xml file), there are 40 different tracked parameters. For each parameter, there are 128 different nodes. Each node value is recorded in the .xml file for every parameter.

40 parameters x 128 nodes = 5120 recorded values in each .xml file

For 100K devices = 512M recorded values.

The xml files are stored on our network. Not a server database like MySQL.

My first thought was to divide the 40 different parameters into 10 different excel workbooks holding 10 parameters each. That's based on my experience or inexperience. 🙂 Pre-calculating each tracked parameter per device seemed logical rather than trying to cram it all into one super large data model.

I'm open for suggestions to speed things up. Rather than having to reduce the sample size.

-------------------------

I'll change to GroupKin.Global to increase performance and keep grouped tables. Thank you again.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As an added note of information, all group bys and the statistics are caculated by a separate function. Maybe that adds more overall processing time. Is it better practice to have group bys and statistics done in the main query rather than going through a function to calculate the stats for each xml file?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interesting - is there any way you could post a small example of the actual files and existing queries you have set up?

I'm going to tag @ImkeF and @MarcelBeug who are more specialised in Power Query and may have some smarter ways of handling this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure. I'll prep a workbook to share with the group.

Notes: Each xml file is approximately 330KB in size.

As an experiement, late yesterday I kicked off a full load of each xml file at a reduced sample size of 50K devices (50K xml files) into the data model. I built a function that strips out ~1000 rows of useful data from each xml file that is 15 columns wide. Just basically a mass data import. Then write pertinent measures and calculations on top of that.

It doesn't seem efficient to do it this way as it's still loading this morning when I walked into work:

46 Million Rows

17hr: 28min later.

I'm still leaning towards breaking up the data into separate workbooks...

Any guidance would be appreciated. I'll post up the sample workbook soon... Thank you!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, will look into the sample workbook, as I don't fully understand the requirement yet.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 109 | |

| 99 | |

| 77 | |

| 66 | |

| 54 |

| User | Count |

|---|---|

| 144 | |

| 104 | |

| 102 | |

| 87 | |

| 64 |