
Average processing time per ticket state / Measure required

Hello everybody,

I have a problem creating a report that shows the average processing time for several tickets. The report is based on a processing table that records every state of each ticket together with the time spent in that state, so each incident appears several times (once per state), and the Ticket ID identifies each ticket uniquely.

The sample data is linked to this post.

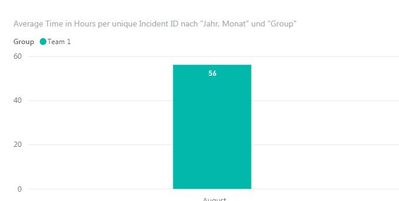

In this example there are 9 unique incidents in August 2018. I've already created a measure that calculates the average processing time per team for this month, based on the number of unique tickets in the month (9 unique tickets across 14 rows). In this case the result is 56.26 hours (roughly 506 total hours divided by 9, not by 14).
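The difference between dividing by rows and dividing by unique incidents can be sketched in Python. This illustrates the arithmetic only, not the DAX measure itself, and the rows below are hypothetical. With the post's August figures the same idea gives roughly 506 / 9 ≈ 56.2 hours instead of 506 / 14 ≈ 36.1 hours.

```python
# Hypothetical rows shaped like Sample_Data: (incident_id, state, hours).
# The IDs, states, and hours are invented; they are not the thread's data.
rows = [
    ("INC1", "Open", 10.0),
    ("INC1", "Waiting", 20.0),
    ("INC2", "Open", 6.0),
    ("INC3", "Open", 4.0),
    ("INC3", "Resolved", 20.0),
]


def average_per_row(rows):
    """Naive average: total hours divided by the number of rows."""
    return sum(hours for _, _, hours in rows) / len(rows)


def average_per_unique_incident(rows):
    """Total hours divided by the number of distinct incident IDs,
    the same idea as SUM(...) / DISTINCTCOUNT(...) in a measure."""
    total = sum(hours for _, _, hours in rows)
    unique_ids = {incident for incident, _, _ in rows}
    return total / len(unique_ids)


print(average_per_row(rows))              # 12.0 (60 hours / 5 rows)
print(average_per_unique_incident(rows))  # 20.0 (60 hours / 3 incidents)
```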

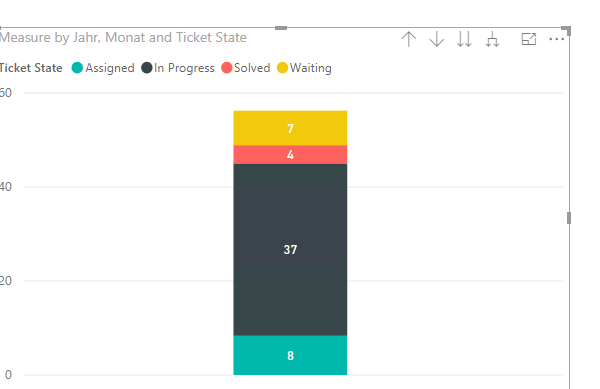

The issue is that I now want to calculate the average processing time per state for each UNIQUE incident.

For example, the 66 hours for the state "waiting" should be divided by the 9 unique incidents in August (7.33 hours).

The full dataset covers several months, so the report needs logic that divides by the number of unique incidents in each month. The result should be that the 56 hours are spread across the states, with the states adding up to 56 hours in total. (Currently the states add up to more than 150 hours, because the values of the individual states are summed.)
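Assuming each state's hours are divided by the month's unique incidents (as in the 66 / 9 example), the state values add up to the monthly average. The Python sketch below uses hypothetical data and contrasts this with dividing each state by its own distinct incidents, which is one plausible way state values end up adding to more than the monthly total; whether that is exactly what happens in the PBIX is not confirmed by the thread.

```python
from collections import defaultdict

# Hypothetical rows: (month, incident_id, state, hours). Invented data.
rows = [
    ("2018-08", "INC1", "Open", 4.0),
    ("2018-08", "INC1", "Waiting", 8.0),
    ("2018-08", "INC2", "Open", 4.0),
    ("2018-08", "INC3", "Open", 4.0),
    ("2018-08", "INC3", "Resolved", 12.0),
    ("2018-08", "INC4", "Resolved", 8.0),
]


def state_shares(rows, month):
    """Hours per state divided by the distinct incidents of the whole month,
    so the state values add up to the monthly average per incident."""
    in_month = [r for r in rows if r[0] == month]
    month_incidents = len({incident for _, incident, _, _ in in_month})
    hours_by_state = defaultdict(float)
    for _, _, state, hours in in_month:
        hours_by_state[state] += hours
    return {s: h / month_incidents for s, h in hours_by_state.items()}


def per_state_averages(rows, month):
    """Each state divided by the incidents that passed through that state.
    Meaningful on its own, but these values do not add up to the monthly average."""
    in_month = [r for r in rows if r[0] == month]
    hours_by_state = defaultdict(float)
    ids_by_state = defaultdict(set)
    for _, incident, state, hours in in_month:
        hours_by_state[state] += hours
        ids_by_state[state].add(incident)
    return {s: hours_by_state[s] / len(ids_by_state[s]) for s in hours_by_state}


shares = state_shares(rows, "2018-08")
print(shares)                # {'Open': 3.0, 'Waiting': 2.0, 'Resolved': 5.0}
print(sum(shares.values()))  # 10.0 = 40 hours / 4 incidents
print(sum(per_state_averages(rows, "2018-08").values()))  # 22.0, inflated
```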

The PBIX can be found here:

Thanks for your help.


Hi @Cypher294,

Please use this measure.

Measure = CALCULATE(SUM(Sample_Data[Time in Hours]))/CALCULATE(DISTINCTCOUNT(Sample_Data[Incident ID]),ALLSELECTED(Sample_Data))

For more details, please see the attached PBIX.

Regards,

Frank

If this post helps, please consider accepting it as the solution to help others find it more quickly.


Hi @Cypher294,

Please use this measure:

Measure = CALCULATE(SUM(Sample_Data[Time in Hours]))/CALCULATE(DISTINCTCOUNT('Incidents 2'[Incident ID]),ALLEXCEPT('Date','Date'[Date].[MonthNo]))

Regards,

Frank


Hi @v-frfei-msft,

It looks good so far, but there is one more issue that is currently causing problems.

Not every ticket ID recorded in "Incidents 2" is also recorded in "Sample_Data".

The measure divides by all the unique IDs in the "Incidents 2" table, not just by the ones present in BOTH tables.

If I adjust the measure (page 2 --> Measure 2), it calculates correctly for the "Group" breakdown but not for "State".

I've uploaded adjusted sample data. Hopefully this is the last problem to solve...
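What the post asks for amounts to counting only incidents that actually have processing rows. In Python terms (hypothetical data) that is a set intersection. In a DAX model with a relationship from 'Incidents 2' to Sample_Data, a similar effect is usually obtained by taking the distinct count from Sample_Data[Incident ID] rather than from 'Incidents 2', though this depends on how the relationships are set up.

```python
# Hypothetical IDs from 'Incidents 2' and hypothetical rows from 'Sample_Data'.
incidents_2_ids = {"INC1", "INC2", "INC3", "INC4", "INC5"}
sample_data = [("INC1", 10.0), ("INC2", 5.0), ("INC2", 15.0)]


def average_over_matched_ids(incident_ids, processing_rows):
    """Divide total hours by the IDs present in BOTH tables, not by every
    ID listed in the incident table. Returns None if nothing matches."""
    processed_ids = {incident for incident, _ in processing_rows}
    matched = incident_ids & processed_ids
    if not matched:
        return None
    total = sum(hours for incident, hours in processing_rows if incident in matched)
    return total / len(matched)


total_hours = sum(hours for _, hours in sample_data)
print(total_hours / len(incidents_2_ids))                      # 6.0, too small
print(average_over_matched_ids(incidents_2_ids, sample_data))  # 15.0
```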


Hi @Cypher294,

Create another measure for the State breakdown:

STATE = CALCULATE(SUM(Sample_Data[Time in Hours]))/CALCULATE(DISTINCTCOUNT('Sample_Data'[Incident ID]),ALLEXCEPT(Sample_Data,Sample_Data[Ticket State]))

Regards,

Frank


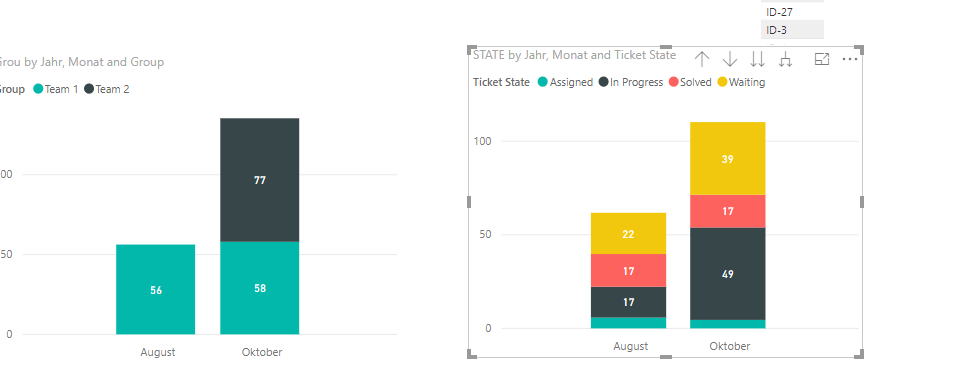

Hi @v-frfei-msft,

Sorry, it still doesn't work. For example, the total for October is 1198.96 hours. Divided by the 17 unique incidents in October, the average should be about 70 hours. These 70 hours should be split by team in one chart and by state in the other, and in both charts the values should add up to 70. If I then select, for example, Team 1 (which accounts for less than 70 hours), its value should also be split by state, with the states adding up to the same total as Team 1. Hopefully it's clear what I mean.

With the latest sample data, neither chart adds up to about 70, and the two charts also differ from each other (the Group chart sums to 135 hours and the State chart to 110 hours).
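The consistency described above (team values and state values both adding up to the same monthly figure, and Team 1's state split adding up to Team 1's value) holds when every breakdown divides by the same month-wide distinct incident count. For reference, the post's October figure works out to 1198.96 / 17 ≈ 70.53 hours. The Python sketch below uses hypothetical data, and treating a team selection as a filter on the numerator only, with the denominator kept month-wide, is an assumption about the intended behavior.

```python
from collections import defaultdict

MONTH, INCIDENT, TEAM, STATE, HOURS = range(5)  # column positions in each row

# Hypothetical rows: (month, incident_id, team, state, hours). Invented data.
rows = [
    ("2018-10", "INC1", "Team 1", "Open", 6.0),
    ("2018-10", "INC1", "Team 1", "Waiting", 10.0),
    ("2018-10", "INC2", "Team 2", "Open", 8.0),
    ("2018-10", "INC2", "Team 2", "Resolved", 16.0),
]


def breakdown(rows, month, by, team=None):
    """Sum hours per value of column `by`, divided by the distinct incidents
    of the whole month. `team` (optional) filters the numerator only, so a
    team's state split adds up to that team's share."""
    in_month = [r for r in rows if r[MONTH] == month]
    denominator = len({r[INCIDENT] for r in in_month})
    totals = defaultdict(float)
    for r in in_month:
        if team is None or r[TEAM] == team:
            totals[r[by]] += r[HOURS]
    return {key: value / denominator for key, value in totals.items()}


by_team = breakdown(rows, "2018-10", TEAM)    # {'Team 1': 8.0, 'Team 2': 12.0}
by_state = breakdown(rows, "2018-10", STATE)  # {'Open': 7.0, 'Waiting': 5.0, 'Resolved': 8.0}
team_1_by_state = breakdown(rows, "2018-10", STATE, team="Team 1")  # {'Open': 3.0, 'Waiting': 5.0}

print(sum(by_team.values()), sum(by_state.values()))  # 20.0 20.0
print(sum(team_1_by_state.values()))                   # 8.0, equal to Team 1's share
```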

Maybe there is a way to fix it.

Thanks

Dennis


Hi @v-frfei-msft,

Thanks for the fast support. I've tried it, but there is one more issue. The calculation only works for a single month. When the data covers additional months, the average seems to be calculated over all IDs instead of only the IDs in the respective month.

I've attached additional sample data with more months to show the issue. If you select a specific month, the result is correct, but not if no month is selected.
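The per-month denominator described above can be sketched in Python with hypothetical data: each month is divided by its own distinct incidents, while a single pooled denominator across all months gives a different figure. If one number is needed with no month selected, averaging the monthly averages is shown as one possible choice; the thread does not settle which figure the report should display. In this sketch an incident with rows in two months counts toward both months' denominators.

```python
from collections import defaultdict

# Hypothetical rows: (month, incident_id, hours). Invented data.
rows = [
    ("2018-08", "INC1", 30.0),
    ("2018-08", "INC2", 10.0),
    ("2018-09", "INC3", 12.0),
    ("2018-09", "INC3", 6.0),
]


def monthly_averages(rows):
    """Average hours per unique incident, with a separate denominator per month."""
    hours = defaultdict(float)
    ids = defaultdict(set)
    for month, incident, h in rows:
        hours[month] += h
        ids[month].add(incident)
    return {m: hours[m] / len(ids[m]) for m in hours}


per_month = monthly_averages(rows)
print(per_month)  # {'2018-08': 20.0, '2018-09': 18.0}

# With no month selected, one pooled denominator mixes the months:
all_ids = {incident for _, incident, _ in rows}
pooled = sum(h for _, _, h in rows) / len(all_ids)
print(pooled)  # 58 / 3, about 19.33

# One possible single figure: the average of the monthly averages.
print(sum(per_month.values()) / len(per_month))  # 19.0
```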

Thanks Dennis


Hi @Cypher294,

Update the measure as follows:

Measure = CALCULATE(SUM(Sample_Data[Time in Hours]))/CALCULATE(DISTINCTCOUNT(Sample_Data[Incident ID]),ALLEXCEPT(Sample_Data,Sample_Data[Date].[MonthNo]))

Regards,

Frank


Hi @v-frfei-msft,

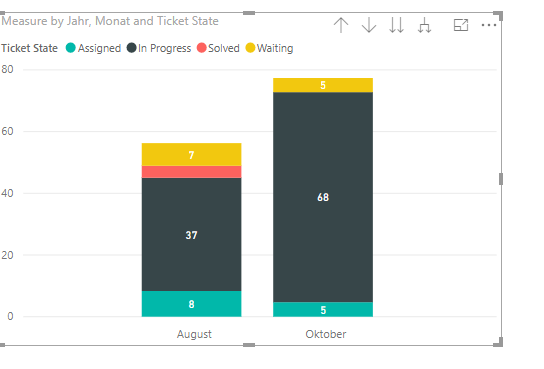

I thought I could adapt the sample data to my real database environment with all its tables and relationships, but when I use the measure there, the calculation seems to be wrong.

I've attached a new PBIX in which the tables are separated and related the way they are in the real model.

The breakdown by team seems to be correct on a monthly basis, but when I use the same measure to break down by state, it does not. I expect the 77 + 58 hours (team total = 135) to be distributed across the states, but currently the states add up to more than 135.

Maybe you could help again.

Thanks for your great support; it helps me a lot.
