- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Applying a Percentile on a Graph

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Applying a Percentile on a Graph

Hi all,

I am trying to work with a dataset that has these columns -

| Reporting Date | Form Type | ID | Actual Days |

| 12/2/2021 | Submittal | 01E-XXX-00002 | 467 |

| 12/2/2021 | Field Observation Report | 01C-XXX-00004 | 6 |

| 12/2/2021 | Field Observation Report | 01C-XXX-00004 | 377 |

| 12/2/2021 | Field Observation Report | 01C-XXX-00004 | 6 |

| 12/2/2021 | Submittal | 01C-XXX-00004 | 377 |

| 12/2/2021 | Submittal | 05BB-XXX-00001 | 8 |

| 12/2/2021 | RFI | 09AA-XXX-00001 | 411 |

| 12/2/2021 | RFI | 09AC-XXX-00003 | 405 |

| 12/2/2021 | Submittal | 09AS-XXX-00002 | 392 |

| 12/2/2021 | Submittal | 15AB-XXX-00002 | 399 |

| 12/2/2021 | RFI | 15AC-XXX-00001 | 1 |

I have created this line chart with the data -

I am wondering how do I exclude top 5% highest 'Actual Day's' values?

The measure I am using so far to attempt to filter =

BOTTOM 95% Latency =

VAR __rt = CALCULATE(Sum(Data[Actual Days]), FILTER(All(Data),Data[Actual Days] <= MAX(Data[Actual Days])))

VAR __95p = CALCULATE(SUM(Data[Actual Days]), ALL(Data)) * 0.95

RETURN

IF(__rt <= __95p, 1, 0)

I am filtering by 1 for the chart but am not sure of the results. It only classifies 4 rows as '0' out of 1,045 total rows and there are rows classified as '1' where Actual Days value is as high as the values classified as '0'.

Any input on this is greatly appreciated.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @felixthecatx ,

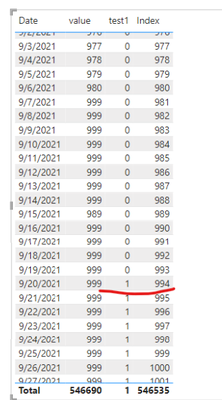

According to your description, 1045 data, 95 percent, 1045 * 0.95 = 992.75, that is, take the data after 993, if according to my previous method, when more than one duplicate value, may be all selected.

like below:

In my opinion, if you want to reach duplication with only one random peer, you need to create auxiliary index rows to ensure that 5 percent is 1.

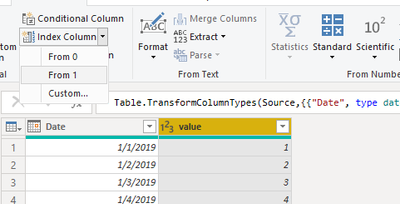

insert index:

Then adjust measure to the below:

test1 =

VAR RANK1 =

RANKX ( ALL ( 'Table' ), CALCULATE ( SUM ( 'Table'[value] )+0.0000001*SUM('Table'[Index]) ) )

VAR MIN1 =

CALCULATE ( COUNTROWS ( 'Table' ), ALL ( 'Table' ) ) * 0.05

RETURN

IF ( RANK1 <= MIN1, 1, 0 )final:

Best Regards

Lucien

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @felixthecat_nyc ,

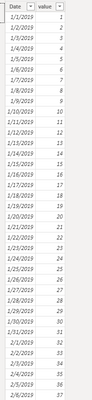

Since I don't have your original data of 1045 rows, I created a new template based on what you mentioned, as follows.

The sample have 1045 rows ,then I use the below measure:

test1 =

VAR RANK1 =

RANKX ( ALL ( 'Table'[Date] ), CALCULATE ( SUM ( 'Table'[value] ) ) )

VAR MIN1 =

CALCULATE ( COUNTROWS ( 'Table' ), ALL ( 'Table' ) ) * 0.05

RETURN

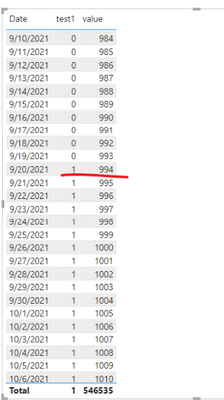

IF ( RANK1 <= MIN1, 1, 0 )Fianal get:(when value start from 994,then return 1)

The first step is to get the first 5 percent of the total, the second step is to sort the values, and then to get the desired data according to the worthy sort and the number of 5 percent.

In your sample ,use the below:

BOTTOM 95% Latency =

VAR __rt = RANKX ( ALL ( 'Data' ), CALCULATE ( SUM ( 'Data'[Actual Days] ) ) )

VAR __95p = CALCULATE ( COUNTROWS ( 'Data' ), ALL ( 'Data' ) ) * 0.05

RETURN

IF(__rt <= __95p, 1, 0)

Did I answer your question? Mark my post as a solution!

Best Regards

Lucien

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @v-luwang-msft . Thanks for your reply. The problem is I have repeating values so this method doesn't grab the right percentage. I am now trying to do something like this (although I haven't gotten this to work yet) -

I have a measure that = 95th percentile of the values column. I'm trying to use that to create a filter. I haven't had luck so far but this is essentially what I am trying =

Filter = IF((Data[Days]) >= [95% Days], 1, 0)- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @felixthecatx ,

According to your description, 1045 data, 95 percent, 1045 * 0.95 = 992.75, that is, take the data after 993, if according to my previous method, when more than one duplicate value, may be all selected.

like below:

In my opinion, if you want to reach duplication with only one random peer, you need to create auxiliary index rows to ensure that 5 percent is 1.

insert index:

Then adjust measure to the below:

test1 =

VAR RANK1 =

RANKX ( ALL ( 'Table' ), CALCULATE ( SUM ( 'Table'[value] )+0.0000001*SUM('Table'[Index]) ) )

VAR MIN1 =

CALCULATE ( COUNTROWS ( 'Table' ), ALL ( 'Table' ) ) * 0.05

RETURN

IF ( RANK1 <= MIN1, 1, 0 )final:

Best Regards

Lucien

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is great, thank you!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For more context - If I have 1,045 rows in my dataset, I am looking for 993 rows to be tagged as lower 95% in terms of 'Actual Days' values.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To expand on this issue, I have also formulated this measure =

95% Data =

VAR _sum =

SUM (Data[Actual Days])

VAR _top =

CALCULATE(

COUNTROWS( ADDCOLUMNS( VALUES(Data[Form ID]), "_sum", _sum)),

REMOVEFILTERS(Data[Form ID])

) * 0.95

RETURN

CALCULATE(

CALCULATE( AVERAGEA (Data[Actual Days])),

KEEPFILTERS(

TOPN(

_top,

ALLSELECTED( Data[Form ID]),

CALCULATE( AVERAGEA (Data[Actual Days]) ), ASC

)

)

)This seems to produce an average of the Actual Day values per Form ID with 5% excluded, which is great however - whenever I try to use this measure with other columns (namely the Reporting Date column) it breaks and the data no longer makes sense.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 111 | |

| 100 | |

| 80 | |

| 64 | |

| 58 |

| User | Count |

|---|---|

| 146 | |

| 110 | |

| 93 | |

| 84 | |

| 67 |