- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- DAX Commands and Tips

- Ranks with filter function date

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ranks with filter function date

Hello to all,

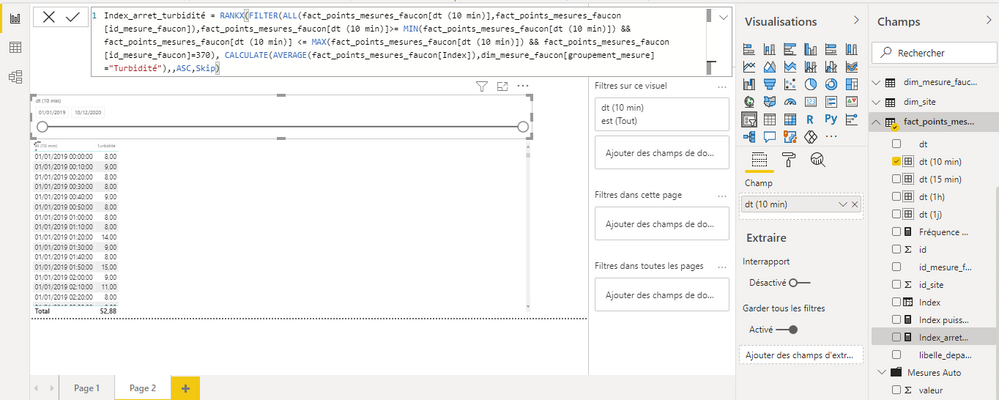

I use the following function filter for my Ranks measurement but I can't get the result I want:

Index_Arrets_Turbidity =

VAR min_ =

MIN ( dim_date[Date] )

VAR max_ =

MAX ( dim_date[Date] )

RETURN

RANKX (

FILTER (

ALL ( fact_points_measures_faucon ),

fact_points_measures_faucon [id_measure_faucon] = 370

&& fact_points_measures_faucon [dt (1j)] >= min_

&& fact_points_measurements_faucon [dt (1d)] <= max_

),

CALCULATE (

AVERAGE ( fact_points_measurements_faucon [Index] ),

dim_measurements_faucon [group_measurement] = "Turbidity".

),

,

CSL,

SKIP

)

I would like the values of DATE() not to be filled in manually but to be filled in automatically according to my time segment :

I share my pbix by this link: https://1drv.ms/u/s!Ao1OrcTeY008gYU2OOM2oBSrDBzgpQ?e=1kZwbg

Thanks in advance,

Joël

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The problem was that your Val_Turb_Freq_1h measure was not returning values for the day level rows. Here is a new measure that seems to work. It's a little off as your hardcoded values use a value of 81 as the threshold but the overall result for Val_Turb_Freq_1h is 80. The result from this measure matches those when 80 is used.

NewMeasure =

VAR ValTurbFreq1h =

CALCULATE (

[Val_Turb_Freq_1h],

ALLSELECTED ()

)

VAR vStop =

COUNTROWS (

FILTER (

VALUES ( fact_points_mesures_faucon[dt (10 min)] ),

[Turbidité] >= ValTurbFreq1h

)

) / 6

RETURN

vStop

Also, the logic to get your threshold values seems to be the 144th value of Turbidity (regardless if the scope is day, month, year or all time). Is that your intended logic?

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

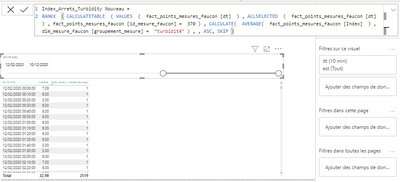

Thanks for sharing your pbix. I looked at it but am still a little confused. Please tell me if this measure is closer to what you are looking for. If not, please explain the calculation you are trying to do.

Index_Arrets_Turbidity New =

RANKX (

CALCULATETABLE (

VALUES ( fact_points_mesures_faucon[dt] ),

ALLSELECTED ( fact_points_mesures_faucon[dt] ),

fact_points_mesures_faucon[id_mesure_faucon] = 370

),

CALCULATE (

AVERAGE ( fact_points_mesures_faucon[Index] ),

dim_mesure_faucon[groupement_mesure] = "Turbidité"

),

,

ASC,

SKIP

)

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

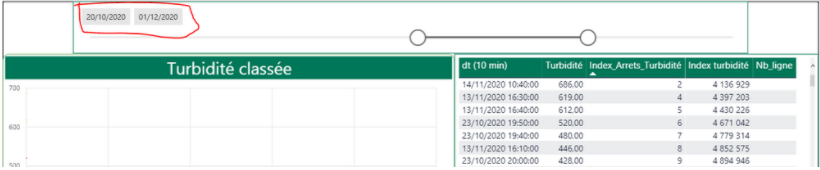

@mahoneypat thank you for your help so fast and precious nevertheless I get this result:

Unfortunately this is not the result I want.

What I would like to have is a Ranks function that automatically recalculates itself according to the time segment.

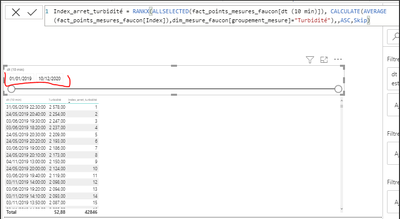

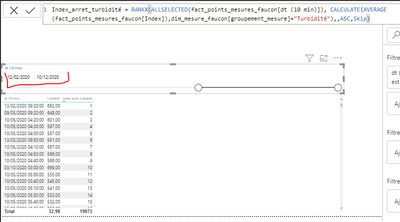

Roughly the same result as this function:

But without using "ALLSELECTED" because this function truncates the result of the other measurements that are related to it.

Thanks for your help

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you give an example of another calculation that is impacted by the ALLSELECTED on that column?

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mahoneypat I will try to explain my problem. First of all, I am French, which explains the writing of certain measures. I analyze the production of a hydroelectric power plant according to a turbidity threshold. Turbidity is the power of water. The higher its value is, the greater the risk of deteriorating our equipment. The definition of this threshold is important because it is the one that will determine whether or not we shut down the hydroelectric plant. My first objective was to find the turbidity value for a day of shutdown of the power station according to a period of time that I will have chosen thanks to the segment. To create this measurement I first used the "index" column of my Dataset and created the following measurement:

I used value 144 because my time base is in DT10MIN and therefore 24*6 is equal to 24h. The result obtained corresponds to my expectations. I then created a measure to calculate the downtime in h by first creating an "IF" function:

Arrêts turbidité_1j =

IF([Turbidité]>=[Val_Turb_Freq_1h],1,0)

Then a measurement that summarizes the downtime :

Arrêts turbidité_temps_1j (en h) =

SUMX(SUMMARIZE(VALUES(fact_points_mesures_faucon[dt (10 min)]),fact_points_mesures_faucon[dt (10 min)],"STOP",[Arrêts turbidité_1j]/6),[STOP])

problem, I get the result in the green table on the left.

However, when in the measurement "Arrêts turbidité_1j " I manually enter the turbidity threshold like this:

Arrêts turbidité_1j =

IF([Turbidité]>=80,1,0)

I get the result from the green table on the right and it's the correct one.

I would therefore like this result without having to manually enter the turbidity threshold since I have it automatically with the "Val_Turb_Freq_1h" measurement.

I hope it was understandable, thank you in advance,

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I spent some time looking at it and am still a little confused at the calculation you are trying to do (the green tables aren't in the model). I'm sure there is a simpler way to calculate this. Can you explain in one sentence what you are looking for? In any case, one suggestion is to calculate [Val_Turb_Freq_1h] as a variable up front.

Arrêts turbidité_temps_1j (en h) =

var ValTurbFreq1h = [Val_Turb_Freq_1h]

Return

SUMX(SUMMARIZE(VALUES(fact_points_mesures_faucon[dt (10 min)]),fact_points_mesures_faucon[dt (10 min)],"STOP",IF([Turbidité]>=ValTurbFreq1h,1,0)/6),[STOP])

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you again for your time @mahoneypat . I have updated the PBIX link by adding the two green boards. I tested the formula but unfortunately it doesn't work ...

https://1drv.ms/u/s!Ao1OrcTeY008gYU2OOM2oBSrDBzgpQ?e=NBEX1B

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you. I'll take a look this evening after work (but others should feel free to chime in to help too). Can you also provide a one-sentence summary of the desired calculation? I may start from scratch (the current approach isn't performant even when it gets fixed).

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @mahoneypat, I will try to explain what I want to calculate. I've added a visual in the link of my PBIX to make it more understandable. I would like the green table on the left to calculate for each day the shutdown duration according to the turbidity threshold that is defined upstream.In fact, I would like the same results as the green table on the right but using the value of the measurement: "Val_turb_freq_1h" instead of "80" in the IF measurement "Stop_turbidity_Freq_1h = IF([Turbidity]>=80, 1.0)" so that the calculation is done automatically without me entering the value manually.

https://1drv.ms/u/s!Ao1OrcTeY008gYU2OOM2oBSrDBzgpQ?e=jz8hV5

Thank you for your time,

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The problem was that your Val_Turb_Freq_1h measure was not returning values for the day level rows. Here is a new measure that seems to work. It's a little off as your hardcoded values use a value of 81 as the threshold but the overall result for Val_Turb_Freq_1h is 80. The result from this measure matches those when 80 is used.

NewMeasure =

VAR ValTurbFreq1h =

CALCULATE (

[Val_Turb_Freq_1h],

ALLSELECTED ()

)

VAR vStop =

COUNTROWS (

FILTER (

VALUES ( fact_points_mesures_faucon[dt (10 min)] ),

[Turbidité] >= ValTurbFreq1h

)

) / 6

RETURN

vStop

Also, the logic to get your threshold values seems to be the 144th value of Turbidity (regardless if the scope is day, month, year or all time). Is that your intended logic?

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry I didn't see your question: " Also, the logic to get your threshold values seems to be the 144th value of Turbidity (regardless if the scope is day, month, year or all time). Is that your intended logic?"

Yes, this is my logic because I want to estimate the turbidity threshold for 1 day of shutdowns of our production plants over a given period of time. I will do the same work for 5, 10 and 15 days. (So 144*5, 144*10 and 144*15 because 144 minutes = 24 hours).

Thanks again Pat @mahoneypat !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much for your patience and sharing your knowledge @mahoneypat . This is the result I was hoping for! To try to better understand your measurement in order to progress, can you please explain to me the interest of using "VAR"? Concerning the value 81 you are right it was 80 the global value, I had simply used 81 as an example. Now that my problem is more understandable, do you think that I used the right method to calculate these downtimes or would you have done it differently? (Simple curiosity)

Thank you again for helping me, it was very generous of you!

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The use of variables is a good technique to learn, as it makes your code more readable but more importantly it prevents unnecessary recalculation of the same result many times.

DAX: Use variables to improve your formulas - Power BI | Microsoft Docs

The biggest suggestion I have for you is to change how you store the datetime values. Having high granularity columns (columns with many distinct values) quickly increases your file size. The dt column of your fact table has 2 million values and is the biggest contributor to filesize (id is big too). It is best practice to store dates and times in separate columns to reduce file size, and get rid of any columns you don't need. It would also be better to store your rounded time values (dt(10 min)) as times only (without dates), as you have the date already in another column.

FYI that you can use a tool like DAX studio to see what is driving for file size, as well as many other good features. Here is the view of the sizes for your columns.

You should also turn off Auto Date/Time in your model and use a separate Date table in your models.

Creating a simple date table in DAX - SQLBI

Here is a different way to find your 144th value. However, I would suggest you consider a different logic. You could get the last result at the end of the first day, average of last results for all days in scope, etc.

New 144 Value =

VAR Summary =

ADDCOLUMNS (

FILTER (

SUMMARIZE (

fact_points_mesures_faucon,

dim_mesure_faucon[groupement_mesure],

fact_points_mesures_faucon[Index]

),

dim_mesure_faucon[groupement_mesure] = "Turbidité"

),

"cTurbid",

CALCULATE (

AVERAGE ( fact_points_mesures_faucon[valeur] )

)

)

VAR Top144 =

TOPN (

144,

Summary,

fact_points_mesures_faucon[Index], ASC

)

VAR Top1 =

TOPN (

1,

Top144,

fact_points_mesures_faucon[Index], DESC

)

VAR vResult =

MAXX (

top1,

[cTurbid]

)

RETURN

vResult

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's very interesting what you advise me about date management. I'm considering doing it but I'm afraid it's unmanageable given the number of "ID_measures" I have in my document. I don't know how to do it. Is it long? Most of the examples I find on the internet are about product sales. Here, as you can see, my document records values from different sensors and I try to create KPIs with them. I can't find a similar example allowing me to create a date table for this application.

Thanks in advance,

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The use of a date table like in that link can be used in your model, but it is not critical on this one (but keep these points in mind for future model). For the ID column, since you have a distinct value on each row, you can just use Countrows instead in many cases. If you switched to an approach to take the last value of the day, you wouldn't need the index column. Not critical, but you could significantly decrease the size of your model.

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I understand. Thank you for these tips again!

I look forward to hearing from you and thank you again for your help!

See you soon!

Joël

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In your FILTER, you are using ALL on the whole fact table which is removing filters from your date table too. Try just putting the columns you are filtering on in the ALL instead ( maybe use ALLSELECTED too).

FILTER (

ALL ( fact_points_measures_faucon[id_measure_faucon], fact_points_measures_faucon[dt (1j)], fact_points_measure_faucon[dt (1d))] ),

fact_points_measures_faucon [id_measure_faucon] = 370

&& fact_points_measures_faucon [dt (1j)] >= min_

&& fact_points_measurements_faucon [dt (1d)] <= max_

),

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @mahoneypat

Thanks for your help but it doesn't work either, my visual loads without finding any result...

Another idea can be ? I shared my pbix to make it easier to understand.

Thanks in advance,

Joël

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 43 | |

| 23 | |

| 21 | |

| 15 | |

| 15 |

| User | Count |

|---|---|

| 45 | |

| 31 | |

| 30 | |

| 18 | |

| 17 |